Introduction

Let’s introduce application development topics.

This is a course about designing and building software.

Software used to be considered a bespoke product i.e., built in-house for a specific purpose. However, with the rise of consumer electronics, personal computing, and smartphones, software has grown into a multi-trillion dollar industry. Computers and software have transitioned from niche devices into essential tools in practically every industry, intertwined in almost every task that we perform!

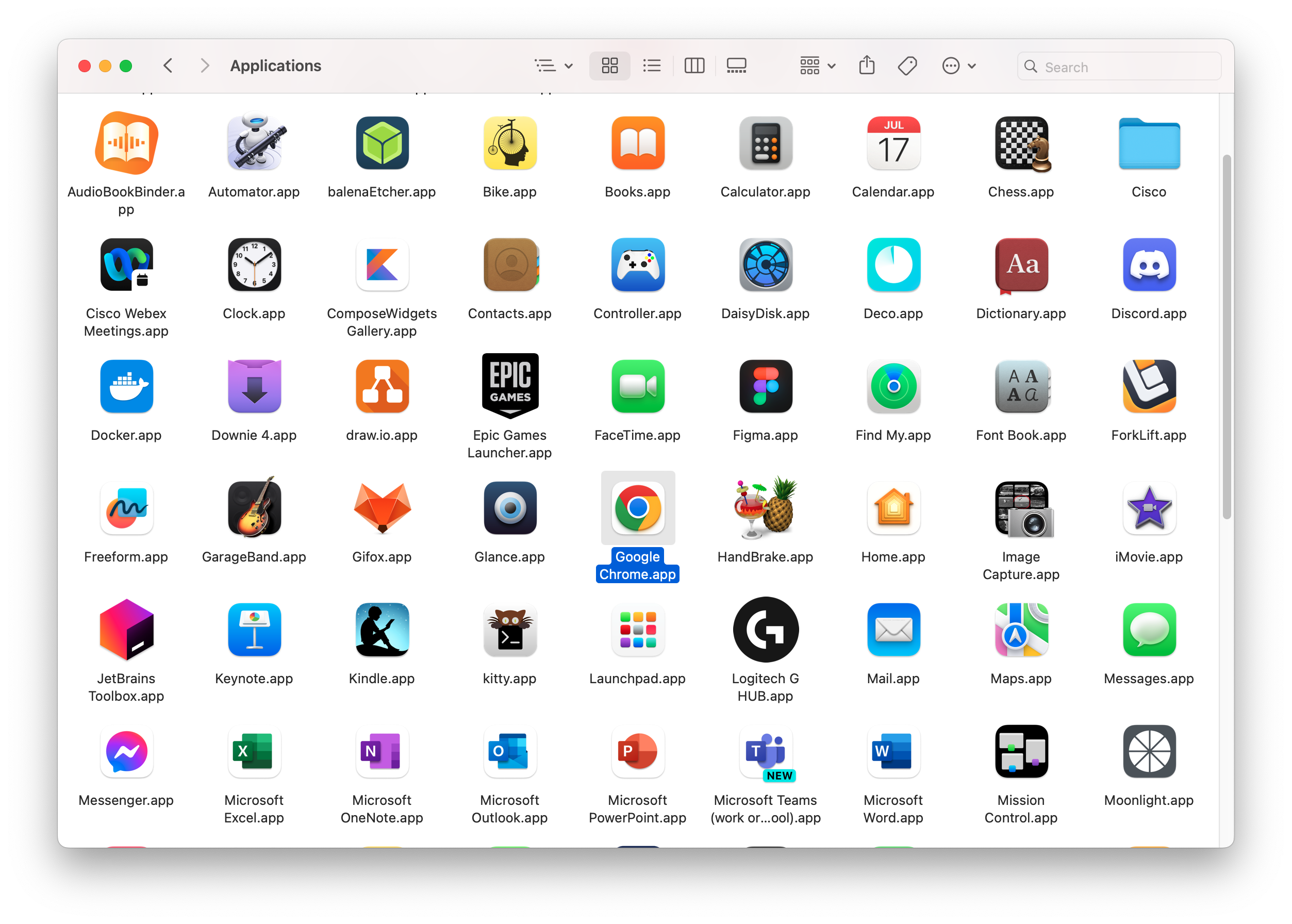

If you look at your phone, or your notebook computer, you can identify many different styles of software that you probably use:

- System software: software that is meant to provide services to other software e.g., Linux or other operating systems, drivers for your NVIDIA graphics card, the printer control panel on your computer.

- Application software: software that is designed to perform a task for users e.g., your phone’s calendar application, Minecraft, Visual Studio Code, Google Chrome.

We also have specialized (but important!) types of software:

- Programming languages and compilers: languages and tools designed to produce other software.

- Kiosk software: software meant to be installed on an interactive kiosk, with restricted functionality outside of its intended purpose e.g., a bank machine; an interactive map at a shopping mall.

- Embedded software: software meant to control systems, included as part of a hardware-based system e.g., firmware in your phone; control systems for a robotic arm in a factory; monitoring systems in a power plant.

In this course, we’re primarily concerned with application software, or software that is used by people to perform useful, everyday tasks. Most of the software that you interact with on a daily basis would be considered application software: the weather app on your phone, the spreadsheet that you use for work, the online game that you play with your friends.

Applications aren’t restricted to a single style either; they can include console applications (e.g., vim), desktop software (e.g., MS Word for Windows), mobile software (e.g., Weather on iOS), or even web applications (e.g., Gmail).

The categories are not always this clear-cut. For example, a web browser is an application, but it also provides services to other applications e.g., rendering HTML, executing JavaScript. Similarly, an operating system is system software, but it also provides services to applications e.g., managing memory, scheduling processes. When you install your operating system, it probably comes preinstalled with a bunch of applications! These distinctions are not always obvious (or meaningful).

For us, we just want to think in terms of software that is usable by an end user for a specific task.

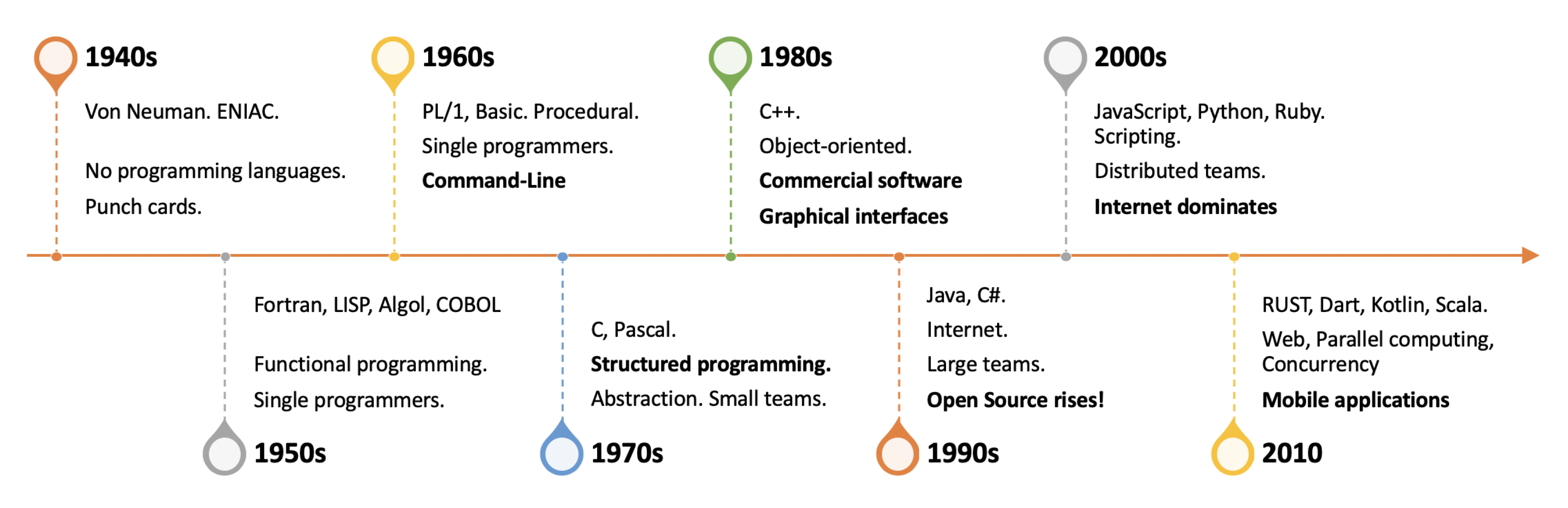

History of software

Computer science has a relatively short history, most of which began in the 1940s. Let’s consider how software development itself has changed over time.

1960s: Terminals & text displays

In the early days of computing, mainframe computers dominated. These were large, expensive systems, available only to large institutions that could afford to maintain them. The era began with batch processing, where users would submit their jobs (programs) and wait for the results. By the mid 1960s, time-sharing systems had been introduced, allowing multiple users to interact with the computer at the same time through text-based terminals.

Development snapshot

Hardware was limited to managing text, so software was (mostly) text-based. Systems were powerful enough to support interactive sessions with users, so software was either command-driven or menu-driven (e.g., you were presented with a screen, navigated through lists of options, and answered prompts).

Software development at this time was interesting. The era started with batch processing (punch cards) and, by the 1970s, had moved to dedicated terminals connected to a time-sharing mainframe. Programming meant editing your COBOL or Fortran program on your terminal, with programs stored on bulk storage.

| Category | Examples |
|---|---|
| Programming languages | Fortran (1957), LISP (1958), Cobol (1959), Ada (1979) |
| Programming paradigm | Functional & structured programming |
| Editor of choice | Teco (1962), ED (1969) |
| Hardware | DEC VT100 terminal, IBM mainframe, DEC PDP-10/11 or VAX-11 |
| Operating System | IBM System 7, VAX VMS |

1970s: Personal computing

By the mid 1970s, the situation had changed. Small and relatively affordable computers were becoming commonplace. These personal computers were relatively inexpensive, and designed to be used by a single user i.e., hobbyists, or small businesses that couldn’t afford a mainframe or minicomputer (the term personal reinforced the idea that these were single-user machines).

I remember desperately wanting one of these...

While companies were investing in these so-called personal computers, at the end of the 1970s they were still relatively niche. They had limited capabilities, and very little software was available for them.

Development snapshot

Personal computer software was text-based and command or menu driven, with some limited ability to display color and graphics.

| Category | Examples |
|---|---|
| Programming languages | Basic, Assembler, C |
| Programming paradigm | Structured programming |
| Editor of choice | Emacs (1976), Vi (1976) |
| Hardware | IBM PC, terminal software, DEC PDP-10/11, VAX-11 |
| Operating System | IBM PC DOS, MS PC DOS, VMS |

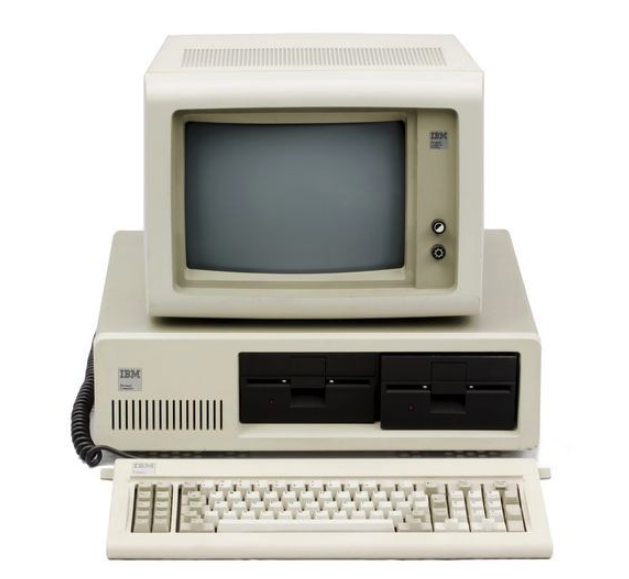

1980s: Graphical user interfaces

The early 1980s was a time of incredible innovation in the PC industry: software was sold on cassettes, floppy disks or cartridges, and program listings were printed in books and magazines (i.e., you were expected to type in a program in order to run it). BBSes existed, where you could connect and download software.

The growth of this sector provided a huge opportunity for specialized vendors to emerge; we saw the rise of companies like Borland and Lotus. Commercial software was being distributed on cassettes or floppy disks and was more professionally produced.

| Feature | Details |
|---|---|
| Developer | International Business Machines (IBM) |
| Release Date | August 12, 1981 |
| Price | $1565 ($5240 in 2023 terms) |
| CPU | Intel 8088 @ 4.77 MHz |
| Memory | 16 KB to 256 KB |
| Storage | 5.25" floppy drive, 160 KB or 320 KB |
| Display | IBM 5153 CGA Monitor (16 colors, 640 × 200, text) |

Console applications, in particular, had advanced significantly.

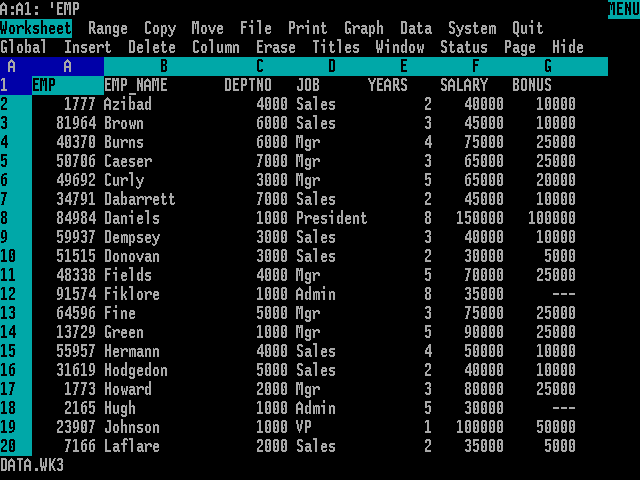

Released on January 26, 1983, Lotus 1-2-3 was not the first spreadsheet (VisiCalc had appeared in 1979), but it quickly became the dominant one. It was so popular that it is often considered a driving factor in the growth of the personal computer industry: businesses were buying computers specifically to run Lotus 1-2-3. Software companies like Microsoft, founded in the mid 1970s, rose to prominence in this era (Microsoft’s first product was BASIC, a programming language, followed by DOS, a PC-based operating system).

Applications from this era were text-based, at least for a few more years. Text-based applications still exist today: bash, git, and other tools are commonly used for system administration tasks. Many software developers also like to use console and text-based tools for software development.

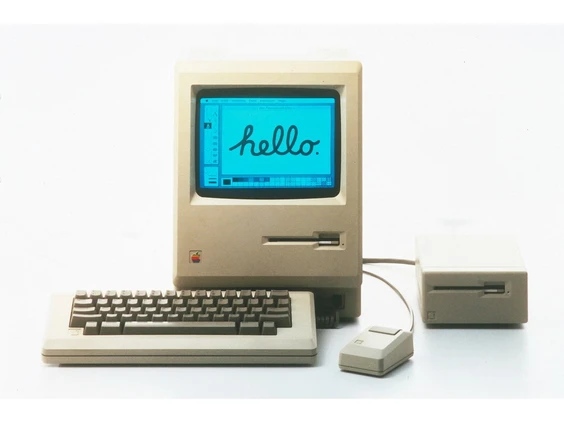

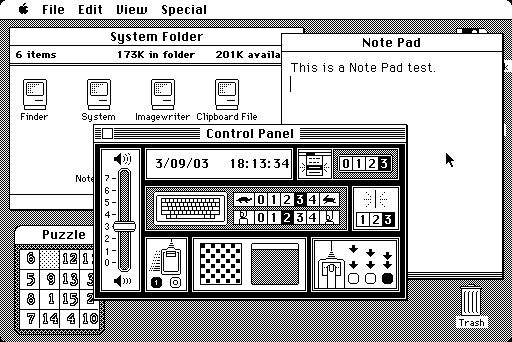

The biggest shift of the 1980s (and one of the major milestones in computer history) was the introduction of the Apple Macintosh in 1984. It didn’t look that much different than other desktops, but it launched the first commercially successful graphical user interface and graphical operating system.

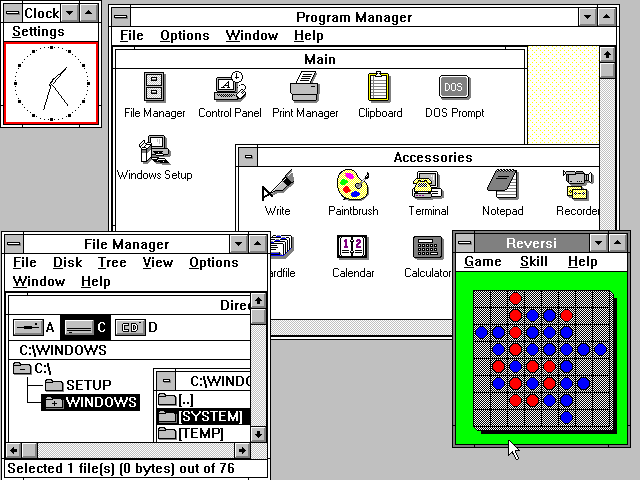

Other vendors (e.g., Microsoft, Commodore, Sun) followed with their own designs, but it took a number of years before the dust “settled”. By 1990, Microsoft and Apple were the two major platforms. Companies like Wang and Commodore were unable to compete and eventually dropped out of the market.

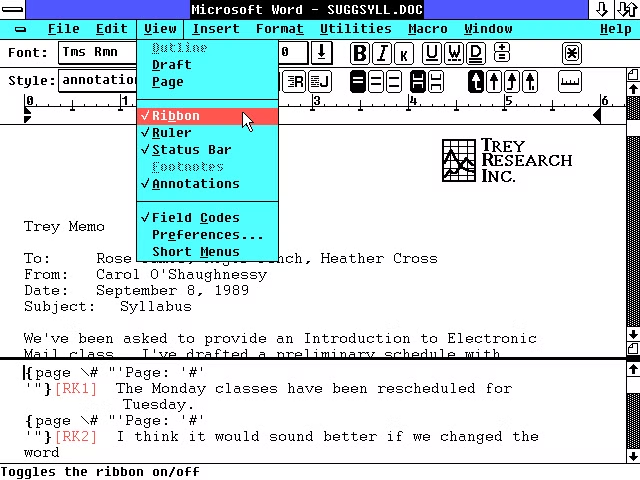

The move to GUIs meant that software had to be ported to these environments, as software companies reimagined their products in this context.

The move towards dedicated single-user computers had a significant impact on computing:

- Dedicated hardware meant that we could move towards quick, interactive software i.e. type a command, get an immediate response.

- Multitasking operating systems arose to support applications running simultaneously.

- Graphical user interfaces became the primary interaction mechanism for most users.

In many ways, we’ve spent the last 40 years improving the performance of this interaction model. Everything has become “faster”, which for most people, translates into more applications running (and more Chrome tabs open).

The GUI era also established the interaction conventions that we still rely on:

- Use of a keyboard and pointing device (mouse) for interaction.

- Point-and-click to interact with graphical elements.

- Windows are used as a logical container for an application’s input/output.

- Cut-Copy-Paste is used as a paradigm for manipulating data (originally text, but we’re accustomed to copy-pasting images and other binary objects as well).

- Undo-Redo as a paradigm for reverting changes.

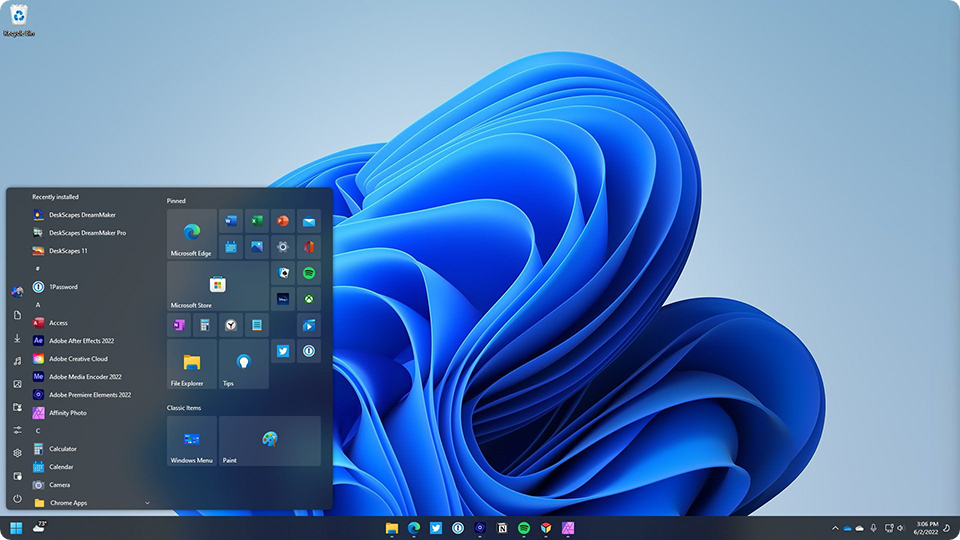

Modern desktop operating systems, including Linux, macOS and Windows, are very similar to these early designs. Little has actually changed in terms of how we interact with desktop systems.

How have graphical applications and operating systems stayed so consistent in design over almost 50 years? There are measurable benefits to having the user’s environment be familiar and predictable. It turns out that most users just want things to remain the same.

Development snapshot

There was a scramble as vendors delivered tools and development environments for building interactive, highly graphical applications. Object-oriented programming with C++ dominated through the early 90s, but when Java was introduced in 1996 it became an instant hit and quickly became a favorite programming language. For a few years, at least.¹

| Category | Examples |
|---|---|
| Programming languages | C, C++, Pascal, Objective C |
| Programming paradigm | Structured & Object-Oriented Programming |
| Editor of choice | IBM Personal Editor (1982), Borland Turbo C++ IDE (1990) |
| Hardware | IBM PC, Apple Macintosh |
| Operating System | MS PC DOS, MS Windows, Apple Mac OS |

1990s: The Internet

Prior to about 1995, most applications were standalone. Businesses might have their own internal networks, but there was very limited ability to connect to any external computers e.g., you might be able to connect to a mainframe at work, but not to a mainframe across the country. You might have a modem at home, but it was used to connect to a local bulletin board system only. The rise of the Internet in the 1990s changed this. Suddenly, it was possible to connect to any computer in the world, and to share information with anyone, anywhere.

The Internet was originally conceived around a series of protocols e.g., FTP, Gopher. The World Wide Web (WWW) was simply one way that we expected to share information and services. However, it was by far the most approachable, and public demand led to the WWW dominating users’ experience of what a network could do. This led to the rise of web applications: applications that run in a web browser, connecting to one or more remote systems.

This shift towards the World Wide Web happened at a time when developers were struggling to figure out how to handle software distribution more effectively. It also provided a single platform (the WWW) that could be used to reach all users, regardless of operating system, as long as they had a web browser installed. For better or worse, the popularity of the WWW pushed software development towards using web technologies for application development as well. We’re still dealing with that movement today (see Electron and the rise of JS frameworks for application development).

Development Snapshot

| Category | Examples |
|---|---|
| Programming languages | C++, Objective-C, Perl, Python, JS |
| Programming paradigm | Object-Oriented Programming |
| Editor of choice | Vim, Emacs, Visual Studio |
| Hardware | IBM PC, Apple Mac |
| Operating System | Windows, OS/2, Mac OS, (IE, Netscape) |

2000s: Mobile-first

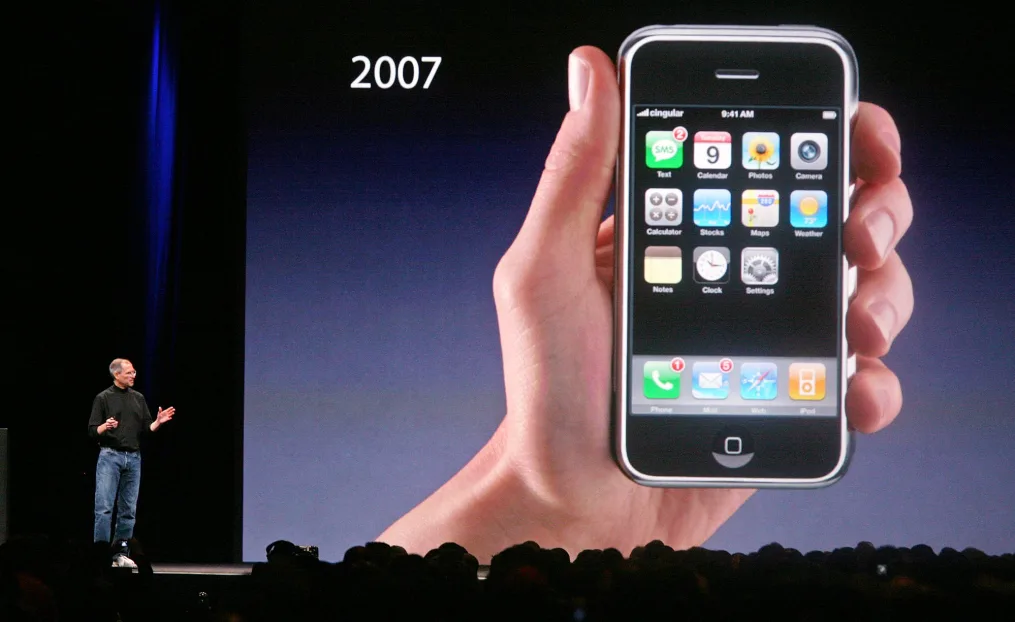

Prior to 2007, most home users had one or more home PCs, and possibly a notebook computer for work (and probably a few game consoles or other devices). Phones were just devices for making phone calls.

The iPhone was launched in 2007, and introduced the idea of a phone as a personal computing device. Society quickly adopted smartphones as must-have devices. Today, there are more smartphone users than desktop users, and the majority of Internet traffic comes from mobile devices (with 99.9% of that mobile traffic split between iPhones and Android phones).

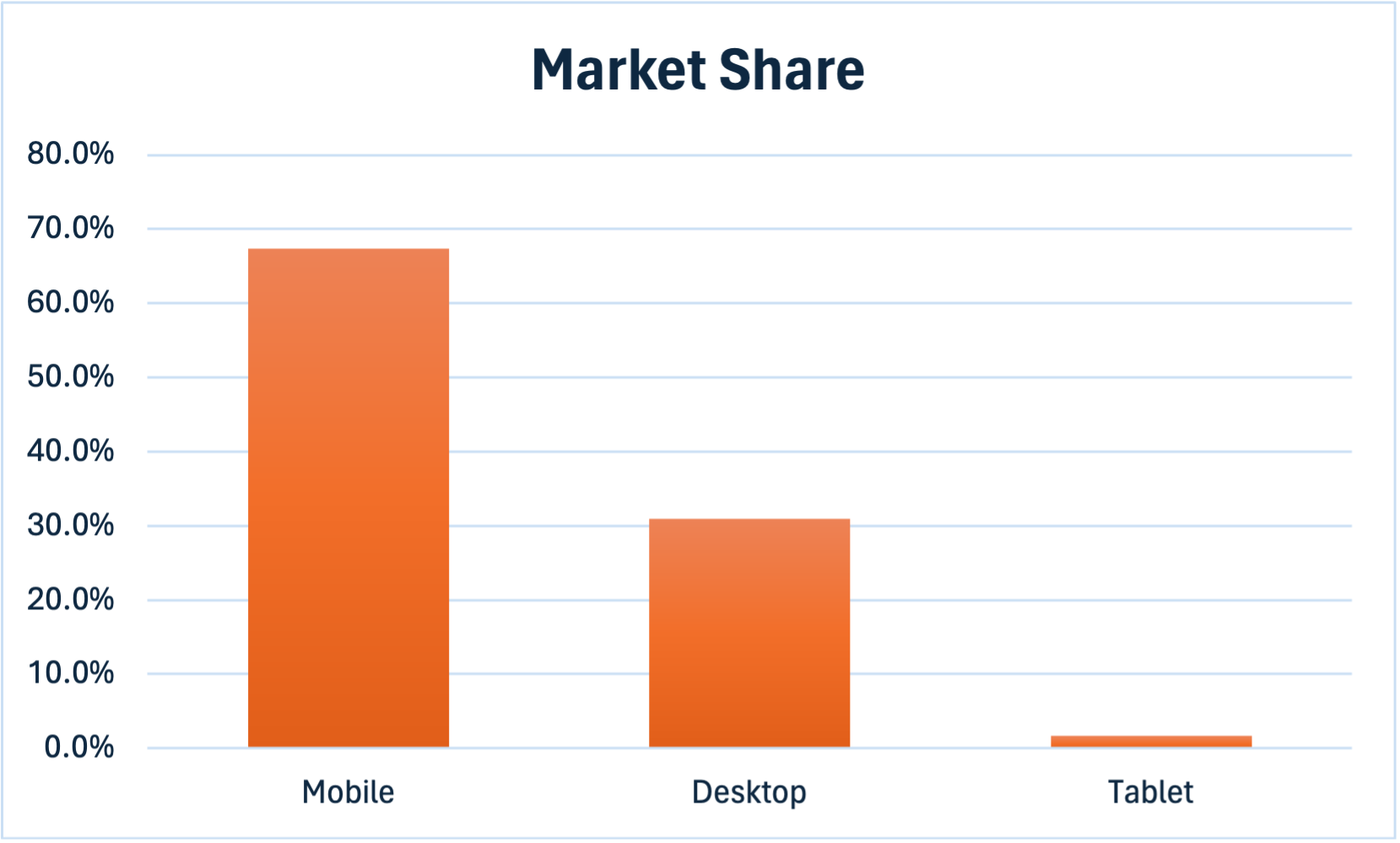

This has led to the notion of mobile-first design, where applications are designed primarily for mobile devices. Depending on the type of software, desktop software may be a secondary concern (and delivered as a web site, or not at all). This is a significant change from the past, where personal computers used to represent the dominant sector.

Mobile devices have changed the way we think about and deliver software. Again.

Development snapshot

| Category | Examples |
|---|---|
| Programming languages | Objective-C, Swift, C++, Python, JS, Java, Kotlin |
| Programming paradigm | Object-oriented & functional programming |
| Editor of choice | Emacs, Vi, Visual Studio |
| Hardware | IBM PC (and comparables), iPhone, Android phone |
| Operating System | Windows, Mac OS, iOS, Android, (Chrome, Safari, Firefox) |

The business of building software

Computing environments, and the context in which we run software, have changed radically in the last 15 years. It used to be the case that most software was run on a desktop or notebook computer. Tasks were meant to be performed at a specific location, with dedicated hardware. In the present day, most software is run on mobile devices, with desktops being relegated to more specialized functionality e.g., word processing, composing (as opposed to reading) email.

Does this mean that we can just focus on mobile devices? Probably not. Many of your users will have multiple devices: a notebook computer, a smartphone, and possibly a tablet or smartwatch, and they expect your software to run everywhere. Often simultaneously.²

Platform challenges

This means that you will produce one or more of these application styles, depending on which hardware you target first.

- Console application: Applications that are launched from a command-line, in a shell.

- Desktop application: Graphical windowed applications, launched within a graphical desktop operating system (Windows, macOS, Linux).

- Mobile application: Graphical application, hosted on a mobile operating system (iOS, Android). Interactions optimized for small form-factor, leveraging multi-touch input.

- Web application: Launched from within a web browser. Optimized for reading short-to-medium blocks of text with some images and embedded media.

Additionally, your application is expected to be online and network connected. Application data should reside in the cloud, and synchronize between devices so that you can switch devices fluidly.

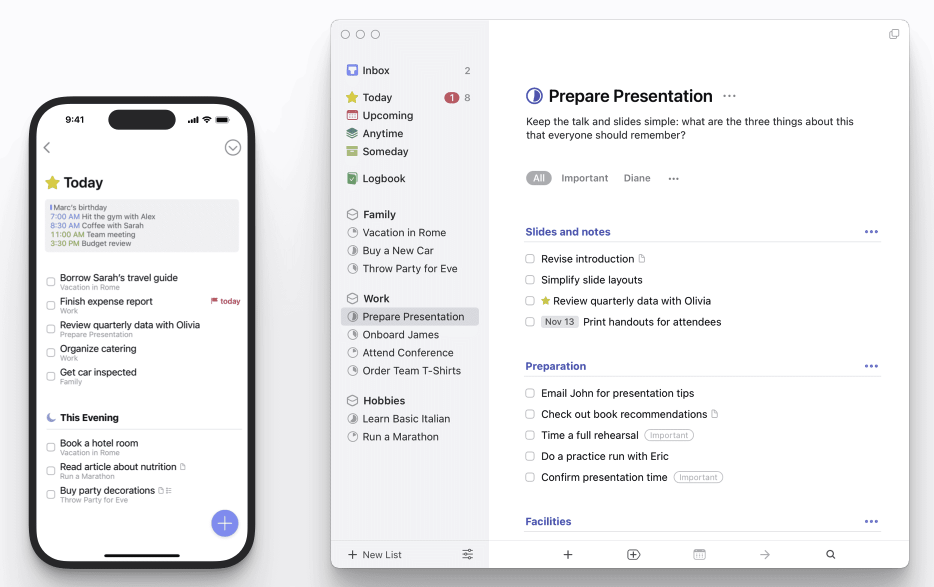

Things is a highly successful task management application that runs on desktop, phone, tablet (and even watch). It is cloud-enabled, and provides a consistent user experience across all of these devices. Multi-device support is a major contributor to its success.

I think I purchased my desktop and mobile licenses in 2012, and I haven’t paid them a penny since then. I almost feel guilty.

Your choice of application style should depend on:

- The requirements of your application. An application that is meant to be used casually throughout the day might make the most sense as a mobile application. Something like a desktop authoring program probably makes more sense as a desktop application, where it can take advantage of a large screen.

- Market share of the platform associated with a particular style. Mobile applications are much more popular than desktop or console applications. This may be a huge consideration for a commercial application that is concerned with reaching the largest number of users.

The “best” answer to choice of platform might actually be “all of them”. Netflix, for example, has dedicated applications for Apple TV, Roku, and other set-top boxes, as well as for desktop, phone, tablet and web. This allows users to access their content from any device, and to have a consistent experience across all of them.

How we develop applications

So, as developers, how has software development changed? Is application development the same now as it was in the 1970s? The 1990s? As you might expect, software development as a discipline has had to change drastically over time to keep up with these innovations.

- Console development is relatively simple, and often doesn’t need much more than a capable compiler, and standard libraries that are included with the operating system.

- Desktop development added graphical user interfaces, which in turn, require more sophisticated libraries to be included with the operating system. Eventually we needed to start adding other capabilities e.g., networking support.

- Mobile development expanded this even further, adding support for highly specific hardware capabilities e.g., cellular modem, gyroscope, fingerprint sensors.
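To make the first point concrete, here is a minimal console application sketched in Kotlin, assuming Kotlin/JVM (the word-counting task and the `countWords` helper are hypothetical). It needs nothing beyond the compiler and the standard library: input arrives on standard input, output goes to standard output, and the shell takes care of everything else.

```kotlin
// Hypothetical console tool: counts the words read from standard input.
// Only the Kotlin standard library is used; no UI or networking libraries.

fun countWords(line: String): Int =
    // Split on runs of whitespace and ignore empty fragments (e.g., leading spaces).
    line.split(Regex("\\s+")).count { it.isNotEmpty() }

fun main() {
    var total = 0
    while (true) {
        // readLine() returns null at end of input (e.g., Ctrl-D in a terminal).
        val line = readLine() ?: break
        total += countWords(line)
    }
    println("Total words: $total")
}
```

Run it with input piped from the shell, e.g. `echo "hello world" | java -jar wc.jar`, where `wc.jar` is whatever name you gave the compiled program.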

Modern applications, then, are typically graphical, networked, and capable of leveraging online resources. They must also be fast, visually appealing, and easy to use.

Let’s examine how we achieve this. We can consider application development as the interplay between the operating system, a programming language, and libraries that bridge the two and support our required functionality.

The role of the operating system

An operating system is the fundamental piece of software running on your computer that manages hardware and software resources, and provides services to applications which run on it. Essentially, it provides the environment in which your application can execute. The core of an operating system is the kernel, which stays resident in memory while the system is running and manages the system.

The role of an operating system includes:

- Allocating and managing resources for all applications, and controlling how they execute. This includes allocating CPU time (i.e., which application is running at any given time) and memory (i.e., which application has access to which memory).

- Providing an interface that allows applications to access the underlying hardware indirectly, in such a way that each application is isolated from the others.

- Providing higher-level services that applications can use e.g., file systems, networking, graphics. The OS abstracts away the underlying hardware so that applications don’t need to be concerned with the underlying implementation details i.e. you don’t need to write programs that directly interact with a hard drive, or a particular graphics card.
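Here is a small sketch of the first and third points from an application’s point of view, assuming Kotlin on the JVM (the `ResourceSnapshot` type is hypothetical). The program never inspects the CPU or RAM directly; it asks the runtime, which in turn asks the OS, and it leaves the decision of when each thread actually runs to the OS scheduler.

```kotlin
// Hypothetical summary of what the OS/runtime reports to us.
data class ResourceSnapshot(val processors: Int, val maxHeapBytes: Long)

fun snapshot(): ResourceSnapshot {
    val rt = Runtime.getRuntime()
    return ResourceSnapshot(
        processors = rt.availableProcessors(), // CPUs the OS makes available to this process
        maxHeapBytes = rt.maxMemory()          // upper bound the JVM will try to use for its heap
    )
}

fun main() {
    val s = snapshot()
    println("CPUs available: ${s.processors}")
    println("Max heap: ${s.maxHeapBytes / (1024 * 1024)} MiB")

    // Start a few threads; the OS scheduler decides which one gets CPU time and when,
    // so the order of the printed lines may differ from run to run.
    val threads = (1..4).map { id ->
        Thread { println("worker $id running on ${Thread.currentThread().name}") }
    }
    threads.forEach { it.start() }
    threads.forEach { it.join() } // wait for all workers to finish
}
```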

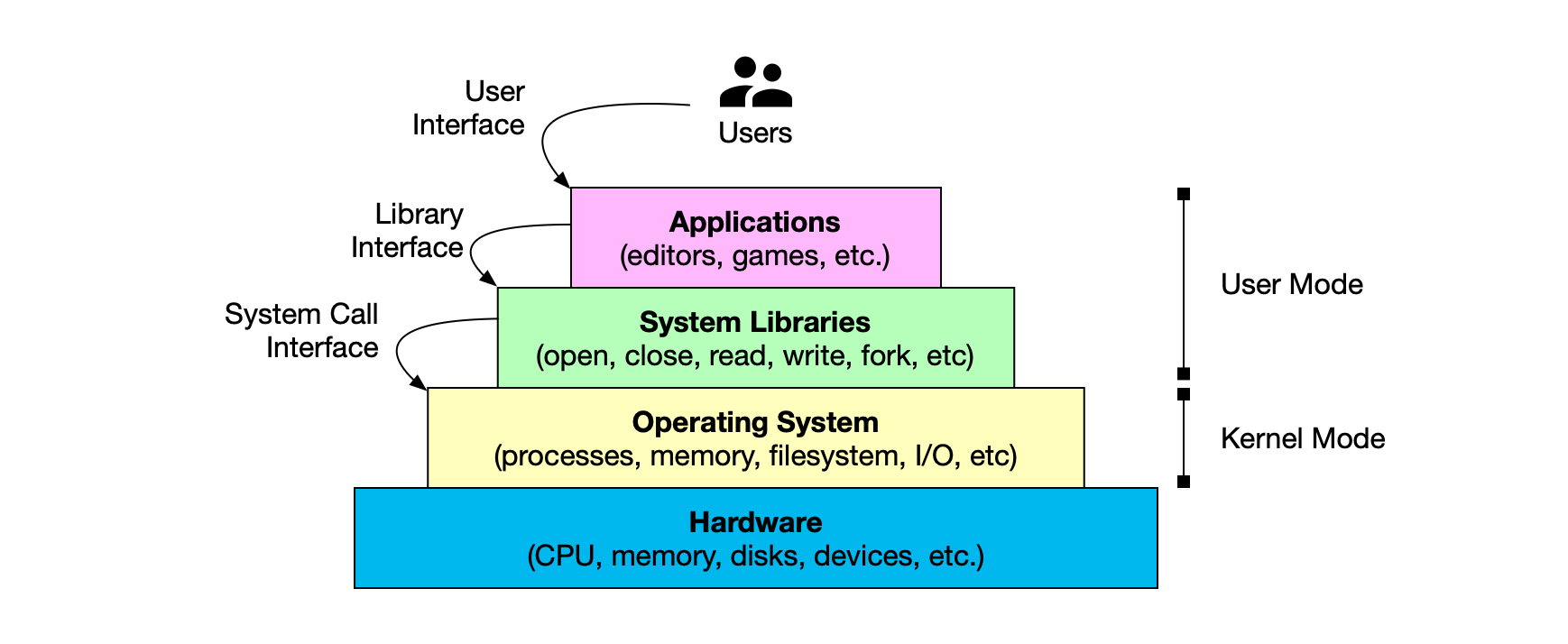

The diagram above illustrates the relationship between the operating system and the applications running on it.

Our application stack includes multiple layers. Each layer can only call down into the layer directly below it. e.g., applications do not have direct access to hardware. Instead, they must use system libraries to request hardware capabilities from the underlying operating system (which in turn manages the hardware).

Layers are further subdivided based on their level of privilege/access:

- User mode represents code that runs in the context of an application. This code does not have direct access to the hardware, and must use system libraries and APIs to interact with the hardware. Applications and many libraries only exist and execute at this level.

- Kernel mode represents private, privileged code that runs in the context of the operating system. This code has direct access to the hardware, and is responsible for managing the hardware resources. The OS kernel is the core of the operating system, and provides the fundamental services that applications rely on. Some system-level software can also execute at this level.

Notice that the kernel of the operating system runs in a special protected mode, different from user applications. Effectively, the core operating system processes are isolated from the user applications. This is a security feature which prevents user applications from directly accessing the hardware, and potentially causing damage to the system.
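The layering rule can be modelled as a toy in Kotlin. Treat this only as an analogy: `Disk`, `Kernel` and `TextStore` are hypothetical classes, and a real system enforces the user/kernel boundary with CPU privilege levels, not with object references. What the sketch does show is that each layer only calls the one directly below it, and that the lowest software layer validates every request before touching the “hardware”.

```kotlin
// "Hardware": a byte array standing in for a disk. Only the Kernel holds a reference to it.
class Disk(val size: Int) {
    private val blocks = ByteArray(size)
    fun writeAt(offset: Int, data: ByteArray) {
        data.copyInto(blocks, offset)
    }
    fun readAt(offset: Int, length: Int): ByteArray = blocks.copyOfRange(offset, offset + length)
}

// "Kernel mode": the only layer allowed to touch the Disk; it checks every request.
class Kernel(private val disk: Disk) {
    private fun inRange(offset: Int, length: Int) =
        offset >= 0 && length >= 0 && offset + length <= disk.size

    fun sysWrite(offset: Int, data: ByteArray): Boolean {
        if (!inRange(offset, data.size)) return false // reject out-of-range requests
        disk.writeAt(offset, data)
        return true
    }

    fun sysRead(offset: Int, length: Int): ByteArray? =
        if (inRange(offset, length)) disk.readAt(offset, length) else null
}

// "System library" (user mode): a friendlier, text-based API built on the kernel calls.
class TextStore(private val kernel: Kernel) {
    fun save(offset: Int, text: String): Boolean = kernel.sysWrite(offset, text.encodeToByteArray())
    fun load(offset: Int, length: Int): String? = kernel.sysRead(offset, length)?.decodeToString()
}

// "Application": only ever talks to TextStore.
fun main() {
    val store = TextStore(Kernel(Disk(64)))
    println(store.save(0, "hello"))  // true
    println(store.load(0, 5))        // hello
    println(store.save(62, "hello")) // false: would run past the end of the disk
}
```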

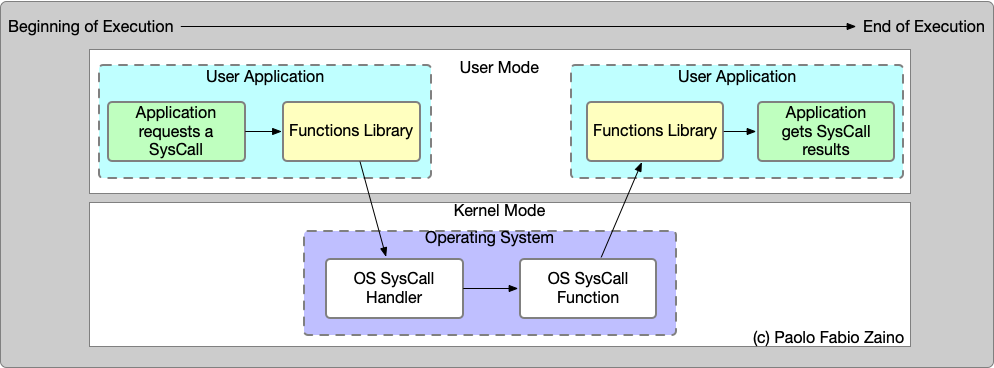

Programming languages rely heavily on the underlying operating system for most of their capabilities. In many ways, you can think of them as a thin layer of abstraction over the operating system. For example, when you write a file to disk, you are actually making a system call to the operating system to write that file. When you read from the network, you are making a system call to the operating system to read that data.

A system call is simply a function call through a specific API that the OS exposes. The actual interface will differ based on the operating system, but it can typically be used to perform tasks like reading from a file, writing to the console, or creating a network connection. OS vendors each provide their own “native” system call interface. You can think of it as an abstraction layer, which also enforces a security model and other concerns.
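From the application’s side, system calls are usually invisible. The sketch below (Kotlin/JVM assumed; `roundTrip` is a hypothetical helper) writes and reads a temporary file using only the standard library. The comments describe what typically happens underneath: on Linux or macOS the JVM ends up issuing calls such as `open`, `write`, `read` and `close`, while on Windows it goes through the Win32 file APIs. The exact sequence depends on the JVM implementation.

```kotlin
import java.io.File

// Writes text to a temporary file and reads it back, then deletes the file.
fun roundTrip(text: String): String {
    // The OS chooses the temp directory and creates the file for us.
    val file = File.createTempFile("demo", ".txt")
    try {
        file.writeText(text)   // typically: open + write + close, issued by the JVM
        return file.readText() // typically: open + read + close
    } finally {
        file.delete()          // ask the OS to remove the file
    }
}

fun main() {
    println(roundTrip("Hello from user space"))
}
```

The same source compiles and runs on every supported OS, because the translation into each OS’s native calls happens inside the runtime, not in our code.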

Why is this layering important?

- Applications have limited ability to affect the underlying OS, which makes the entire system more stable (and secure).

- We can abstract the hardware i.e. decouple our implementation from the exact hardware that we’re using so that our code is more portable.

Programming languages

Your choice of programming language determines the capabilities of your application. You also need to make sure that you pick a programming language that is suitable for the operating system you’re targeting.

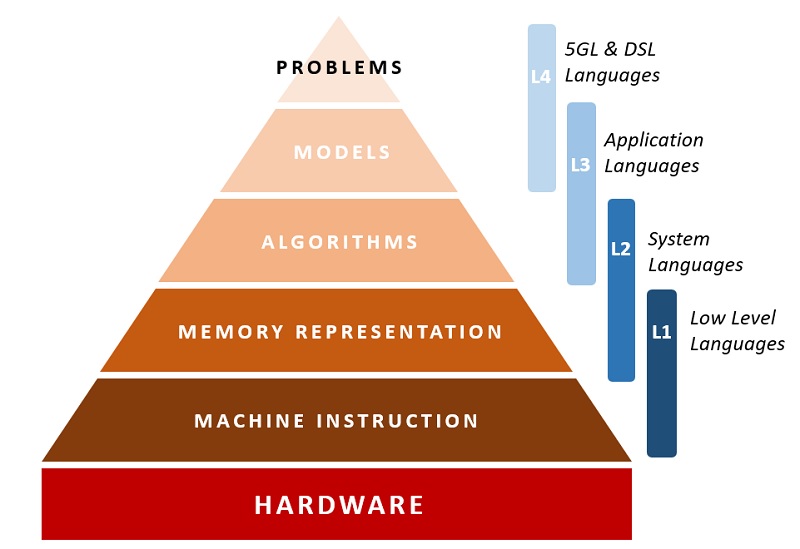

We can (simplistically) divide programming languages into two categories: low-level and high-level languages.

Low-level languages are suitable when you are concerned with the performance of your software, and when you need to control memory usage and other low-level resources. They are often most suitable for systems programming tasks, that are concerned with delivering code that runs as fast as possible, and uses as little memory as possible. Examples of systems languages include C, C++, and Rust.

Systems programming is often used for operating systems, device drivers, and game engines. Low-level languages are obviously tightly-coupled to the underlying OS.

High-level languages are suitable when you are concerned with the speed of development, and the robustness of your solution. Performance may still be important, but you are willing to trade off some performance relative to a low-level language, for these other concerns.

High-level languages are well-suited to applications programming, which can often make performance concessions for increased reliability, more expressive programming models, and other language features. In other words, applications tend to be less concerned with raw performance, and so we can afford to make tradeoffs like this.³

These languages are also less coupled to the underlying OS (at least in theory).

Examples of application languages include Swift, Kotlin, and Dart. Applications programming languages are often used for web applications, mobile and desktop applications. They may also be used when creating back-end services e.g. web servers.

Note that it is certainly possible to develop applications in low-level languages, and to develop systems software in high-level languages. However, the choice of language is often driven by the requirements of the software you are building.

Language tradeoffs

So what kinds of benefits do we get from high-level languages?

| Language Features | Systems | Application |
|---|---|---|
| Memory management | manual | garbage collection |
| Type system | static | static or dynamic |
| Runtime performance | fast | medium |
| Executables | small, fast | large, slow |
| Portability | low | high |

Modern application languages like Swift, Kotlin and Dart share similar features that are helpful when building applications:

Functional programming features. Modern languages support functional features like lambdas, higher-order functions, and immutability. These features can make your code more expressive, and easier to understand. These are in addition to the traditional object-oriented features that you would expect from a modern language.
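A short illustration of these features in Kotlin (the `Task` type and `totalMinutes` function are hypothetical): a higher-order function that takes a lambda, chained `filter`/`map` calls, and `copy` on a data class to produce a modified value without mutating the original.

```kotlin
data class Task(val title: String, val done: Boolean, val minutes: Int)

// A higher-order function: the caller passes in the predicate as a lambda.
fun totalMinutes(tasks: List<Task>, include: (Task) -> Boolean): Int =
    tasks.filter(include).sumOf { it.minutes }

fun main() {
    // listOf returns a read-only List; `val` means the reference can't be reassigned.
    val tasks = listOf(
        Task("Write report", done = false, minutes = 90),
        Task("Email team", done = true, minutes = 15),
        Task("Review PR", done = false, minutes = 30),
    )

    val remaining = totalMinutes(tasks) { !it.done }        // lambda passed as the last argument
    val titles = tasks.filter { !it.done }.map { it.title } // chained higher-order functions

    // "Modifying" a data class creates a new copy; tasks[0] itself is unchanged.
    val finished = tasks[0].copy(done = true)

    println(remaining) // 120
    println(titles)    // [Write report, Review PR]
    println(finished)  // Task(title=Write report, done=true, minutes=90)
}
```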

Memory management. Garbage collection is common with application languages, where you want to optimize for development time and application stability over performance. Garbage collection is simply a paradigm where the language itself handles memory allocation and deallocation by periodically freeing unused memory at runtime. GC results in a system that is significantly more stable (since you’re not manually allocating/deallocating with all of the risks that entails!) but incurs a runtime performance cost. The majority of the time, the performance difference is negligible.⁴
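The sketch below (Kotlin/JVM assumed; `averageWordLength` is a hypothetical example) allocates many short-lived lists and strings but never frees anything explicitly. Once a loop iteration ends, its temporaries are unreachable, and the JVM’s garbage collector reclaims them at a time of its own choosing. In C you would have to manage comparable buffers yourself with `malloc`/`free`.

```kotlin
// Average length of the words across all lines.
fun averageWordLength(lines: List<String>): Double {
    var totalChars = 0
    var totalWords = 0
    for (line in lines) {
        // Each iteration allocates a new List<String> and new String objects.
        // After the iteration ends nothing references them, so they become
        // eligible for garbage collection; there is no free() or delete.
        val words = line.split(" ").filter { it.isNotEmpty() }
        totalWords += words.size
        totalChars += words.sumOf { it.length }
    }
    return if (totalWords == 0) 0.0 else totalChars.toDouble() / totalWords
}

fun main() {
    val lines = List(100_000) { "the quick brown fox" } // many short-lived allocations follow
    println(averageWordLength(lines))                   // 4.0
}
```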

Null safety. Swift, Kotlin and Dart have null safety built in, which helps prevent null pointer exceptions. In Kotlin, for example, a variable of type `String` can never hold null; only a `String?` can, and the compiler rejects code that dereferences a `String?` without first checking it.
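Here is what that looks like in practice (the function names are hypothetical). Because `name` has type `String?`, the compiler rejects `name.length` until you have checked for null; inside an `if (name != null)` branch it is smart-cast to `String`, and the safe-call (`?.`) and Elvis (`?:`) operators give a concise fallback.

```kotlin
// `name` may be null, so we must handle that case before using it.
fun greeting(name: String?): String =
    if (name != null) {
        "Hello, $name (${name.length} letters)" // smart cast: name is a String here
    } else {
        "Hello, stranger"
    }

// Safe call returns null if name is null (or empty); Elvis supplies a default.
fun initialOrDash(name: String?): Char = name?.firstOrNull() ?: '-'

fun main() {
    println(greeting("Ada"))    // Hello, Ada (3 letters)
    println(greeting(null))     // Hello, stranger
    println(initialOrDash(null)) // -
}
```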

Runtime performance. Application languages tend to be slower than systems languages, given the overhead of GC and similar language features. Practically, the difference is often negligible at least when working with application software.

Portability. Systems languages are often “close to the metal”. However, being closely tied to the underlying hardware also makes it challenging to move to a different platform. Application languages can be designed to use a common runtime library across platforms, making it simpler to achieve OS portability. Note that this is not always the case; Swift, for example, is used primarily on Apple platforms, and key frameworks such as SwiftUI are only available there.
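Kotlin on the JVM is an example of the shared-runtime approach. In the sketch below (the `settingsPath` helper and the `.config` location are hypothetical), the same compiled code runs on Linux, macOS or Windows; the runtime library supplies the correct path separator and reports which OS it is running on.

```kotlin
import java.nio.file.Path
import java.nio.file.Paths

// Builds a (hypothetical) settings file location without hard-coding '/' or '\'.
fun settingsPath(home: String, appName: String): Path =
    Paths.get(home, ".config", appName, "settings.json")

fun main() {
    val os = System.getProperty("os.name")     // e.g., "Linux", "Mac OS X", "Windows 11"
    val home = System.getProperty("user.home") // the current user's home directory
    println("Running on $os")
    // Printed with '/' separators on Linux/macOS and '\' on Windows.
    println(settingsPath(home, "demo"))
}
```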

Using libraries

You could (in theory) write software that directly interacts with the operating system using system calls.⁵ However, this is not practical for many situations, since you are then tied to that particular operating system (e.g., Windows 10). Instead, we use system libraries, which sit a level of abstraction above system calls and provide a higher-level interface to your programming language. This is the level at which most applications interact with the operating system.

The C++ standard library is an example of a system library which provides a set of C++ style functions that work on all supported operating systems. When you use std::cout to write to the console, for example, you are actually calling into the operating system using system calls to interact with the hardware. Your code compiles and runs across different operating systems because the required functionality has been implemented for that particular OS, and exposed through that standard library interface.

C++ is a special case, where industry leaders standardized this behaviour early on (making C/C++ something of a lingua franca). However, there is a lot of other OS functionality that differs across operating systems, and doesn’t have a standard interface. For example, there is no standard library for creating a window on a desktop, or for accessing the camera on a phone. This poses challenges.

Vendors and OSS communities also usually provide user libraries, which are simply libraries that run in user-space and can be linked into your application to provide high-level functionality. Accessing a database for instance isn’t handled by the operating system directly, but by a user library that abstracts away the details of the database and provides a simple interface for your application to use.

| Library type | Example | Description |
|---|---|---|
| Programming language library | stdio | Provides low-level functionality e.g., file I/O. Abstraction over OS syscalls. |
| OS vendor library | Win32 | Provides access to specific OS functionality e.g., create a window, open a network connection. |
| Third party library | OpenGL | Provides extra capabilities that sit “on top of” the systems libraries e.g., high performance 3D graphics, database connectivity. |

We’ll make extensive use of libraries in this course! We’ll use system libraries to interact with the operating system, and user libraries to provide higher-level abstractions that make it easier to build applications.

Technology stacks

A software stack is the set of related technologies that you use to develop and deliver software. e.g., LAMP for web applications (Linux, Apache, MySQL and PHP).

Given what we now know about programming languages and libraries: which software stack is “the best one” for building applications?

Is there a “best one”?

Let’s consider which technologies are commonly used:

| Platform | OS | Main Languages/Libraries |
|---|---|---|
| Desktop | Windows | C#, .NET, UWP |
| | macOS | Swift, Objective-C, Cocoa |
| | Linux | C, C++, GTK |
| Phone | Android | Java, Kotlin, Android SDK |
| | iOS | Swift, Objective-C, Cocoa |
| Tablet | Kindle | Java, Kotlin, Android SDK |
| | iPad | Swift, Objective-C, Cocoa |
| Web | n/a | HTML, CSS, JavaScript, TS; frontend: React, Angular, Vue; backend: Node.js, Django, Flask |

Why are there so many different technology stacks!? Why isn’t there one language (and set of libraries) that works everywhere?!

In a word, competition.

Development tools, including programming languages, are typically produced by operating system vendors (Microsoft for Windows, Apple for macOS and iOS, Google for Android). Each vendor focuses on producing development tools that make it easy to develop for its own platforms. Apple wants consumers to run macOS and iOS, so they developed Swift and the supporting frameworks to make it easy for you to build applications on their platforms. Microsoft spent billions developing C# to make Windows development easier; it would be against their interests to support it equally well on macOS.

As a result, each platform has its own specific application programming languages, libraries, and tools. If you want to build iOS applications, your first choice of technologies should be the Apple-produced toolchain i.e., the Swift programming language, and native iOS libraries. Similarly, for Android, you should be using Kotlin and the native Android libraries.

Why are we so focused on building native applications? Why not just build web applications instead?

Web technologies have evolved drastically over the past 20 years, and it’s possible to build practically anything as a web application (especially with modern JS, React, Svelte, etc.). There are a large number of fantastic applications written for the web e.g., Gmail, Netflix, D&D Beyond. For applications where you are mainly consuming data that already resides on a remote site, web applications make sense.

However, people often choose to use native applications over web applications. Why?

- Native applications tend to be faster. We don’t incur the cost of fetching data (code, images, resources) over a network just to display the starting screen.

- Native applications can do more. We aren’t restricted by a browser’s security model. e.g., I have a file manager that I use a lot. I can’t imagine how to build this as a functional web application.

- Native applications can work offline. Web applications are fundamentally tethered to a web server, and services in the cloud. What happens when your internet is spotty? What if eduroam isn’t working very well that day? Often the answer is that your application just doesn’t work.

- Native applications can customize interaction. How do you create a second window on a web site? How do you do popups? Keyboard shortcuts? You have limited control over interaction in a browser environment.

Here’s a quick list of my most commonly used applications, which includes a mix of native and web apps. I don’t think I would choose to replace the native applications with web applications in most cases.

Native

- Visual Studio Code, IntelliJ IDEA.

- Kotlin compiler, C++ compiler, Python interpreter.

- MS PowerPoint, MS Word, MS Excel.

- Forklift file browser.

Web applications

- Banking application

- Healthcare portal (for submitting claims)

- Configuration screen for my home RAID server

Web applications disguised as native applications, which I wish were fully native

- MS Teams

- Discord

- Slack

What do we do?

Choosing technologies is complicated! In our case, we have the luxury of picking something that is interesting (as opposed to “something that the team knows”, or “what your manager tells you to use”). We’ll stick with native applications, which provide the most flexibility.

We’ll start with a platform, and then narrow down from there:

- Pick a platform: Android (mobile).

- Pick a programming language: Kotlin (high-level, modern).

- Sprinkle in libraries to cover expected functionality:

  - graphical user interfaces: Compose

  - networking: Ktor

  - databases, web services: specific to our cloud provider.

We’ll explore all of this as we progress through the course.

Last word

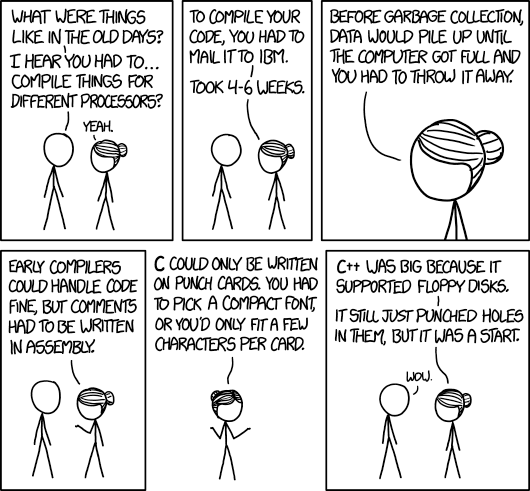

https://imgs.xkcd.com/comics/old_days.png

Notes

1. Programming languages go up and down in popularity, but Java has remained successful over time, usually sitting in the top three since it was first introduced. This is mainly due to its success as a very stable back-end programming language that runs on practically any platform. It was also the main Android language for a long time, which certainly didn’t hurt its ranking.

2. Does this seem ridiculous? It’s not really. We all routinely read or delete email on our phones, and expect our notebooks to reflect that change immediately.

3. Note that this is not always the case! Computer games, for example, are applications that are also expected to be highly performant; you are not allowed to compromise on features OR performance. This is also why it can take highly skilled game developers 5+ years to develop a title. However, the rest of us rarely have that much time or budget to work with.

4. Kotlin GC runs in less than 1/60th of a second, and only when necessary. Dart GC runs in less than 1/100th of a second. Users certainly don’t notice this.

5. My first “real” Windows program was created in C, making system calls to the Win32 C-style API to create and manipulate windows. It was incredibly painful, and I don’t recommend it to anyone.