CS 346 Winter 2025

The Winter 2025 term is complete. Thanks to everyone that took the course!

This course is not offered in Spring terms, but will return in Fall 2025.

Welcome to the course!

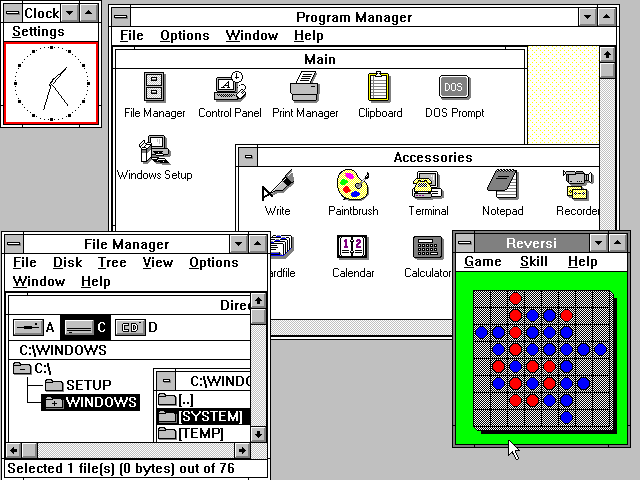

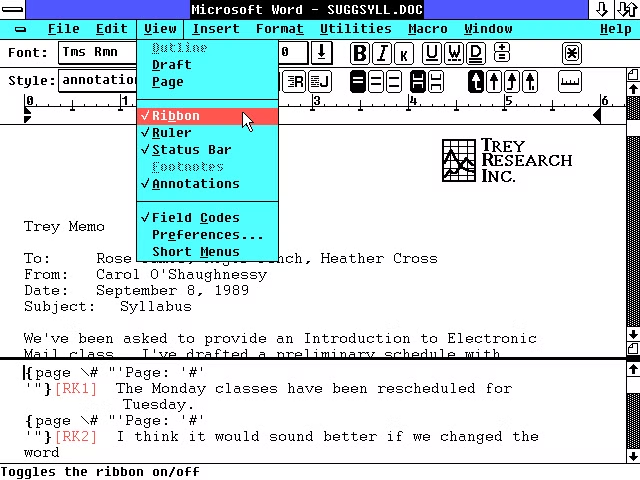

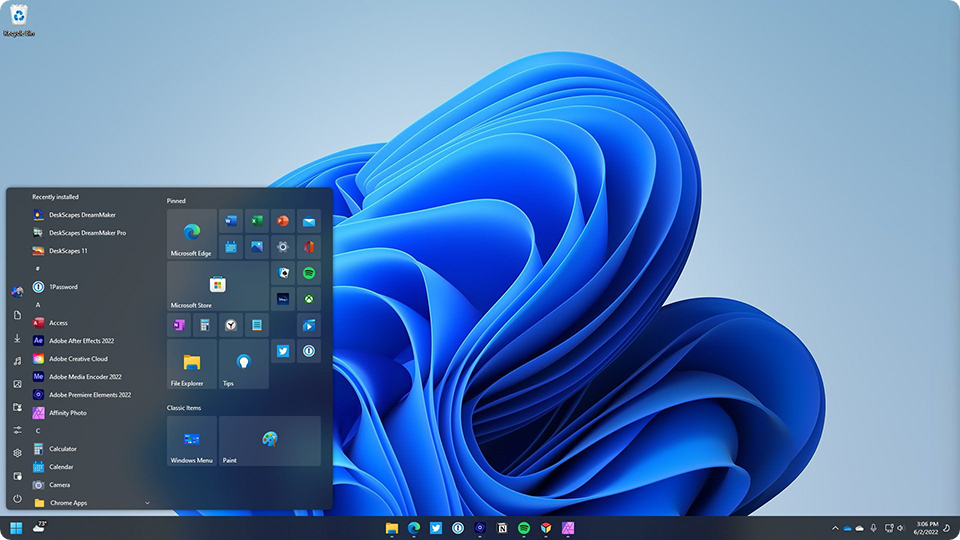

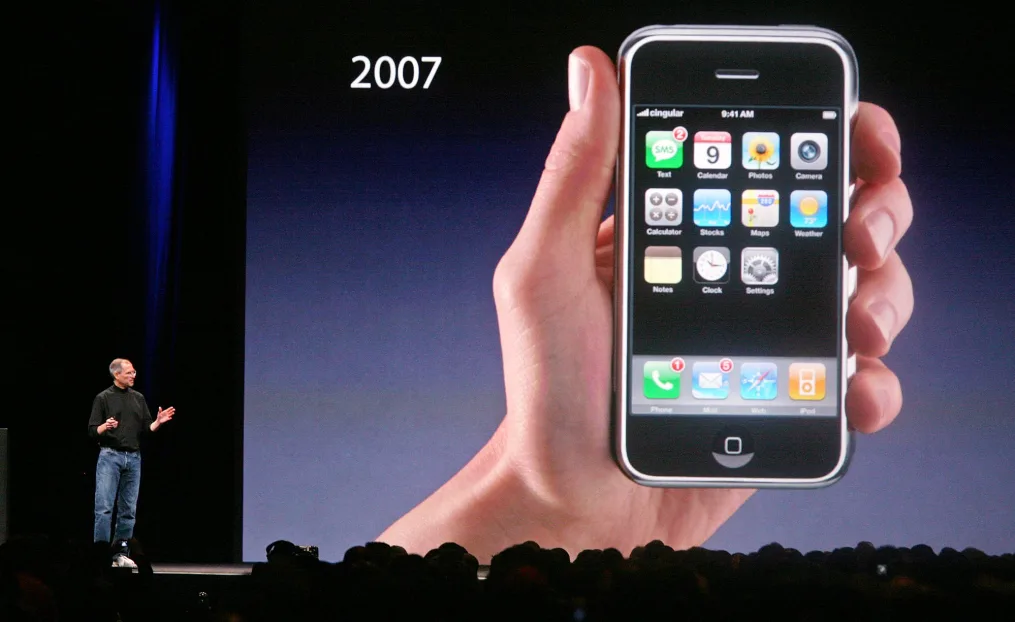

CS 346 is a course about designing and building software.

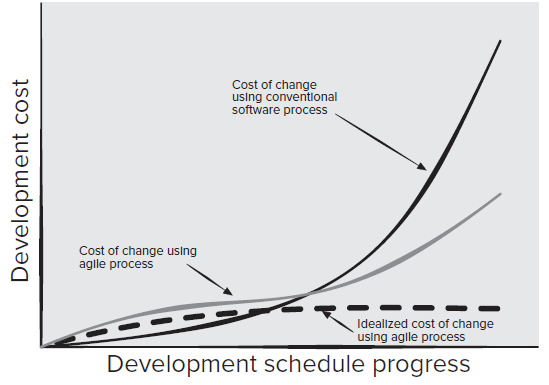

Modern software is often too complex for a single person to develop on their own. By working together, you and your project team will use best-practices to design and build a commercial-quality, robust, full-featured application, using a modern technology stack. As much as possible, we aim to explore modern and effective development techniques.

If you’re taking this course, all the information that you need is available on this website.

If you’re unsure where to start, try these links first:

- Class schedule describes the high-level course structure, including all course deadlines.

- Lecture plans details each week’s agenda, with links to lecture slides.

- Course project describes the team project and how to get started.

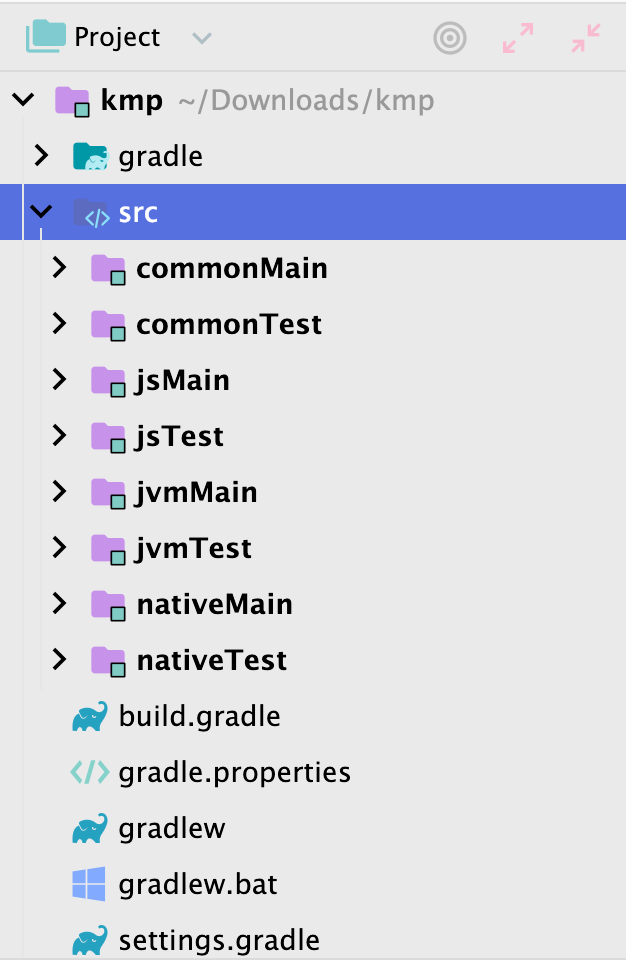

If you need to reach course staff, including your TA, their contact information is here. You are also welcome to contact the instructor or ISC directly with questions.

Have a great term,

![]()

Description

Description

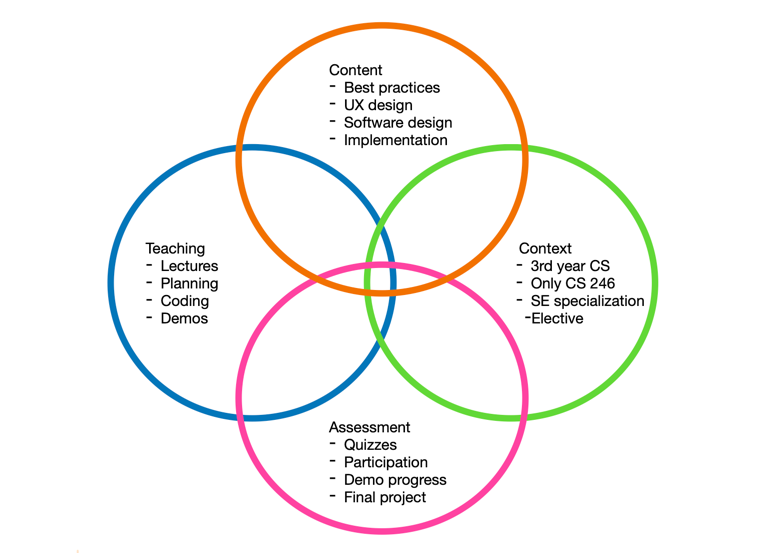

Introduction to full-stack application design and development. Students will work in project teams to design and build complete, working applications and services using standard tools. Topics include best-practices in design, development, testing, and deployment.

Units

0.50

This is third-year course, intended to be taken in 2B or 3A.

It’s a direct successor to CS 246 Object-Oriented Programming, and an informal lead-up to CS 446: Software Design and Architectures. It is one of the courses that can be applied towards the Software Engineering specialization.

Prerequisites

This course is restricted to Computer Science students.

Additionally, you must have successfully completed CS 246 prior to taking this course. From that course, you should be able to:

- Design, code and debug small C++ programs using standard tools. e.g. GCC on Windows, macOS or Unix.

- Write effective unit tests for these programs. e.g. basic I/O tests; checking for a range of valid and invalid conditions.

- Demonstrate programming proficiency in C++, which includes:

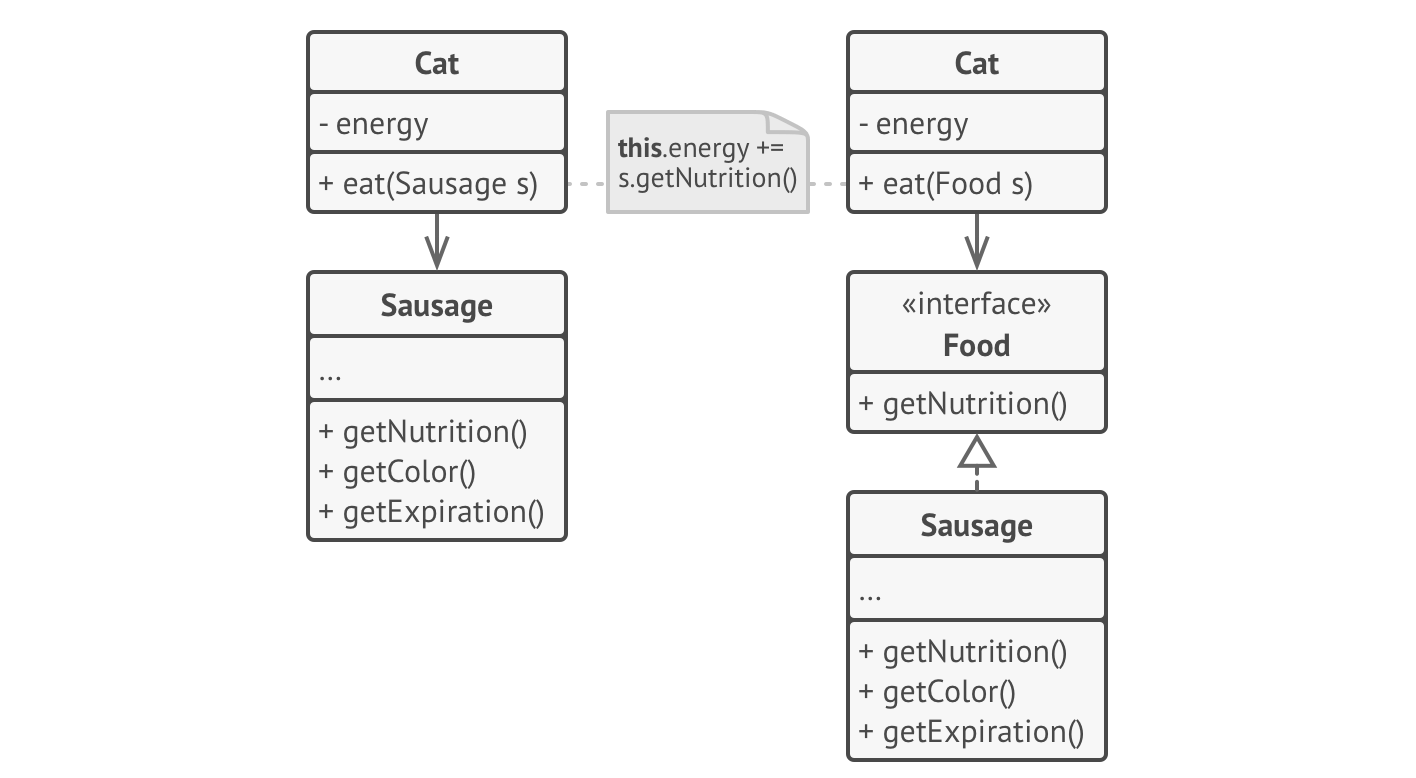

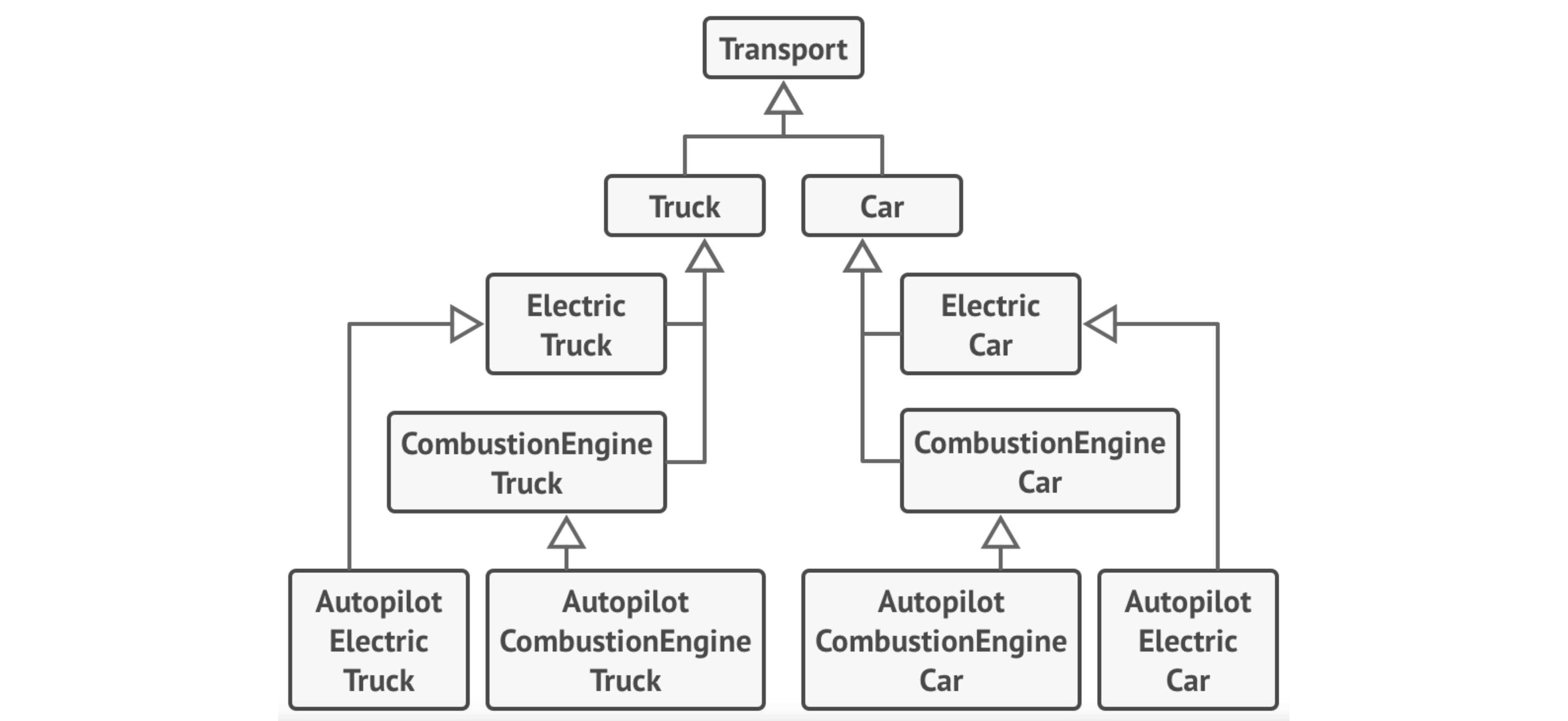

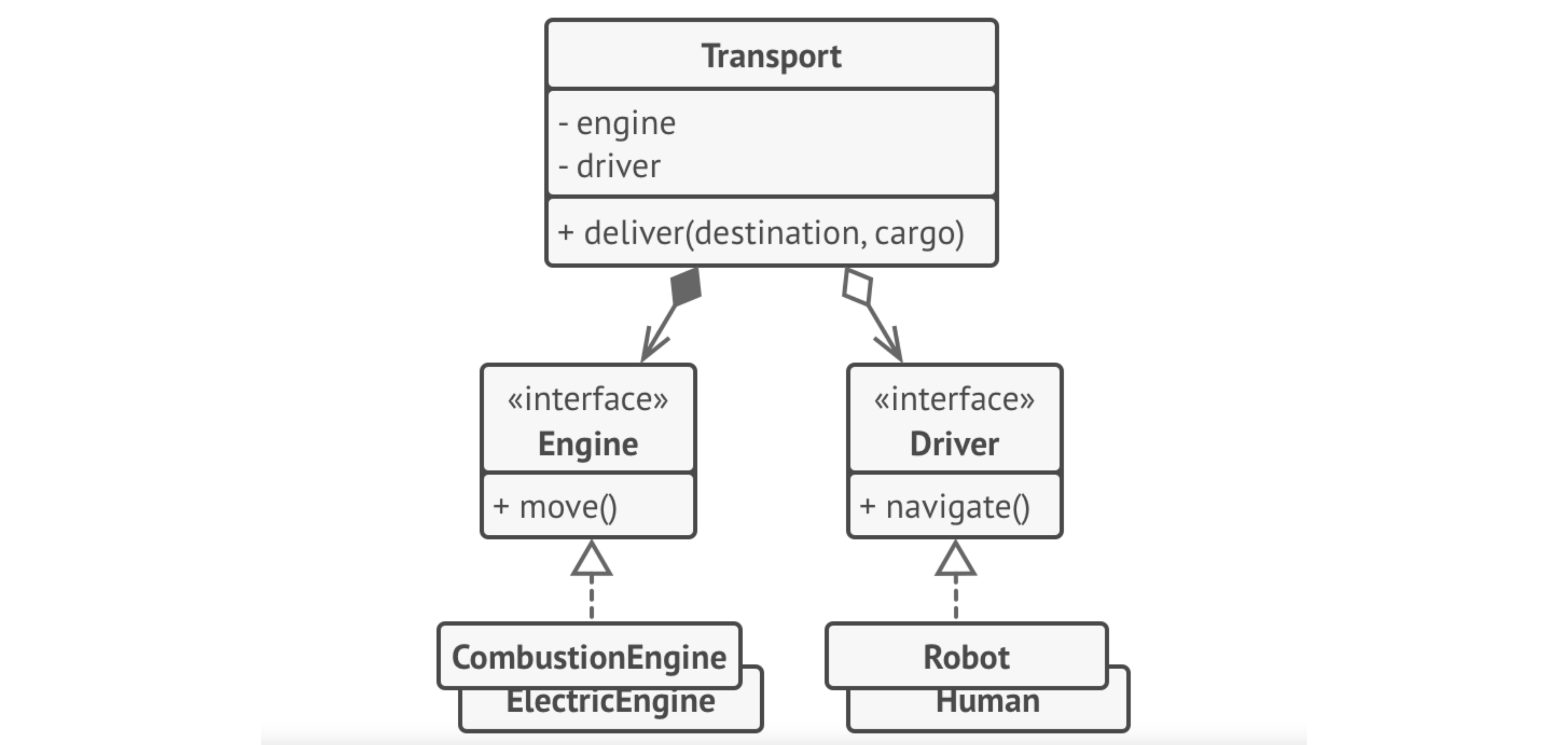

- understanding of fundamental OO concepts. e.g. abstraction, encapsulation

- knowing how to use classes, objects, method overloading, and single inheritance; polymorphism

- understanding how to use assertions, and

- knowing how to manage exceptions.

Learning Objectives

This course includes a mix of lectures, demos and project activities. The course project is a significant element of the course.

On successful completion of the course, students will be able to:

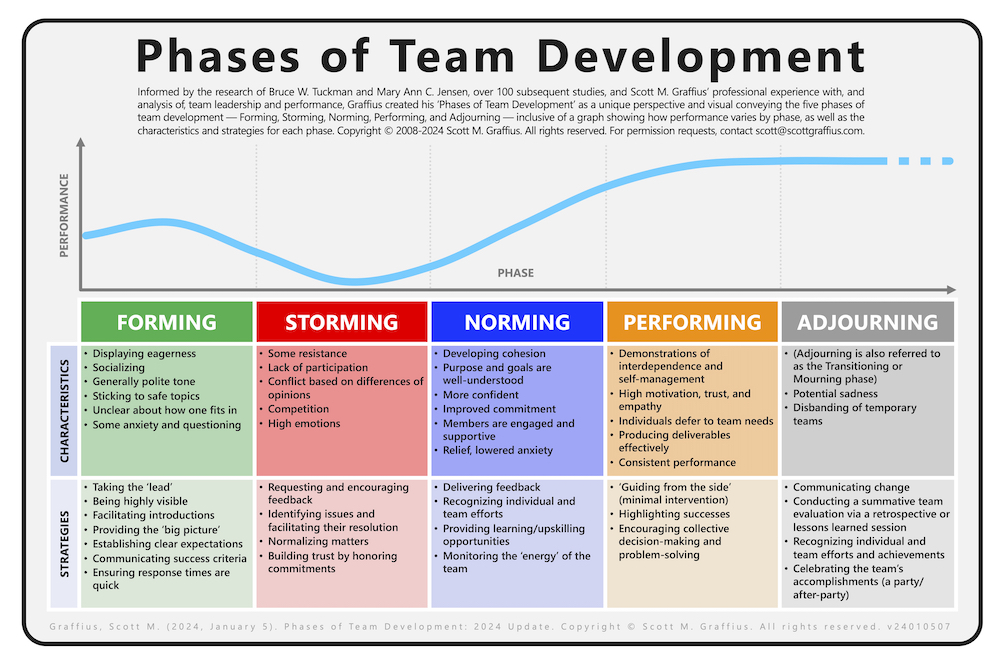

- Work effectively as a member of a software development team.

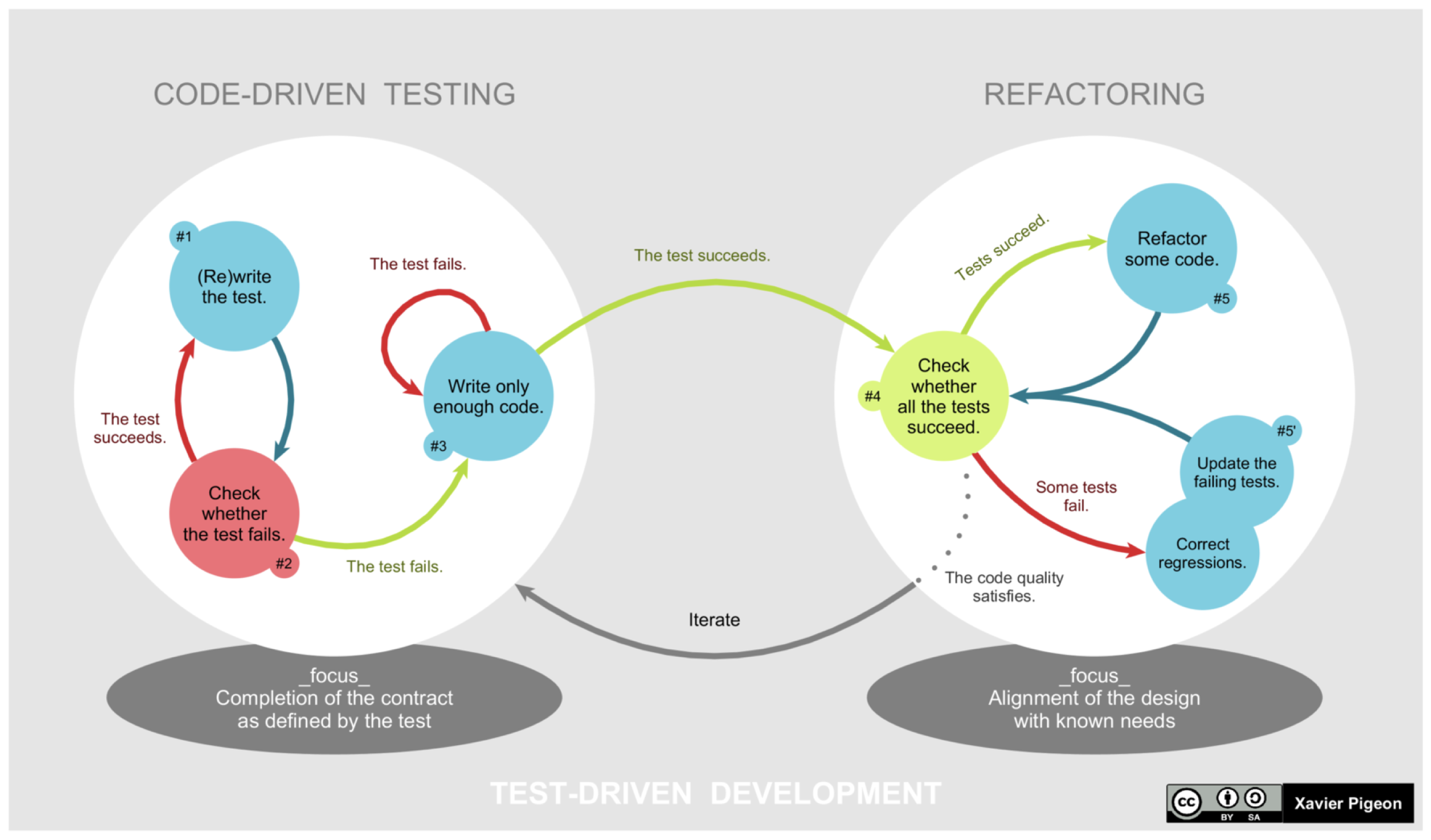

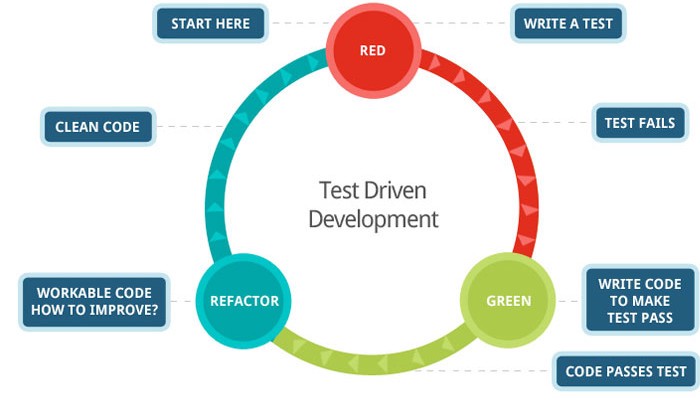

- Use an iterative process to manage the design, development and testing of software projects.

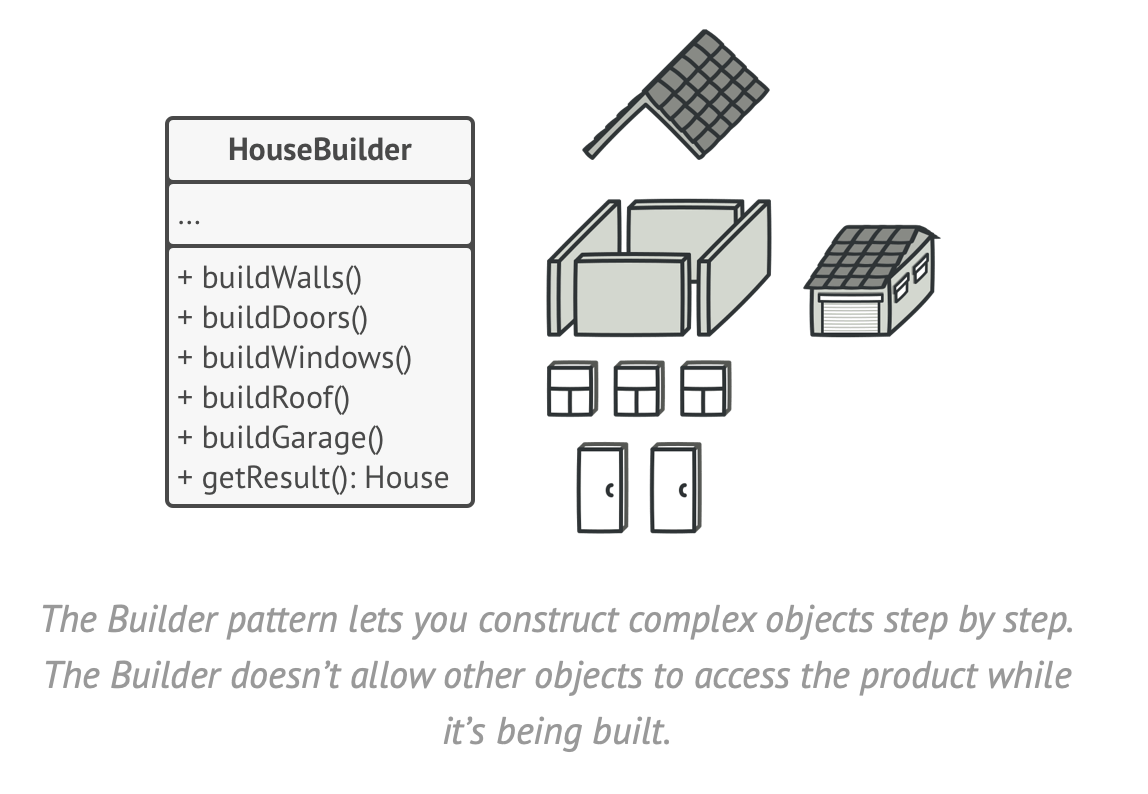

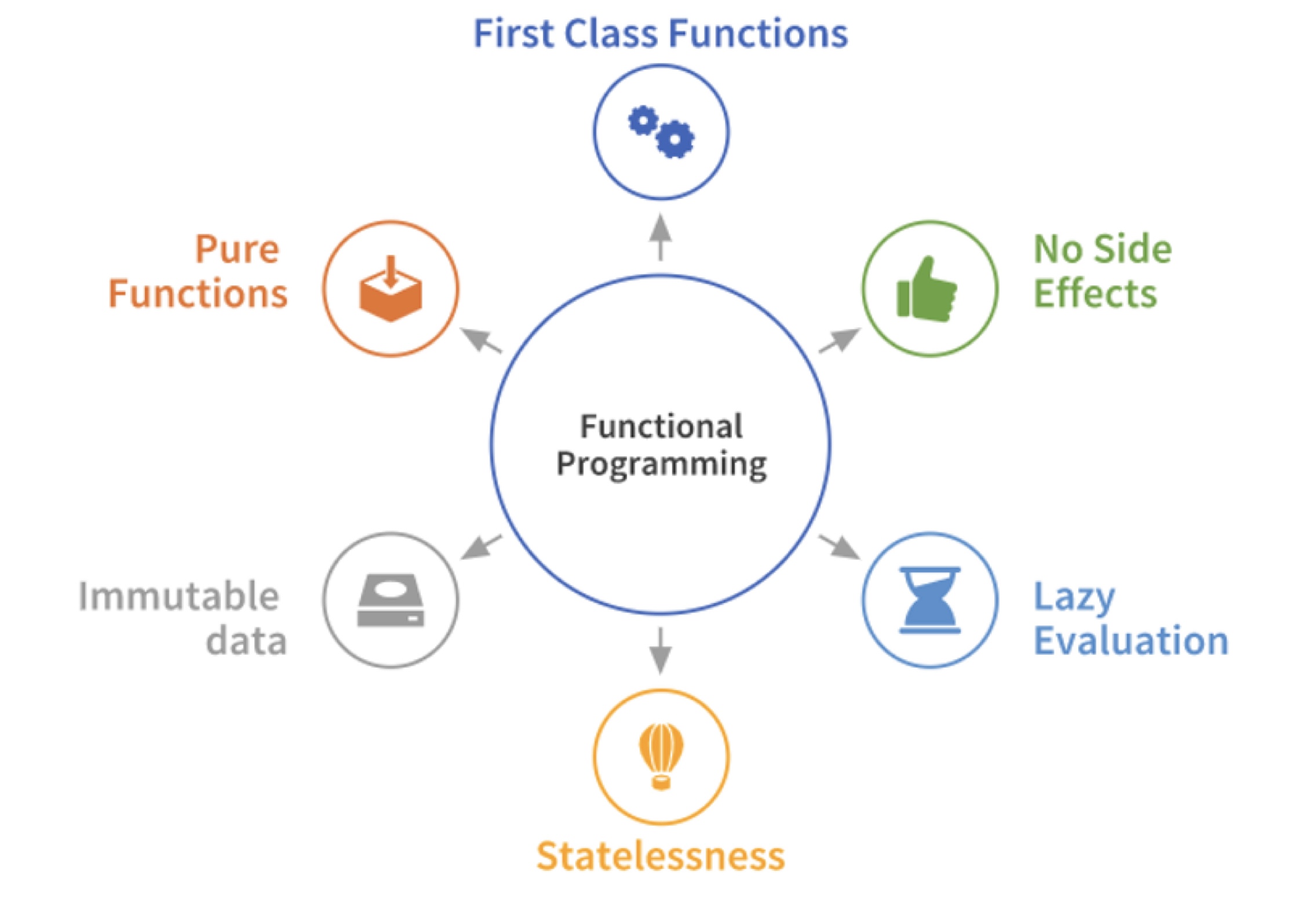

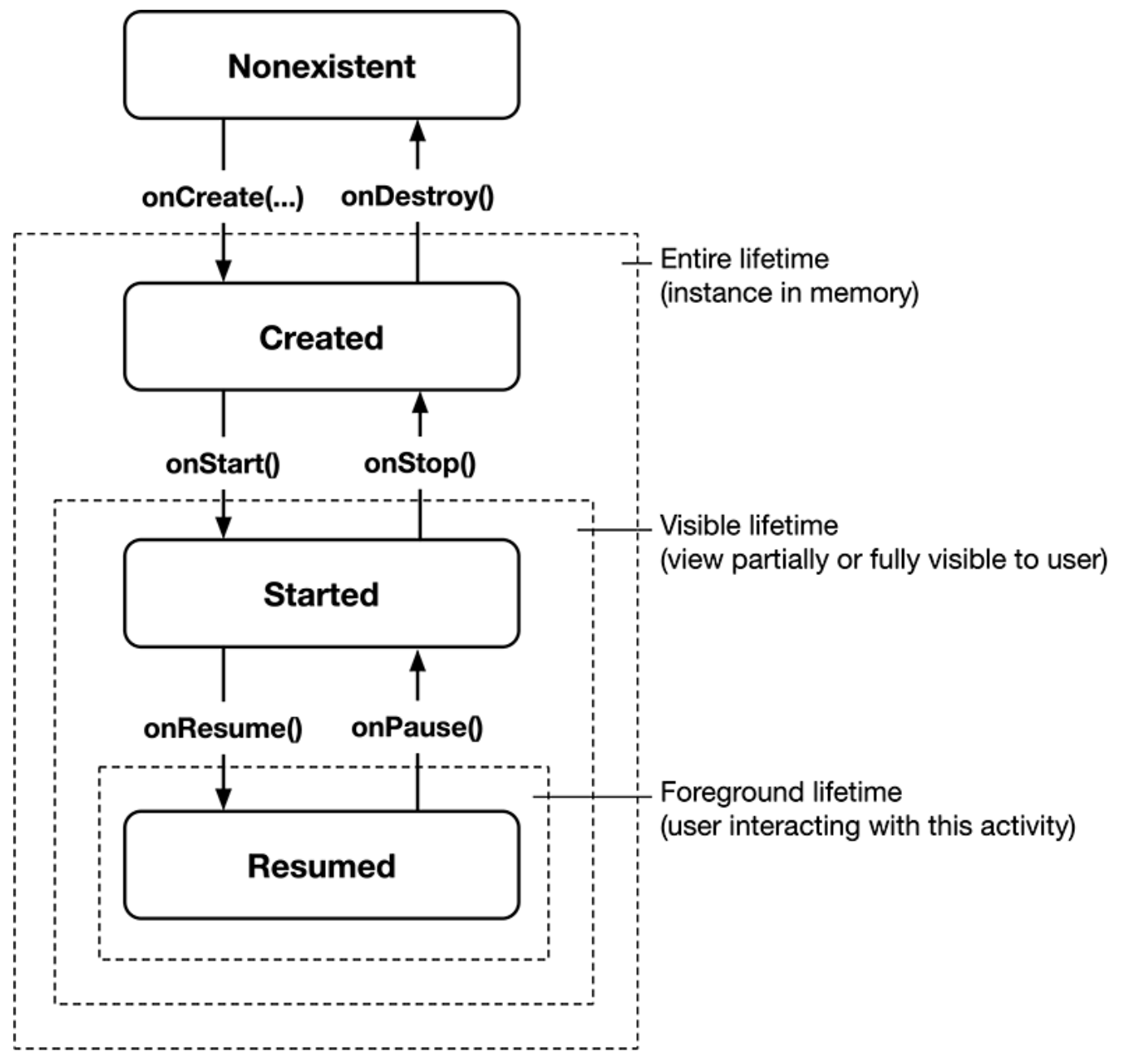

- Design and develop different styles of application software in Kotlin, with appropriate architectural choices.

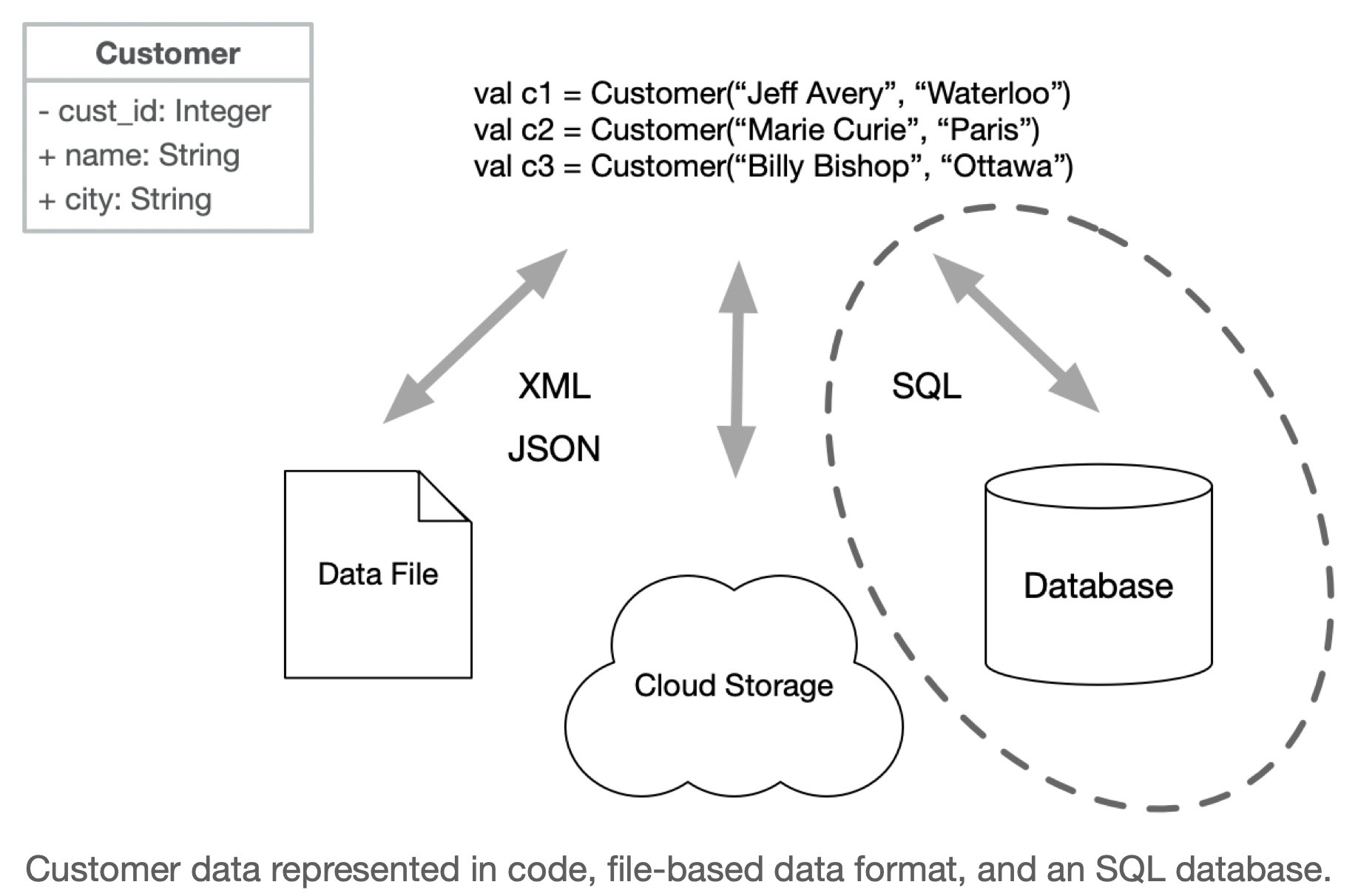

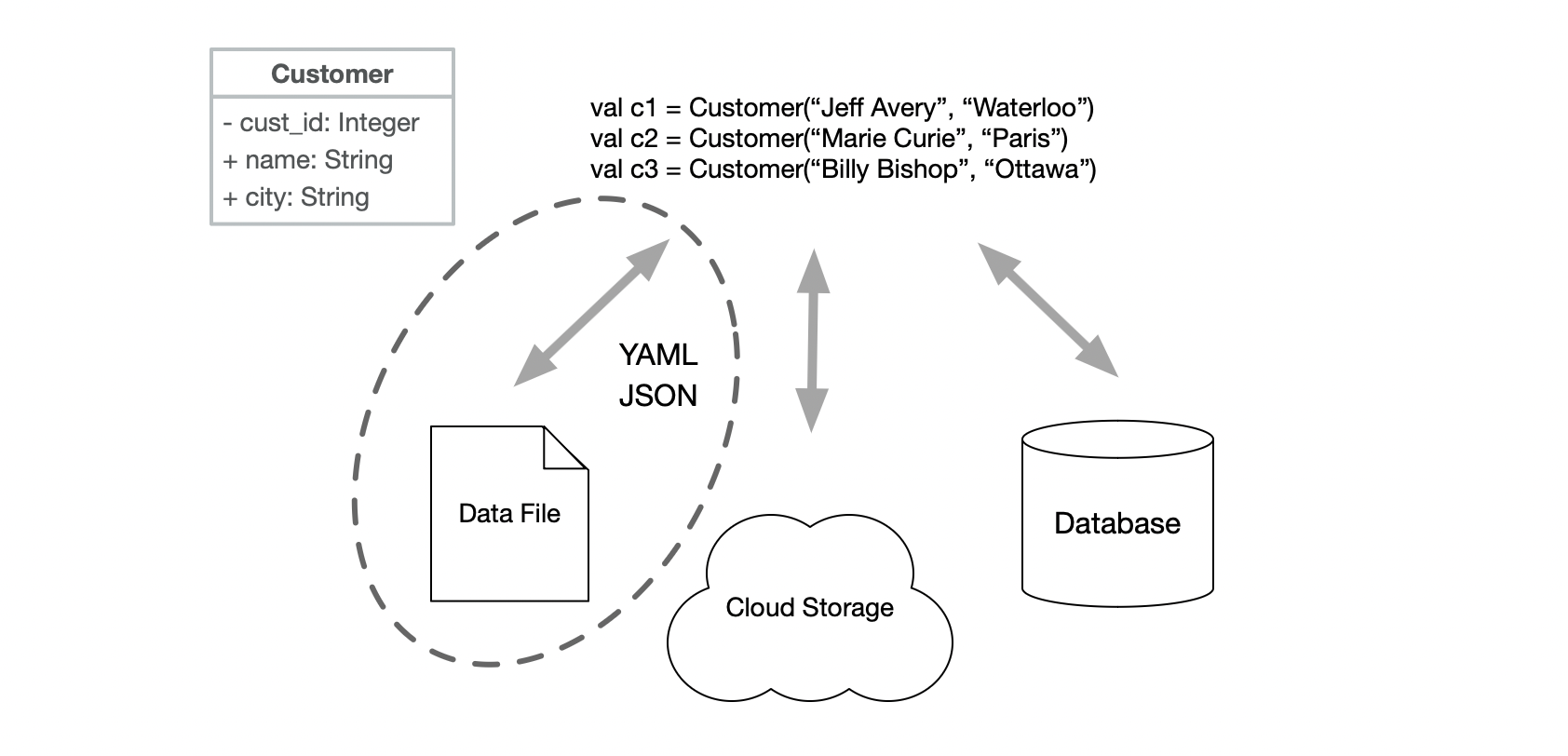

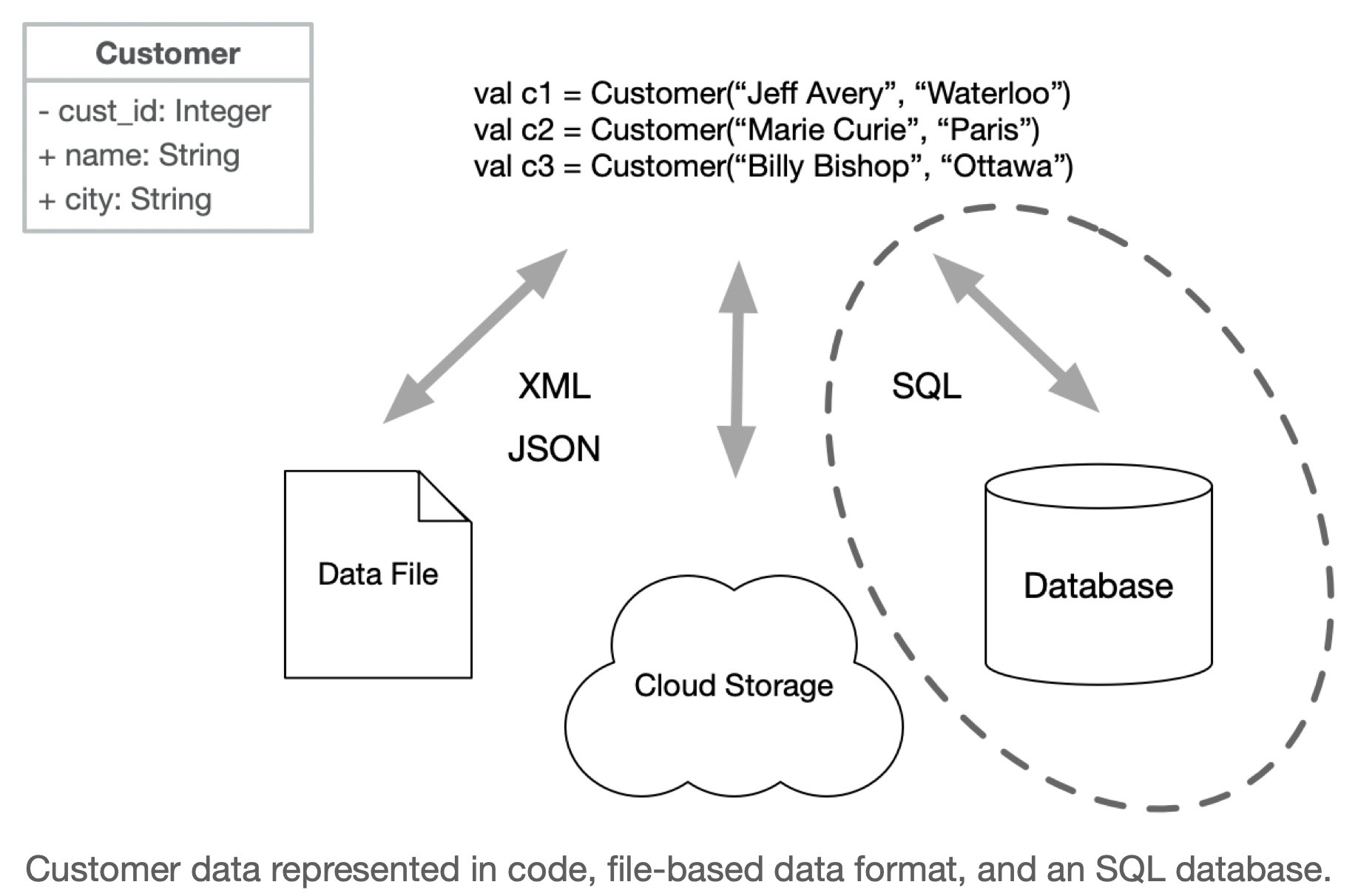

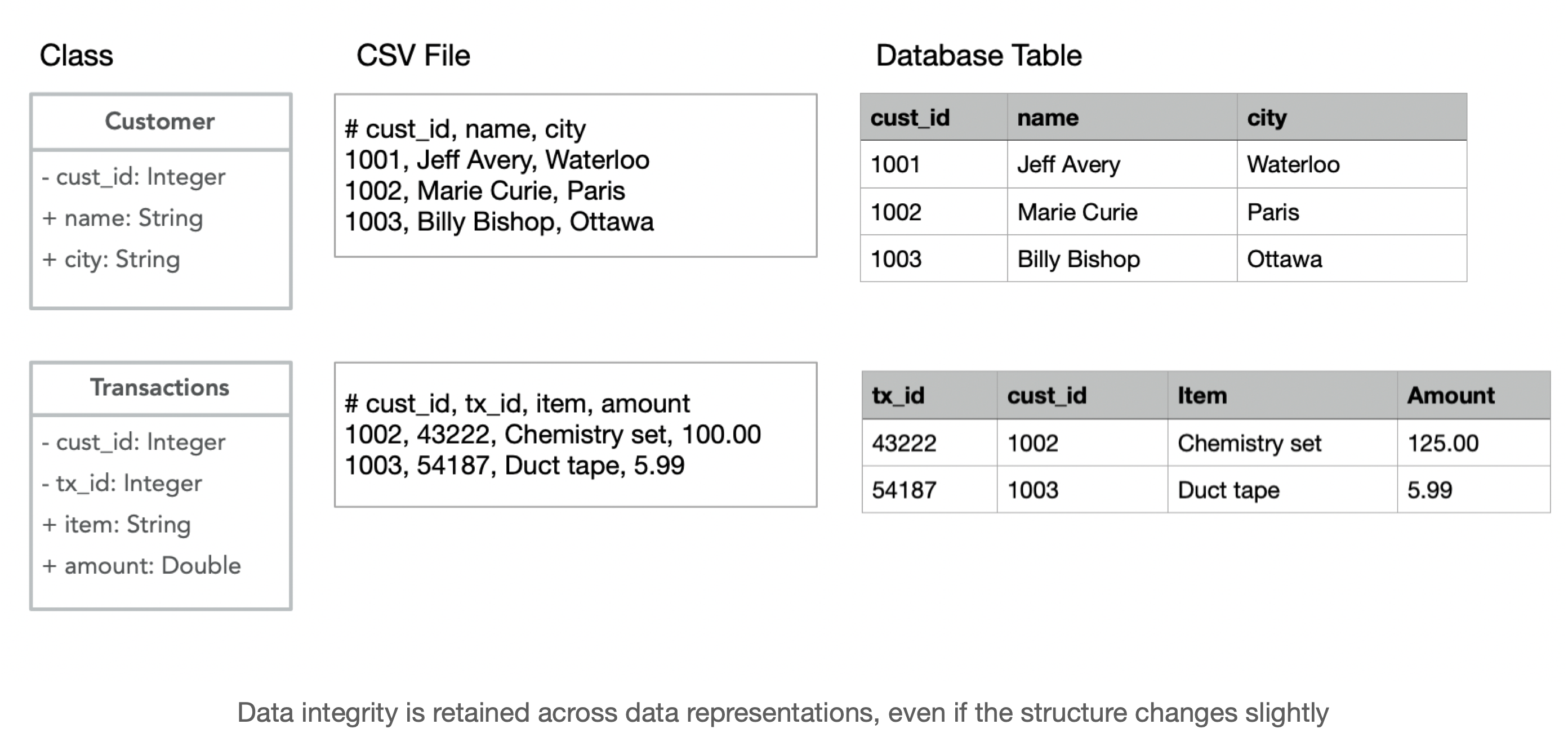

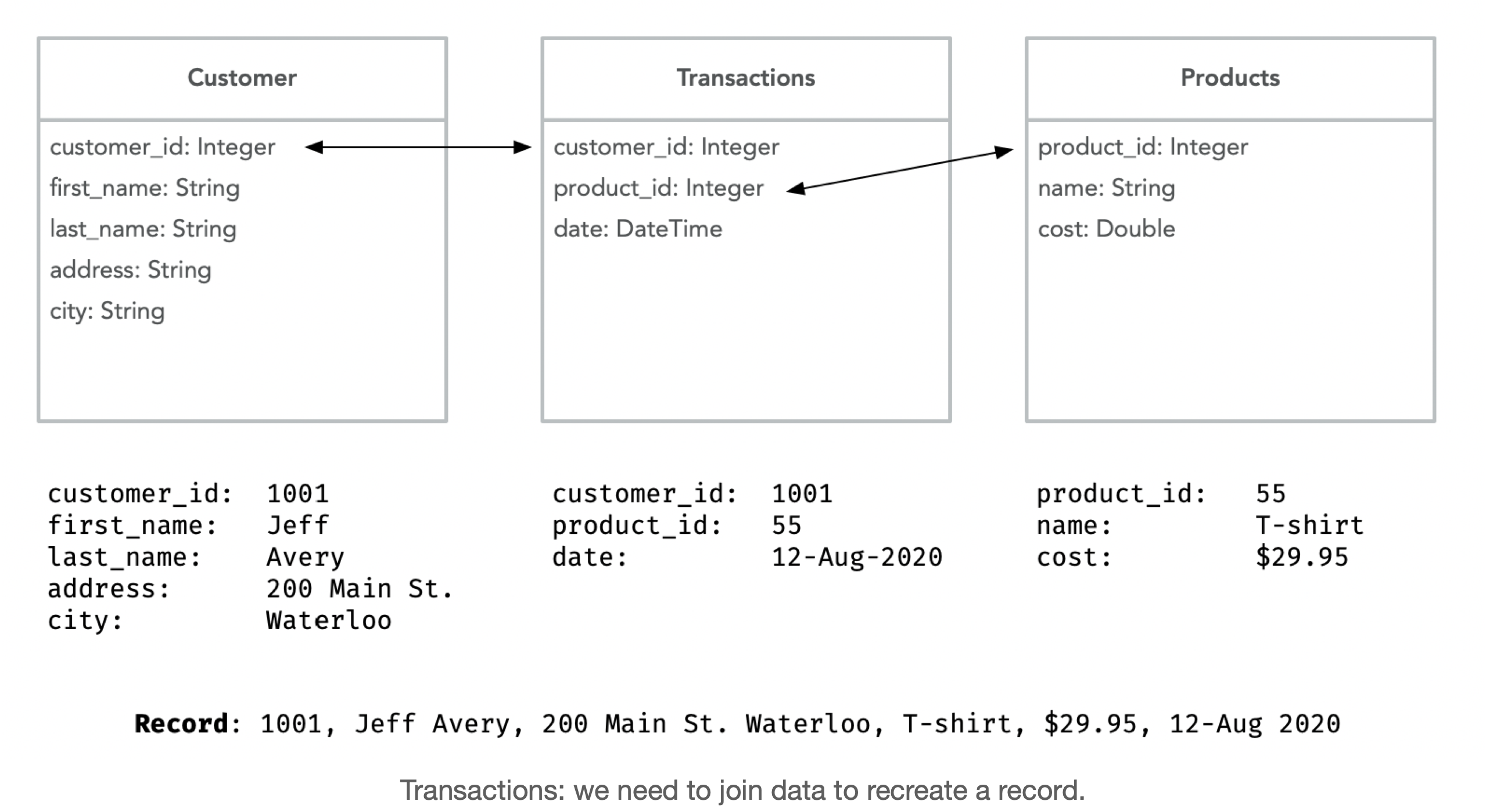

- Include online and offline capabilities in your application, leveraging both local and remote data storage.

- Design services that can provide remote capabilities to your application.

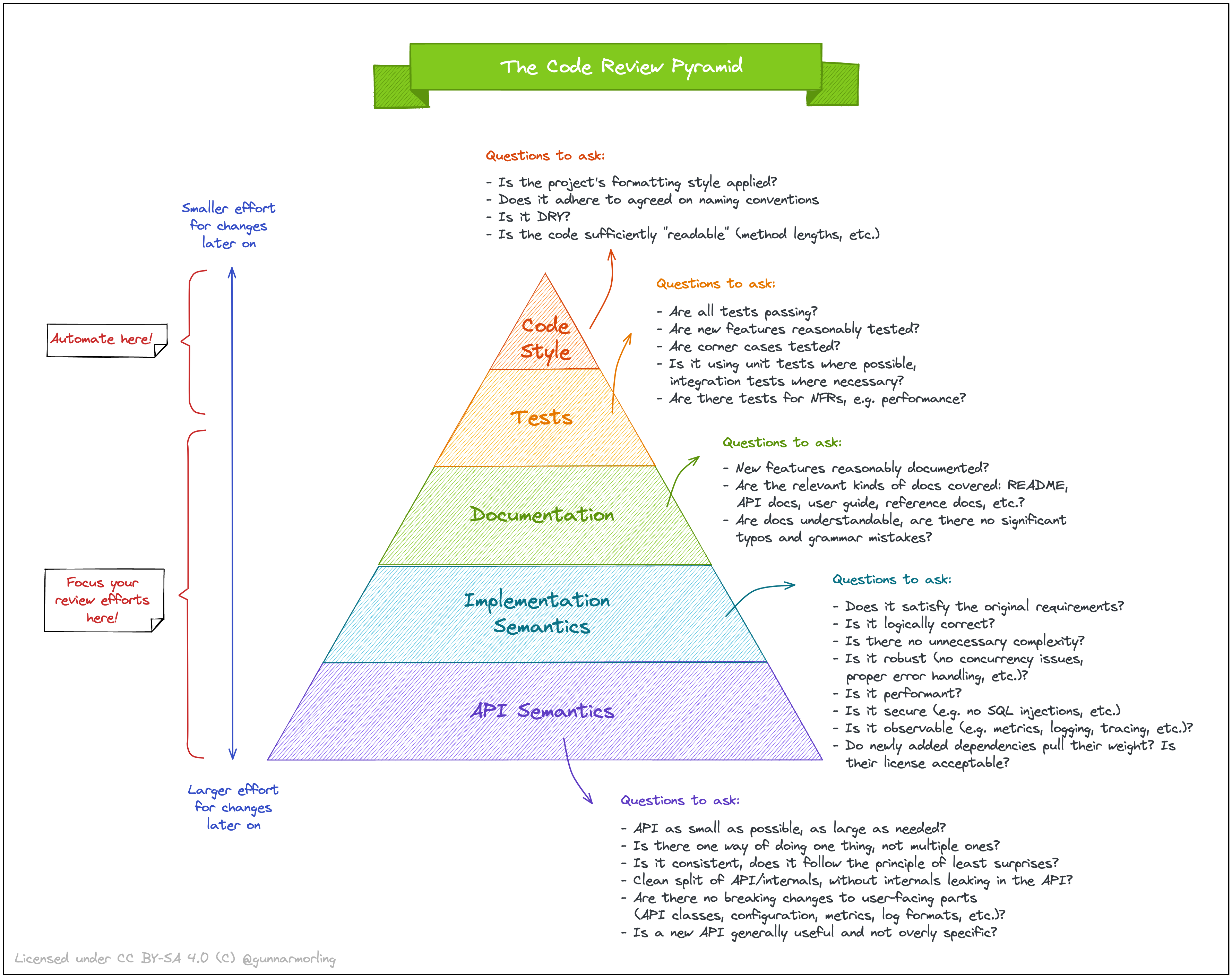

- Produce automated tests as an essential part of the development process.

- Apply debugging and profiling techniques as required during development.

Assessment

This course is designed around your team project, and most activities are tied to the course project in some way. You will be assessed on both your individual work and the team project.

The course schedule lists the due dates for each component.

Individual grades (33%)

Assessments based on individual work.

| Item | What it addresses | Grade |

|---|---|---|

| Quizzes | Quizzes covering lecture content. | 10 x 2% = 20% |

| Participation | Attending and participating in the project demos. | 4 x 2% = 8% |

| Team assessment | Rating from your team members at the end of the term | 5% |

Quizzes

We have weekly quizzes for the first 10 weeks of the course. Quiz content is based entirely on material presented in lectures that week. You are responsible for all material presented, not just what is in the slides.

Quizzes are hosted in Learn (Submit > Quizzes). Each quiz opens on Fri at 6:00 PM, and includes the lecture material from the preceding week. It remains open until the following Fri at 6:00 PM (i.e. each quiz is open in Learn for a full week).

- Each quiz is 15 minutes in length. You are only allowed one attempt.

- Quizzes are open-book, meaning that you may refer to your notes while writing the quiz.

- Quizzes must be completed individually, without assistance. You are not permitted to collaborate or communicate with other students about the quiz content.

- We normally do not grant extensions for missed quizzes. See policies.

Participation

Everyone is expected to attend project demos and present their contribution. Participation marks are awarded for attending and actively participating in the process. Attendance is mandatory. See policies.

Team assessment

At the end of the course, teams members will rate each other’s performance through the term. This accounts for 5% of your grade. See team assessment for details.

Team grades (67%)

Project milestones earned by the team.

| Item | What it addresses | Grade |

|---|---|---|

| Project proposal | Project identified, requirements logged. | 5% |

| Design proposal | Detailed design document. | 5% |

| Project iterations | Features completed, release process followed, demo. | 4 x 8% = 32% |

| Final submission | Completed project including documentation. | 25% |

All project deliverables

- See the appropriate page linked above for the specifics of each milestone.

- Grading details are available for each item in

Learn>Submit>Rubric.

Policies

The following policies set expectations for how this course will be managed. Your responsibilities are outlined here, so you should take time to review them carefully.

Course Project

Participation

You are expected to participate in the course project, and all related activities, to the best of your ability. This means:

- You must be able to attend class with your team. You are not allowed to take this course remotely, or while on a work term that prevents you from attending in-person. If you attempt to take this course remotely, you may be required to withdraw from the course.

- If you fail to participate in a meaningful way during the term, you may be removed from the course. This may be done at any time prior to the start of the “Drop with WD” period. See the Important dates calendar for specific term dates.

- The instructor reserves the right to adjust grades if it is determined that a team member failed to substantially contribute to the course project. Failing to participate in team demos, without an explicit exemption from the instructor, is grounds for reassessing grades.

This course is not suitable for students on a work term. You must be physically present for demos.

Team formation

You are expected to form project teams in the first couple of weeks. The following guidelines apply:

- Students are responsible for matching up and forming teams. Teams must be formed by the end of the second week (i.e. the add-course deadline). If you fail to find a team, you must inform the instructor by this deadline.

- We do not guarantee you a team after the end of the second week. If you have not joined a team, and have not made some arrangement with the instructor, you may be required to withdraw from the course.

- Course enrolment will be managed by the instructor to encourage teams of four students. If necessary, the instructor may authorize larger or smaller teams, or modify team membership to accommodate everyone that is enrolled in the course.

Team demos

You are expected to participate in each project iteration. This includes contributing to each sprint, and attending and participating in team demos. If you fail to attend without a valid reason, you will receive a zero for that demo participation grade. At the instructors discretion, you may be exempt if:

- You have a conflicting coop interview and you are unable to reschedule it (per coop policy, rescheduling should be your first attempted course of action).

- You are ill on the day of the demo, and submit a VIF - see below for instructions.

- Other reason approved by the instructor ahead-of-time.

If an exemption is granted, it will take the form of an EXEMPT status for that component.

We do not typically allow absences from demos without a very compelling reason. e.g., “I want to study for another course”, or “I want to go home on the weekend”, or “I missed the bus” are not typically valid reasons for an exemption. Also, failure to contact us before missing the demo is an automatic zero for demo participation.

Absences

If you need to be absent and miss an assessment, please see the specific policies below.

Illness

If you are ill, and want relief from a course deliverable, you should follow the guidelines and steps outlined under Math Accommodations > Submitting a VIF.

You should also inform the instructor via email and also inform your team members so that they can make accommodations in your absence.

Religious accommodations

If you need to be absent for reasons related to a religious observance, please follow the instructions under Math Accommodations > Religious observances.

Please also inform the instructor via email.

Short-term absences

You cannot use a short-term absence (STA) to delay a team deliverable e.g., a team demo or deliverable. However, you can use it to be excused from the attendance requirement; your team will need to run the demo without you. You may also use a STA for an exemption from a quiz, if it’s submitted within 24 hours of the quiz deadline. To use a STA to request an exemption from a quiz, you need to fill out 2 forms:

- Math Accommodations > Short-Term Absence form, and

- CS 346 declaration form to inform the instructor.

Note that you MUST fill in both forms for the absence request to be considered valid.

Grading policies

Grade exemptions

If an exemption is granted for any reason (see above), it will normally take the form of an EXEMPT status for that component. The specific exempt component will not be included in your grade calculation, and grade weights will be redistributed across other components.

Regrade requests

Quizzes are marked automatically by Learn, and course grades are released by 10:00 AM on the Monday after a quiz closes. Other materials are manually graded by TAs, and grades are normally returned within 1 week of the due date.

Regrade requests for graded materials will be considered for one week after the grade has been released; we will not entertain last-minute regrade requests. If you wish to dispute a grade, email the instructor (for quizzes) or your TA (for project submissions). Contact information is here.

Incomplete (INC) grades

A grade of INC based on missed work will not normally be granted in this course.

Inclusiveness

It is our intent that students from all diverse backgrounds and perspectives are well-served by this course, and that student’s learning needs be addressed both in and out of class. We recognize the immense value of the diversity in identities, perspectives, and contributions that students bring, and the benefit it has on our educational environment. Your suggestions are encouraged and appreciated. Please let us know ways to improve the effectiveness of the course for you personally or for other students or student groups.

In particular:

- We will gladly honour your request to address you by an alternate/preferred name or pronoun. Please advise us of this preference early in the term, so we may make appropriate changes to our records.

- We will honour your religious holidays and celebrations. Please inform us of these at the start of the course.

- We will follow AccessAbility Services guidelines and protocols on how to best support students with different learning needs. Please submit these requests early in the term so that suitable accommodations can be provided.

Academic integrity

To maintain a culture of academic integrity, members of the University of Waterloo community are expected to promote honesty, trust, fairness, respect and responsibility. Contact the Office of Academic Integrity for more information.

You are expected to follow the policies outlined here for quiz and project submissions.

To ensure academic integrity, MOSS (Measure of Software Similarities) is used in this course as a means of comparing student projects. We will report suspicious activity, and penalties for plagiarism/cheating are severe. Please read the available information about academic integrity very carefully.

Ethical behaviour

Students are expected to act professionally, and engage one another in a respectful manner at all times. This expectation extends to working together in project teams. Harassment or other forms of personal attack will not be tolerated. Course staff will not referee interpersonal disputes on a project team; incidents will be dealt with according to Policy 33.

Plagiarism and third-party code

Students are expected to either work on their own (in the case of quizzes), or work with a project team (for the remaining deliverables in the course). All work submitted should either be their own original work or work created by the team for this course.

The team is also allowed to use third-party source code or libraries for their project under these specific conditions:

- Published third-party libraries in binary form may be used without restriction. This may include libraries for networking, user interfaces and other libraries that are introduced in class.

- Source code from external sources may only be used if the contribution is less than 25 lines of code from a single source. Code copied in this way must be acknowledged with a comment embedded directly in your source code in the appropriate section.

- All external sources (libraries and source code) should be identified in the project’s README file.

Failure to acknowledge a source will result in a significant penalty to your final project grade (ranging from a minor deduction to a grade of zero for the project, depending on the severity of the infraction). Note that MOSS will be used to compare student assignments, and that this rule also applies to copying from other student projects, source code found online, or projects from previous terms of this course.

Reuse of materials

You cannot base your project in part or in whole on coursework that you have completed for a different course. You cannot submit the same project to multiple courses for credit, even if you are taking the courses concurrently (i.e. CS 346 and CS 446 would require different projects to be submitted that do not share source code).

Similarly, you cannot use anything that you created prior to the start of this course without explicit written permission from the instructor. This includes code, documentation, and other materials that you may have created in previous courses or work terms, or materials that you have created on your own time.

Generative AI & LLMs

The use of Generative AI and/or Large Language Models (e.g., ChatGPT, CoPilot and similar systems) is restricted in this course. You are not allowed to use such systems when writing quizzes, or for generating any part of your written submission (e.g., design document, user instructions, final report).

You are allowed to use these tools to generate a limited amount of code towards your project, provided that you adhere to the policies described above under plagiarism. Content generated by these systems must be sourced and cited properly, just like any other third-party source code.

Improper use of these tools will be subject to Policy 71 and an investigation into academic misconduct.

Keep in mind that if you use these types of systems to generate code, you are responsible for what is produced! These models can generate incorrect or illogical code. Non-working generated code will not be an acceptable excuse for failing to meet project requirements or deadlines.

Student discipline

A student is expected to know what constitutes academic integrity to avoid committing an academic offence, and to take responsibility for his/her actions. A student who is unsure whether an action constitutes an offence, or who needs help in learning how to avoid offences (e.g., plagiarism, cheating) should seek guidance from the course instructor, academic advisor, or the undergraduate Associate Dean.

For information on categories of offences and types of penalties, students should refer to Policy 71, Student Discipline. For typical penalties, check Guidelines for the Assessment of Penalties.

Intellectual property

Students should be aware that this course contains the intellectual property of their instructor, TA, and/or the University of Waterloo. Intellectual property includes items such as:

- Lecture content, spoken and written (and any audio/video recording thereof)

- Lecture handouts, presentations, and other materials prepared for the course (e.g., PowerPoint slides)

- Questions or solution sets from various types of assessments (e.g., assignments, quizzes, tests, final exams), and

- Work protected by copyright (e.g., any work authored by the instructor or TA or used by the instructor or TA with permission of the copyright owner).

Course materials and the intellectual property contained therein, are used to enhance a student’s educational experience. However, sharing this intellectual property without the intellectual property owner’s permission is a violation of intellectual property rights. For this reason, it is necessary to ask the instructor, TA and/or the University of Waterloo for permission before uploading and sharing the intellectual property of others online (e.g., to an online repository). Permission from an instructor, TA or the University is also necessary before sharing the intellectual property of others from completed courses with students taking the same/similar courses in subsequent terms. In many cases, instructors might be happy to allow distribution of certain materials. However, doing so without expressed permission is considered a violation of intellectual property rights.

Continuity plan

As part of the University’s Continuity of Education Plan, every course should be designed with a plan that considers alternate arrangements for cancellations of classes and/or exams.

Here is how we will handle cancellations in this course, if they occur.

-

In the case of minor disruptions (e.g. one lecture), the lecture content will be reorganized to fit the remaining time. This should not have any impact on demos or deliverables.

-

Cancellation of multiple classes may result in a reduction in the number of sprints and associated deliverables to fit the remaining time. If this happens, lecture content will also be pruned to fit available time. Assessment weights will be redistributed evenly over the remaining content if required to align with the material.

-

Cancellation of in-person (midterm or final) examinations has no effect on this course, since we do not have scheduled exams and quizzes are online.

Resources

All required course materials, including lecture slides, are freely available on the course website.

We use the following additional websites.

- Piazza 🔗: Forum software. Used for course announcements, and you can ask questions.

- Learn 🔗: Used for quizzes, and project submissions. Grades are also recorded here.

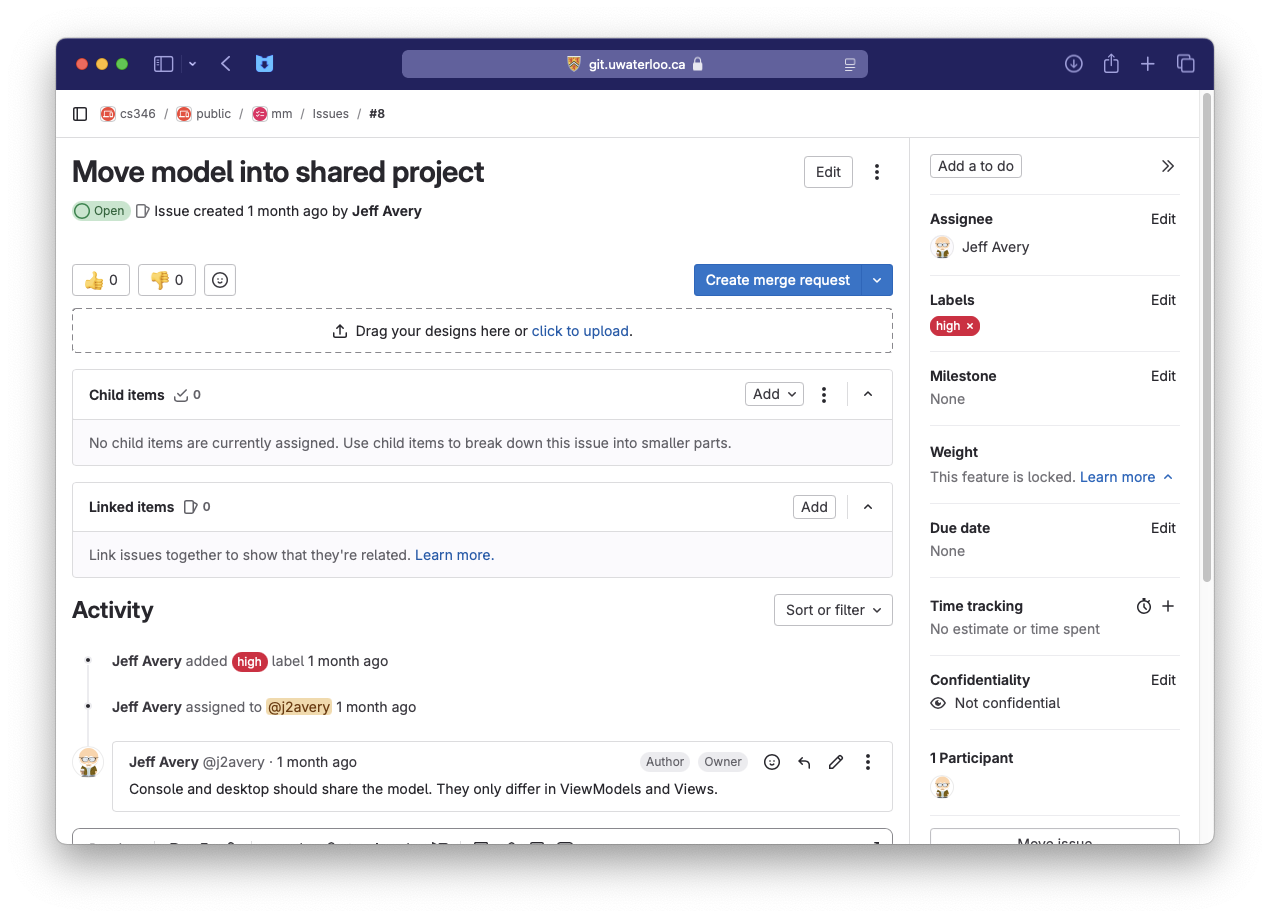

- GitLab 🔗: CS 346 public repository, with sample code and templates.

Finally, you will require access to a computer to work on the course project.

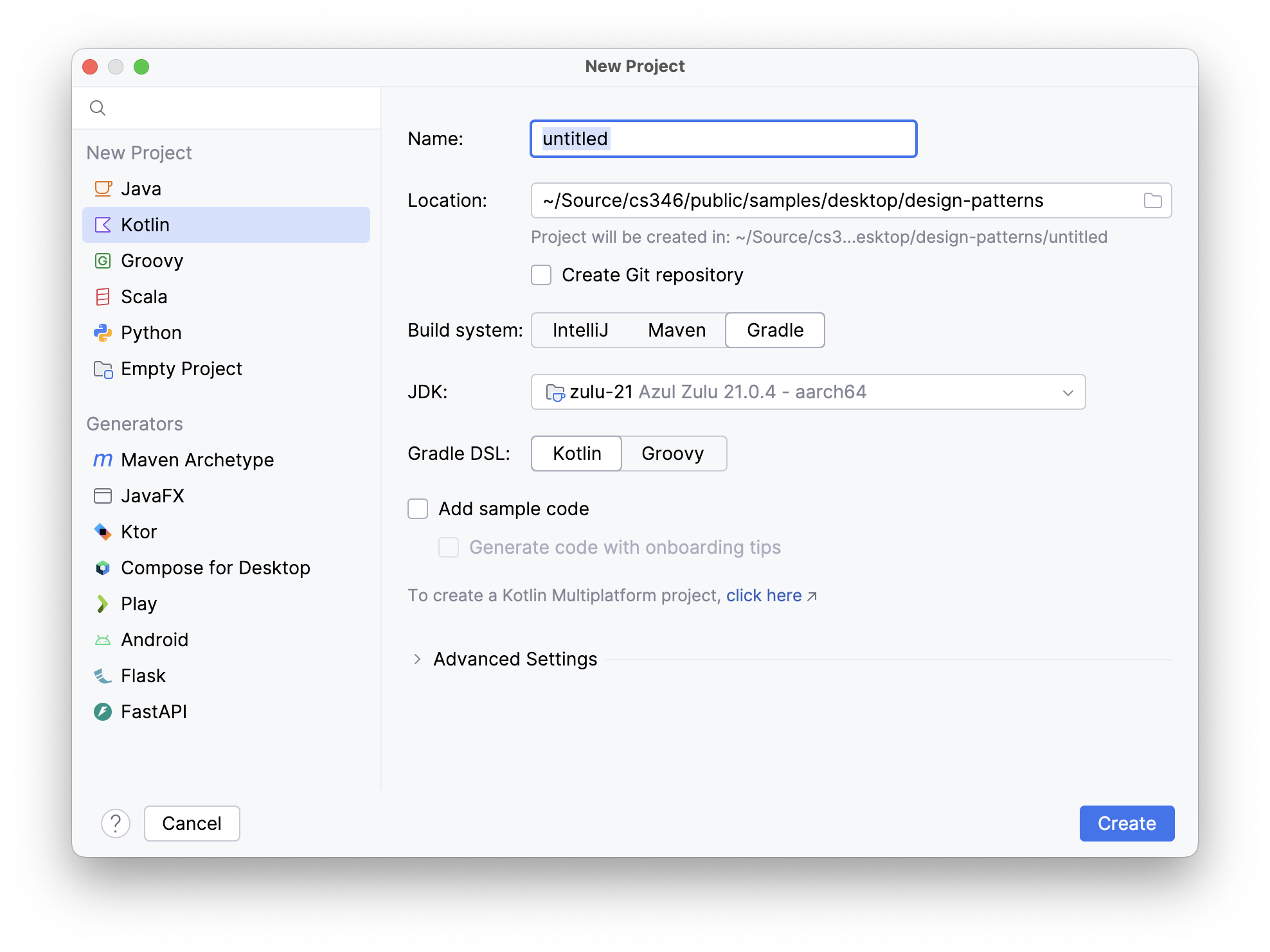

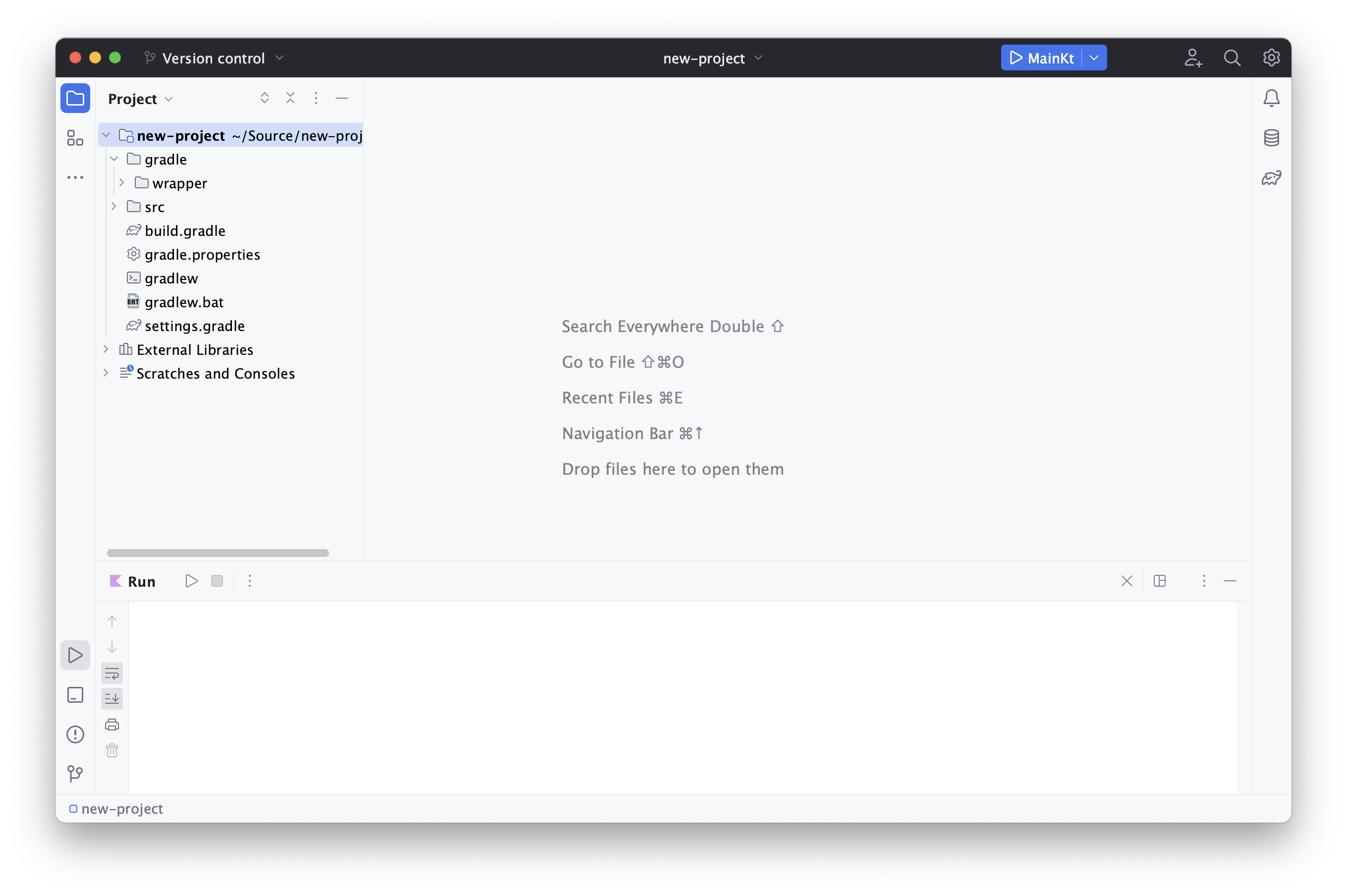

- See Development > Getting-Started > Toolchain installation for details.

We use Piazza for course announcements, so please make sure to monitor that site regularly. When signing up, please use your real name (you can still post anonymously, but the course staff can see your actual name when you do so).

Contact us

You are welcome to contact us directly. Your assigned TA will be listed on the Project teams page after Week 2.

| Contact List | |

|---|---|

| Prof. Jeff Avery Course Instructor jeffery.avery@uwaterloo.ca MC 6461 |  |

| Caroline Kierstead Instructional Support Coordinator (ISC) ctkierst@uwaterloo.ca |  |

| Shaikh Shawon Arefin Shimon Teaching Assistant ssarefin@uwaterloo.ca |  |

| Rafael Ferreira Toledo Teaching Assistant rftoledo@uwaterloo.ca |  |

| Favour Kio Teaching Assistant gn2kio@uwaterloo.ca |  |

| Paul Wooseok Lee Teaching Assistant w69lee@uwaterloo.ca |  |

| Aniruddhan Murali Teaching Assistant a25mural@uwaterloo.ca |  |

| Amber Wang Teaching Assistant j2369wan@uwaterloo.ca |  |

Schedule

The course schedule for the term, with important dates.

Class Times

The class schedule is below. You must be enrolled in corresponding LEC/LAB sections. Both Wed and Fri classes are used for lectures, project activities and demos; plan to attend both classes.

| Morning Sections | Afternoon Sections | |

|---|---|---|

| Wed classes | LEC 001 @ 10:30a - 12:20p / EXP 1689 | LEC 002 @ 2:30p - 4:20p / MC 4021 |

| Fri classes | LAB 101 @ 10:30a - 12:20p / MC 2035 | LAB 102 @ 2:30p - 4:20p / MC 2038 |

Deadlines

Significant dates and deadlines for the term are listed below.

- Quizzes open at Fri @ 6:00 pm, covering that week’s lectures. They are open for a full week, and close the following Fri @ 6:00pm. Make sure to submit before the deadline.

- Project demos are conducted in-class during your scheduled demo time.

- Other deliverables are due by Fri @ 6:00pm.

| Week | Dates | Wed, Fri Lectures | Fri Submissions |

|---|---|---|---|

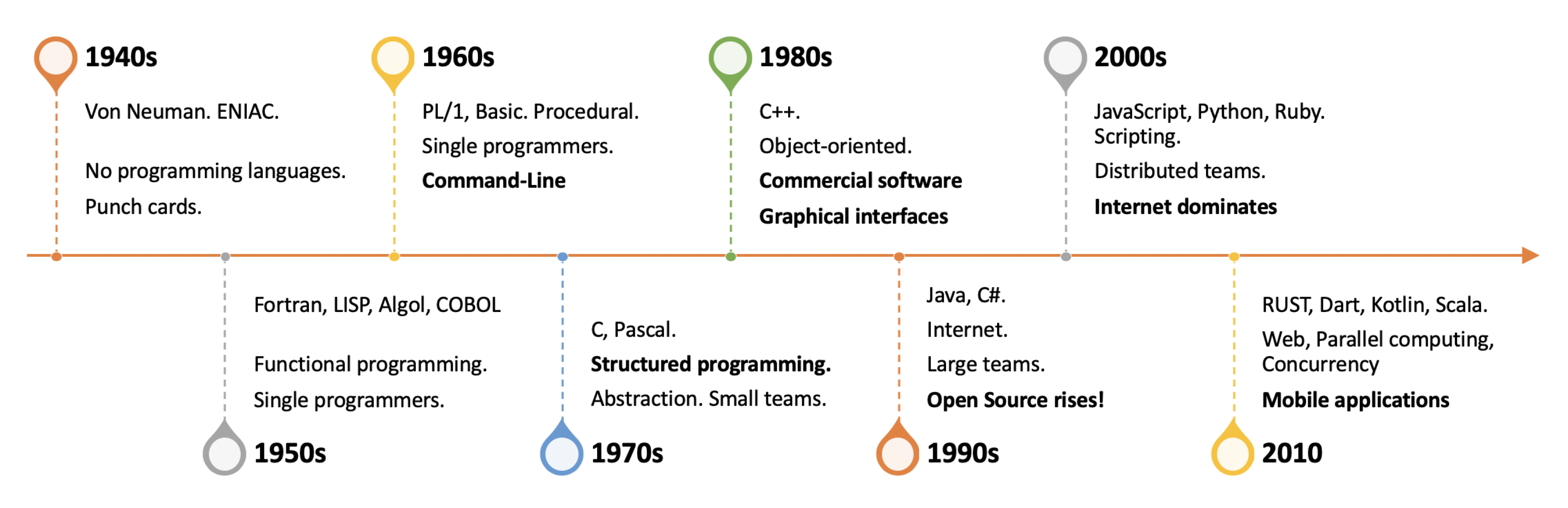

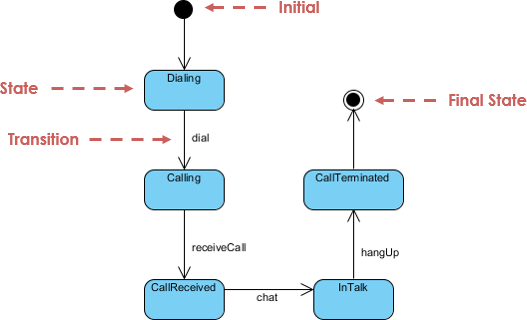

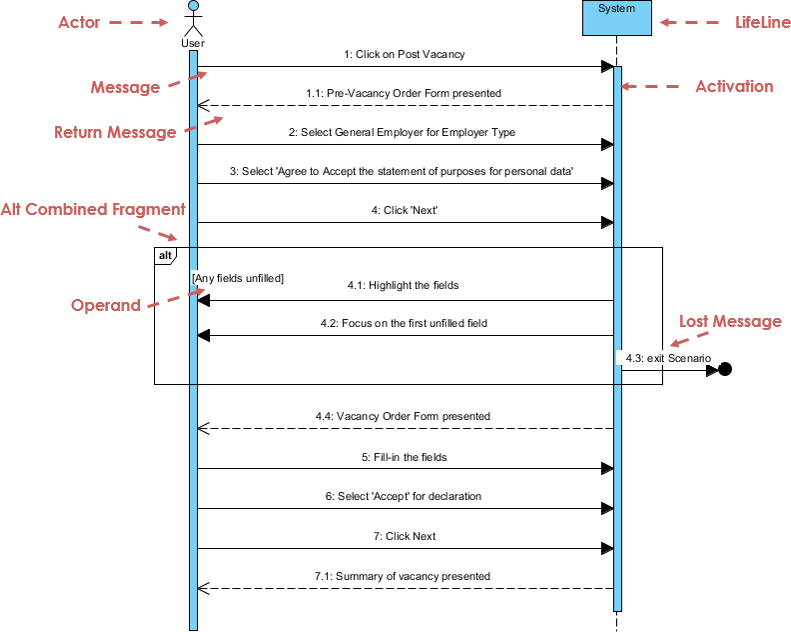

| 1 | Jan 8, 10 | Course outline, Introduction, Agile, Design thinking | |

| 2 | Jan 15, 17 | GitLab, Documentation, Kotlin | Project setup Q1 due from wk 1 |

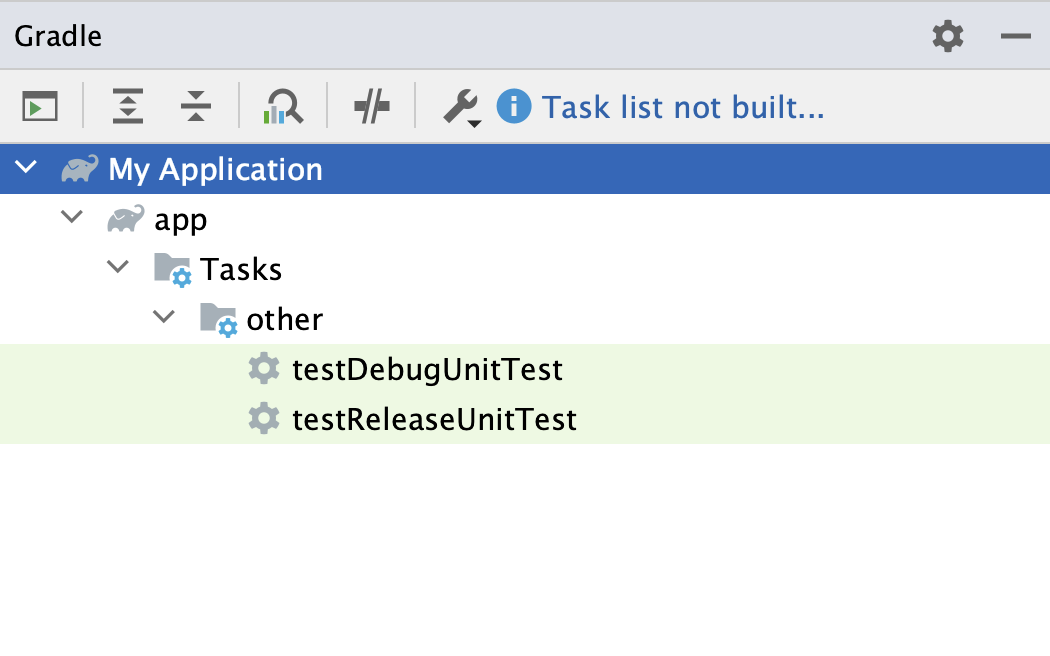

| 3 | Jan 22, 24 | Gradle, Architecture & design | Project proposal Q2 due from wk 2 |

| 4 | Jan 29, 31 | User interfaces, Desktop/mobile apps | Design proposal Q3 due from wk 3 |

| 5 | Feb 5, 7 | Unit testing, Kickoff | Q4 due from wk 4 |

| 6 | Feb 12, 14 | Databases | Demo 1 (times) Q5 due from wk 5 |

| 7 | Feb 19, 21 | Reading week | - |

| 8 | Feb 26, 28 | Data formats, JSON | Q6 due from wk 6 |

| 9 | Mar 5, 7 | Web services, Cloud services | Demo 2 (times) Q7 due from wk 8 |

| 10 | Mar 12, 14 | Concurrency - room change Wed AM to AL 116 | Q8 due from wk 9 |

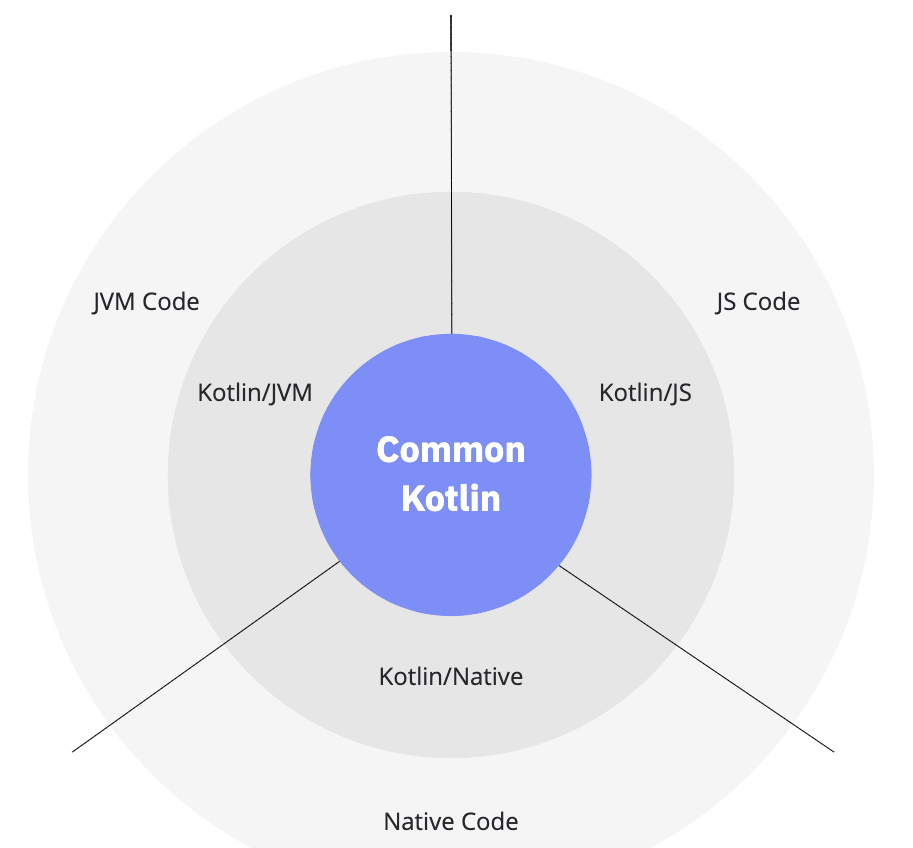

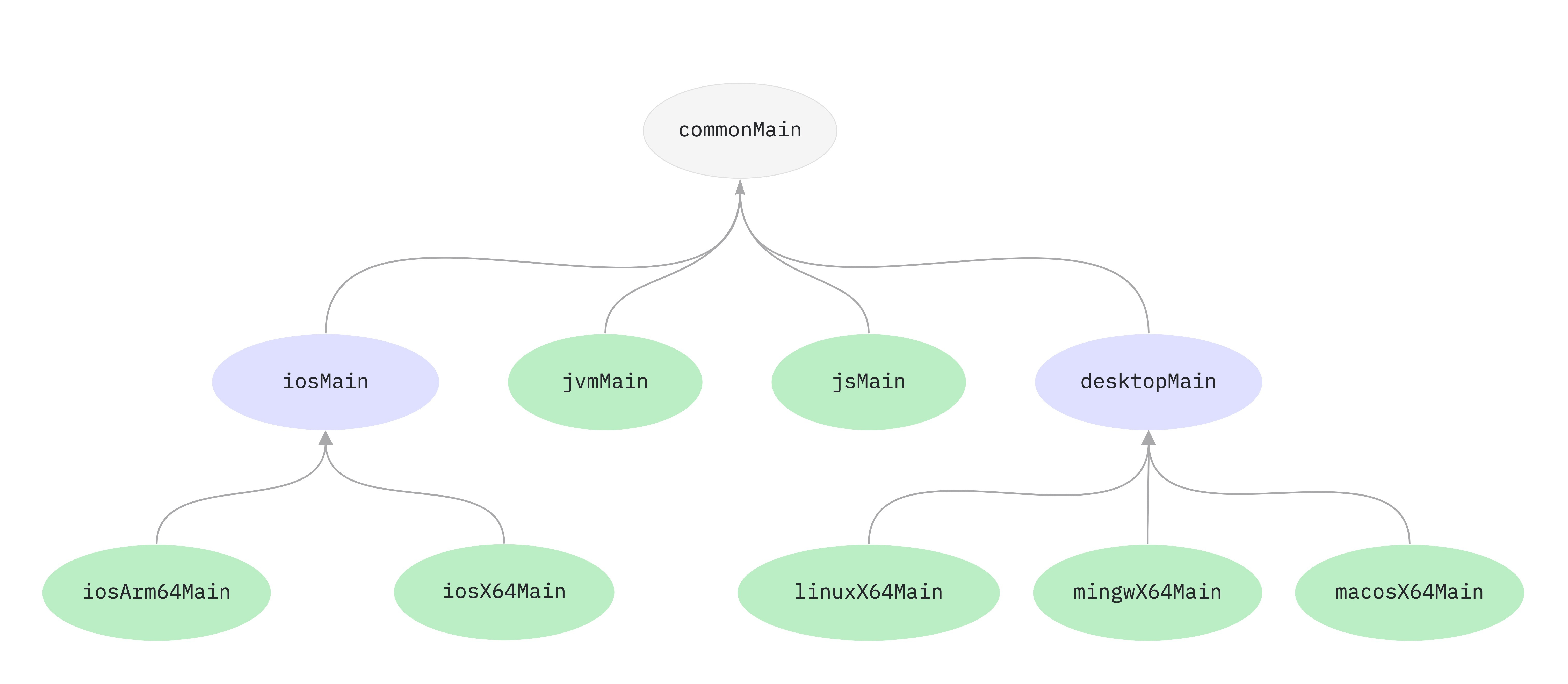

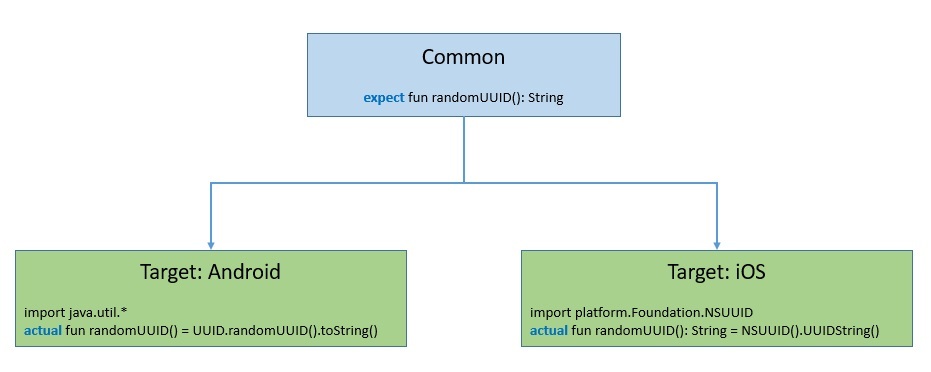

| 11 | Mar 19, 21 | Kotlin Multiplatform (KMP) | Demo 3 (times) Q9 due from wk 10 |

| 12 | Mar 26, 28 | Final submission overview | Q10 due from wk 11 |

| 13 | Apr 2, 4 | - | Demo 4 (times) Final submission - see below |

NOTE: the final submission is due Fri Apr 4, but can be handed in up to Sun Apr 6 @ 11:59 PM without penalty.

Lecture plans

Week-by-week lecture plans, with links to course slides and notes.

- Week 01 - Introduction, Design thinking

- Week 02 - GitLab, Kotlin programming

- Week 03 - Gradle, Architecture & Design

- Week 04 - User interfaces, Applications

- Week 05 - Unit testing, Project kickoff

- Week 06 - Databases, Demo 1

- Week 07 - Reading week

- Week 08 - Data formats

- Week 09 - Web services, Demo 2

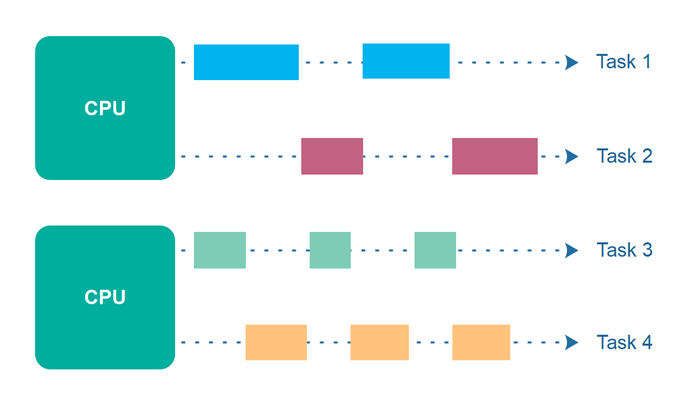

- Week 10 - Concurrency

- Week 11 - Kotlin Multiplatform, Demo 3

- Week 12 - Final Submission Guidelines

- Week 13 - Wrapup

Week 01 - Introduction, Design thinking

Welcome to the course! We have a lot of material to cover in the first few weeks so it’s important that you attend class. Please email the instructor or post on Piazza if you have questions.

Wed

Lectures

- Course outline - slides

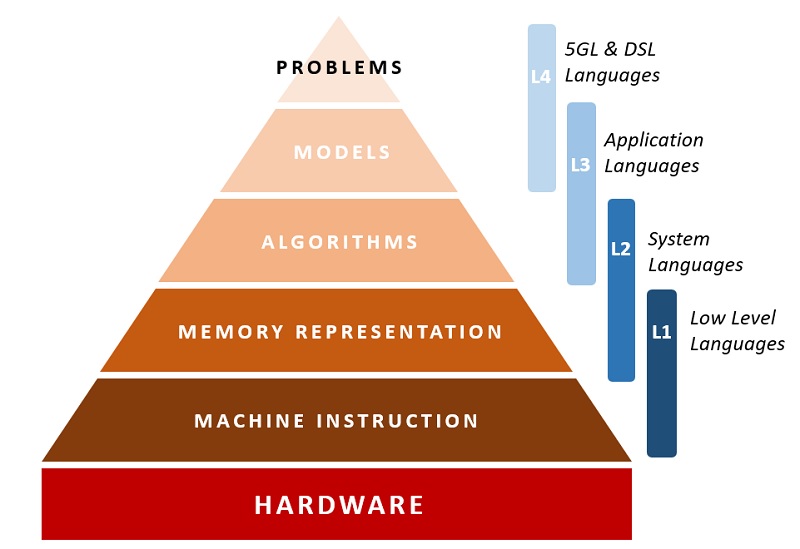

- Introduction - notes, slides

- Project requirements - notes

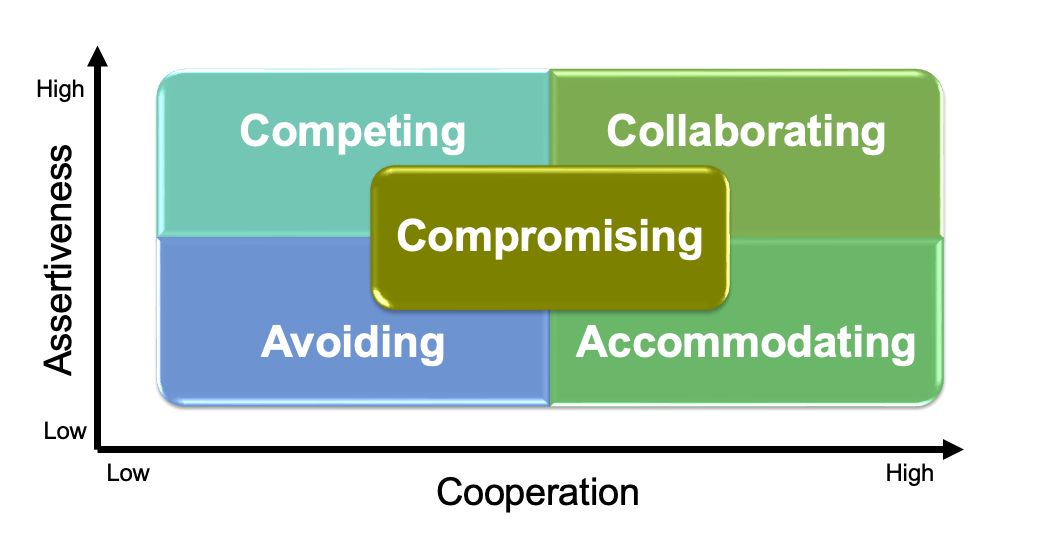

- Teamwork - notes, slides (team contract and team roles only)

Fri

Lectures

Outside of class

Your project goals this week are focused on finding a project team!

- Search for teammates in this Piazza post.

- Form a project team this week.

- Review the requirements with your team and start brainstorming project ideas.

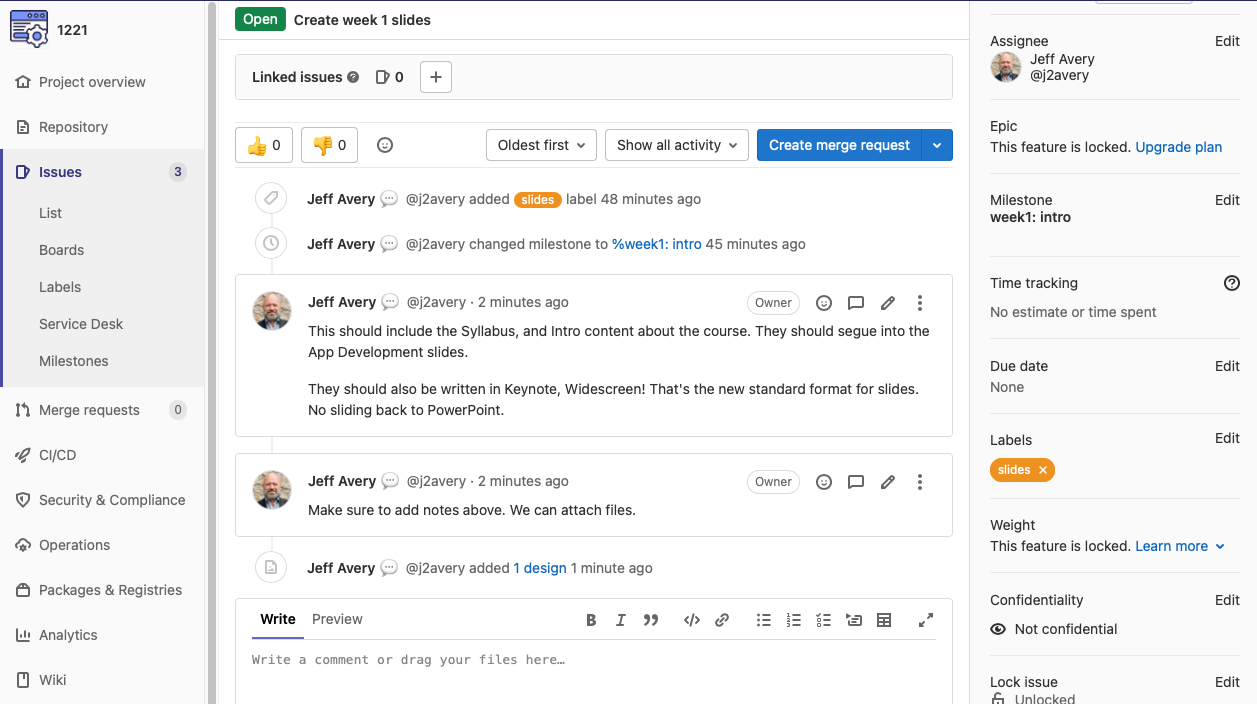

Week 02 - GitLab, Kotlin

How to setup your GitLab project. Getting started with Kotlin!

Wed

Lectures

- Project setup in GitLab - notes, slides

- Documentation - notes, slides

- Toolchain setup - notes, slides

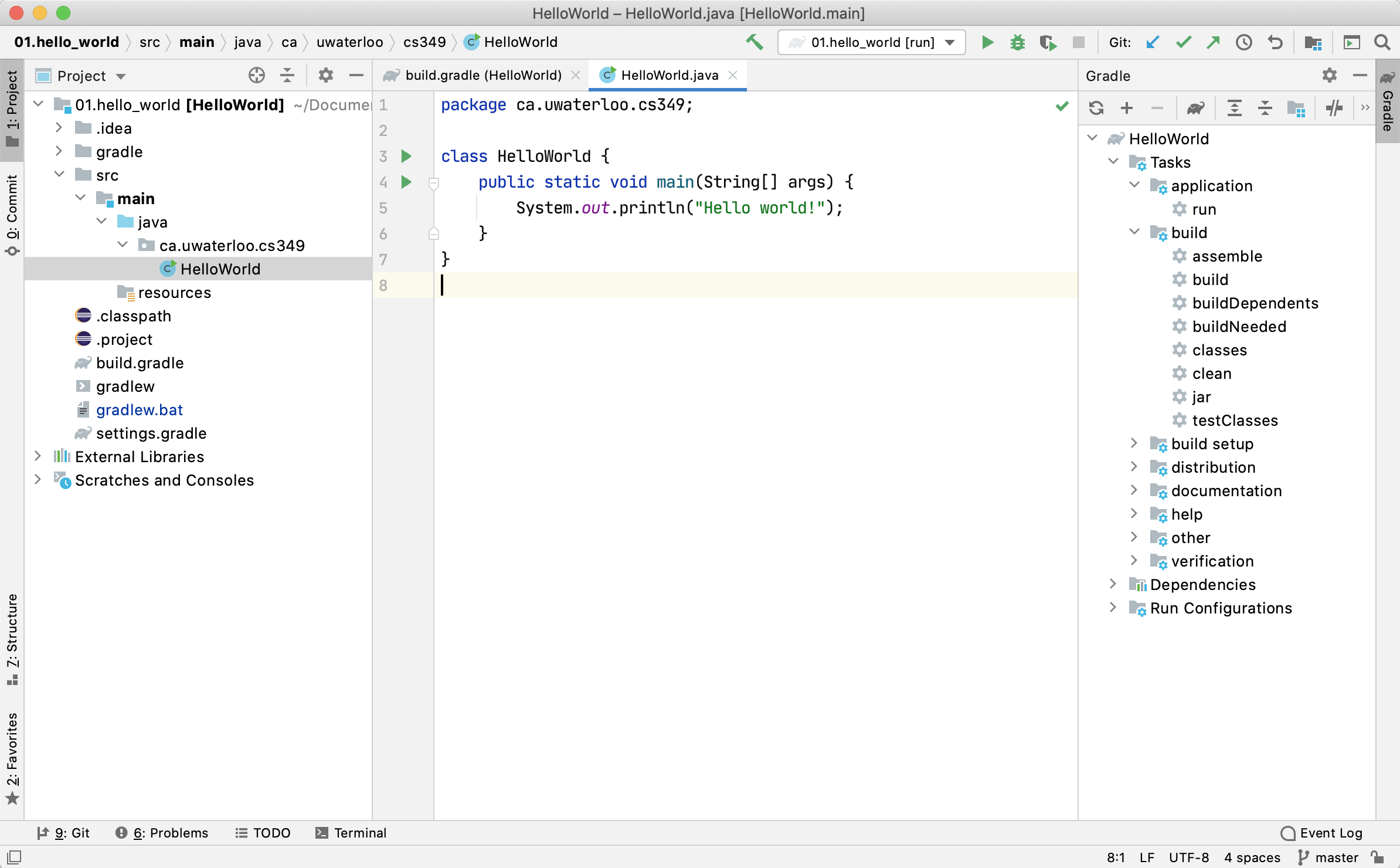

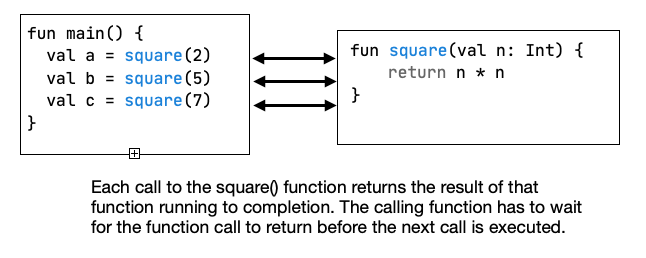

- Introduction to Kotlin - notes, slides

Fri

Lectures

Outside of class

You should complete your project setup. This is due Friday.

Once that is done, you should start working on your project proposal. This is the first part of the Design Thinking process, and involved identifying and interviewing users, creating personas and extracting user stories.

- Submit your project setup.

- Start working on your project proposal. Start early!

Week 03 - Gradle, Architecture & Design

How build systems work and how to configure Gradle to build your project. How your application should be structured; benefits of “good design”.

Wed

Lectures

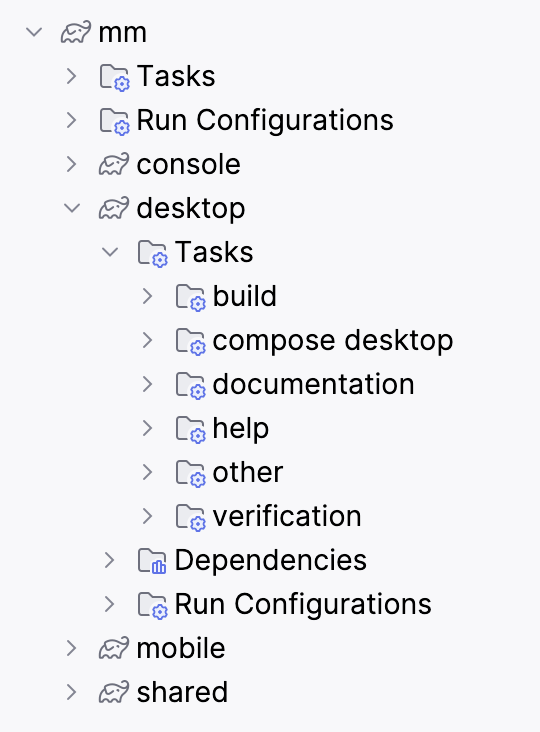

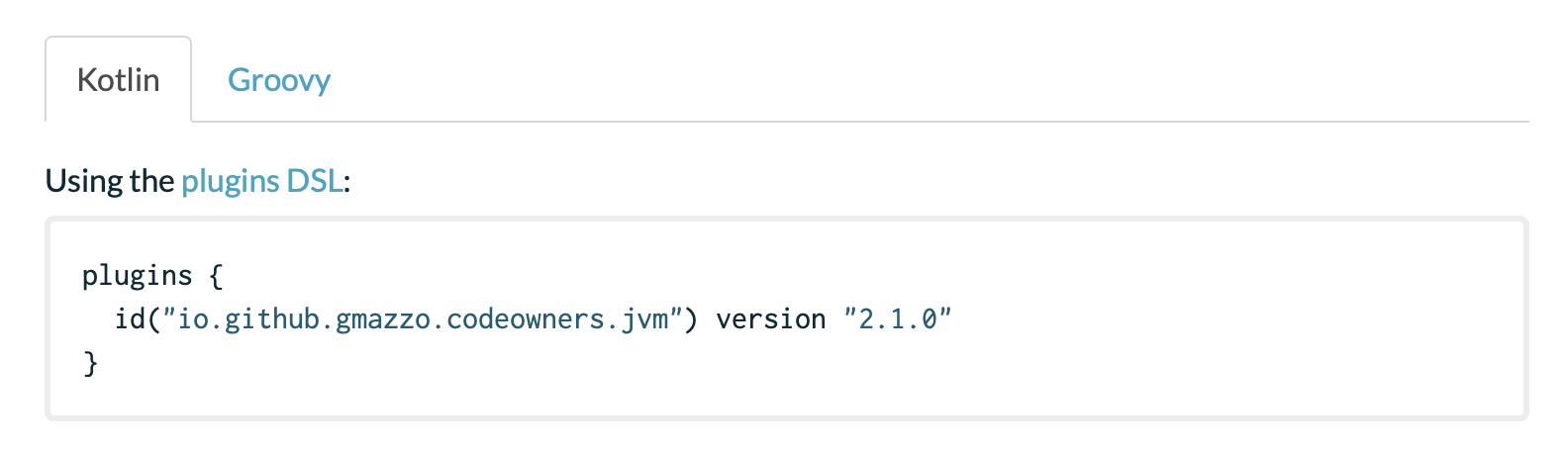

- Gradle - notes, slides, demo project

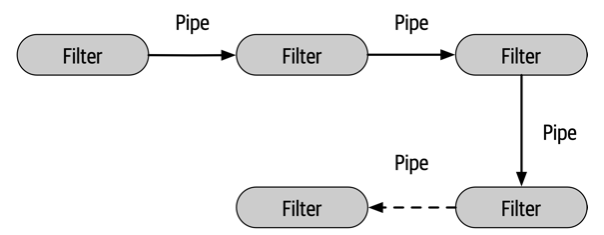

- Architecture - notes, slides

Fri

Lectures

Outside of class

Review project setup feedback in Learn. Things to watch out for:

- Projects should open to the README.md file. It’s supposed to be the landing page and act as a TOC for other documents.

- Project documentation content should be stored in Wiki pages. If you need images, attach them directly to the document. Please don’t attach external files as links to Wiki pages e.g., Word documents.

Submit your project proposal. This is due Friday.

Start working on your design proposal! This continues from your project proposal and provides details on your proposed solution, including low-fidelity prototypes of your screens.

Week 04 - User interfaces, Applications

How to build user interfaces for desktop and mobile applications using Compose.

Wed

Lectures

Fri

Lectures

Outside of class

Review project proposal feedback. TAs have finished grading and will have provided feedback that you should consider.

Submit your design proposal, which is due Friday.

- Make sure to address feedback from the project proposal.

- You will have some time in-class to work on this.

(Optional) Prepare for project kickoff in Week 05!

- Create issues in GitLab for each of your requirements.

- At the start of Week 05, you will plan the release. This includes collectively making decisions on what to implement for the upcoming iteration, and assigning work (i.e., issues) to team members.

Week 05 - Unit testing, Project kickoff

How to add unit tests to your project; planning for Demo 1.

Wed

Lecture

Sprint 1 Kickoff - slides

- This is the kickoff for your first iteration!

- You will choose which features to work on for the next two weeks,

- Work towards a demo of these completed features to your TA on Fri Feb 14 (see the team schedule for details).

- For further details, see:

Fri

Open class

- There are no planned lectures or activities.

- The entire class time is meant for you and your team to work on your project.

- Jeff will be in class to help if you need it! When not answering questions, he’ll be live coding changes to his courses project. Plans for today include:

- Migrating API keys into a settings class and a local configuration file.

- Adding regex support to the front-end.

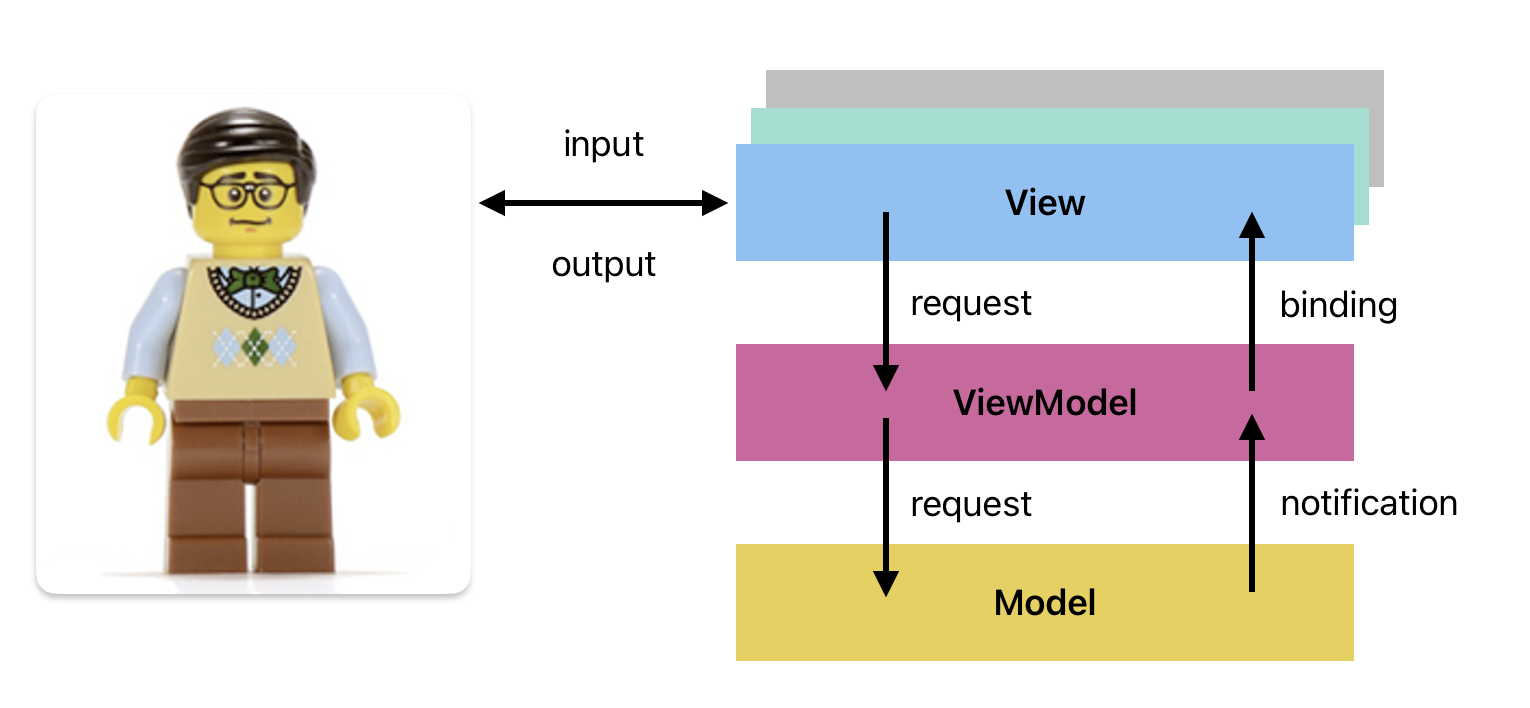

Here’s another sample that demonstrates setting up the MVVM structure: my TODO application from last term.

Week 06 - Databases, Demo 1

How and why to use relational (and document) databases.

Wed

Lecture

Fri

Demo day!

- Demos are conducted in your scheduled classroom.

- See the online schedule for your team’s exact time, and plan to be here 5 mins early.

- Follow the coding & demo guidelines:

- You need to have a software release built, and you should plan on demoing your application from one of your team’s computers.

- Everyone on the team must attend the demo in-person (unless you have coordinated with me ahead-of-time).

Week 07 - Reading Week!

Enjoy your week! We’ll kickoff Sprint 2 when we return on Wed Feb 26th.

Week 08 - Data formats

Wed

Lecture

Sprint 2 Kickoff

- This is the kickoff for your second iteration! Follow the planning guide on the course website

- Make sure to review the feedback from Demo 1 (see Learn: Submit > Dropbox > Project demo 1). You should plan on addressing any feedback before your next demo.

- Demo 2 is Fri Mar 7 - see the team schedule for details.

Make sure to review feedback in Learn > Submit > Dropbox.

Broad feedback:

- Follow the guidelines. Make sure you keep GitLab and issues lists up-to-date.

- Make sure that you have something to demo! User-facing features are best but if that’s not feasible, show us unit tests or diagrams, or … Do NOT show us code in the demo.

- Write unit tests for your work. They don’t have to be complete, but you should make a reasonable effort (and continue adding tests as the project progresses).

- Each person must demo their own work. You cannot have one person present everything.

Fri

Open class

- There are no planned lectures or activities.

- The entire class time is meant for you and your team to work on your project.

- Jeff will be in class to help if you need it! When not answering questions, he’ll be live coding changes to his courses project. Plans for today include:

- Automatic database caching of historical data.

- Scheduled service to automatically run queries.

Week 09 - Web Services, Demo 2

How to access web services/APIs. Using hosted services.

Wed

Lecture

Wed Mar 12: the morning class will be moved to AL 116.

Fri

Demo day!

- Demos are conducted in your scheduled classroom.

- See the online schedule for your team’s exact time, and plan to be here 5 mins early.

- Follow the coding & demo guidelines to prepare.

- Everyone on the team must attend the demo in-person (unless you have coordinated with me ahead-of-time).

Week 10 - Concurrency

Wed

Lecture

The Wed 10:30 AM lecture will be held in AL 116 for this week only. The remaining lectures are held in their regular rooms.

Sprint 3 Kickoff

- Follow the planning guide on the course website.

- Make sure to review Demo 2 feedback in Learn > Submit > Dropbox.

- Demo 3 is on Fri Mar 21 - see the team schedule for details.

Fri

Open class

- There are no planned lectures or activities.

- The entire class time is meant for you and your team to work on your project.

- Jeff will be in class to help if you need it! When not answering questions - if the projector cooperates - he’ll be live coding changes to his courses project.

Week 11 - Kotlin Multiplatform, Demo 3

Wed

Lecture

Fri

Demo day!

- Demos are conducted in your scheduled classroom.

- See the online schedule for your team’s exact time, and plan to be here 5 mins early.

- Follow the coding & demo guidelines to prepare.

- Everyone on the team must attend the demo in-person (unless you have coordinated with me ahead-of-time).

Week 12 - Final Submission Guidelines

Wed

Lecture

- Final Submission Guidelines and Hints - slides

See also

This is your last lecture of the term! The remaining time will be spent working on projects. Don’t forget to:

- Submit your final quiz before Fri @ 6 PM

- Work on your Demo 4 - remember that attendance is mandatory!

- Prepare your final submission

Fri

Open class

- There are no planned lectures or activities.

- The entire class time is meant for you and your team to work on your project.

- Jeff will be in class to help if you need it!

Week 13 - Wrapup

Wed

Open class

- There are no planned lectures or activities.

- The entire class time is meant for you and your team to work on your project.

- Jeff will be in class to help if you need it!

Fri

Demo day!

- Demos are conducted in your scheduled classroom.

- See the online schedule for your team’s exact time, and plan to be here 5 mins early.

- Follow the coding & demo guidelines to prepare.

- Everyone on the team must attend the demo in-person.

Your final submission is due by Sun at 11:59 PM. Make sure that everything is complete and submitted before then! Final grades won’t be released until the end of the exam period.

Project teams

A list of project teams, with links to their project repositories. Demo Time refers to your scheduled time for your team demos. See the course schedule for demo dates.

Morning schedule

LAB101 (Learn)

Amber, Favour, Shaikh (contact info)

| Team Number | Team Members (* TL) | Project | TA | Demo Time |

|---|---|---|---|---|

| LAB101-1 | Alara*, David, Germain, Nicklaus | Roomie | Amber | 10:30a |

| LAB101-2 | Grace, Louis, Rebecca*, Remington | KW Nest | Amber | 10:45a |

| LAB101-3 | David, Jane, Marzuk, Rachel* | Buff Buddies | Amber | 11:00a |

| LAB101-4 | Hans, Nick*, Omar, Ryan | Fantasy Investments | Amber | 11:15a |

| LAB101-5 | Jack, Kaiz, Muj*, Siyeon | WatCourse | Amber | 11:30a |

| LAB101-6 | Jonathan*, Shaili, Neha, Malvika | Flick Pics | Amber | 11:45a |

| LAB101-7 | Erfan, Raiyan, Rohan, Sara* | DuoCode | Favour | 10:30a |

| LAB101-8 | Martin, Rishi, Matthew, Jerry* | washare | Favour | 10:45a |

| LAB101-9 | Vivan, Emily*, Daniel, Christina | DineSafe | Favour | 11:00a |

| LAB101-10 | Devrshi, Nandish, Ashwin, Mehar | WatMarket | Favour | 11:15a |

| LAB101-11 | Marc, Colin, Jacky, Vincent | Recipe Organizer | Favour | 11:30a |

| LAB101-12 | Ashnoor, Nima, Nivriti, Sam* | Youtube Wrapper | Favour | 11:45a |

| LAB101-13 | Raquel*, Estefany, Kyle, Andrea | Pet Care App | Shaikh | 10:30a |

| LAB101-14 | Daniel, Justin, Nikashan, Shaun* | SmartSplit | Shaikh | 10:45a |

| LAB101-15 | Arya, Anson C, Anson Y | RepRadar | Shaikh | 11:00a |

| LAB101-16 | Alex, Gavin, Jerry | Good Samaritan | Shaikh | 11:15a |

| LAB101-17 | Alena, May, Jennifer*, Edward | Chopify | Shaikh | 11:30a |

| LAB101-18 | Joshua, Owen*, Suyeong | Jasper Clinic Flow | Shaikh | 11:45a |

Afternoon schedule

LAB102 (Learn)

Aniruddhan, Paul, Rafael (contact info)

| Team Number | Team Members (* TL) | Project | TA | Demo Time |

|---|---|---|---|---|

| LAB102-1 | Alia, Steven, Johnson, Monica | WatCourse | Aniruddhan | 2:30p |

| LAB102-3 | Anees, Jia*, Bogdan, Vanshaj | Pomodoro Timer | Aniruddhan | 3:00p |

| LAB102-4 | Justin, Hannah, Anne, Laura | Trapezoid | Aniruddhan | 3:15p |

| LAB102-5 | Jason, Saniya, Oliver, Michael* | News Aggregator | Aniruddhan | 3:30p |

| LAB102-6 | Jack*, Conor, Noah, Ashmita | WaterlooMeets | Aniruddhan | 3:45p |

| LAB102-7 | Isabella*, Justin, Jaycob, Ighoise | BudgIT | Aniruddhan | 4:00p |

| LAB102-8 | Momina, Bhaviki, Sarah*, Khadija | Ruby Calendar | Paul | 2:30p |

| LAB102-9 | Amol*, Sophia, Yiwen, Anay | ScanLink | Paul | 2:45p |

| LAB102-10 | Michelle, Ryan, Clifford*, Ted | Zuna | Paul | 3:00p |

| LAB102-11 | Rehan, Kush*, Pranav, Namdar | Housing Hub | Paul | 3:15p |

| LAB102-12 | Jeri, Cindy*, Arjun, Derek | BetterNotes | Paul | 3:30p |

| LAB102-13 | Andrei, Kelvin*, Shixiong, Larry | Student Expense Tracker | Paul | 3:45p |

| LAB102-14 | Max, Eric*, Mario, Brian | AccountaBuddy | Rafael | 2:30p |

| LAB102-15 | Steven, Brasen, Samuel, Richard* | GrubGuru | Rafael | 2:45p |

| LAB102-16 | Nora*, Nikolai, Jagan, Junyi, Gerald | SwapOut | Rafael | 3:00p |

| LAB102-17 | Vignesh, Harishan, Preesha, Ashlyn | brainrot-346 | Rafael | 3:15p |

| LAB102-18 | Daniel*, Ted, Jim, Sumeet | UW Navigation | Rafael | 3:30p |

| LAB102-19 | Jane, Daniel W, Qi kun, Daniel Z* | WATtoEat | Rafael | 3:45p |

Getting started

How do you get started with your project?

Requirements

Guidelines

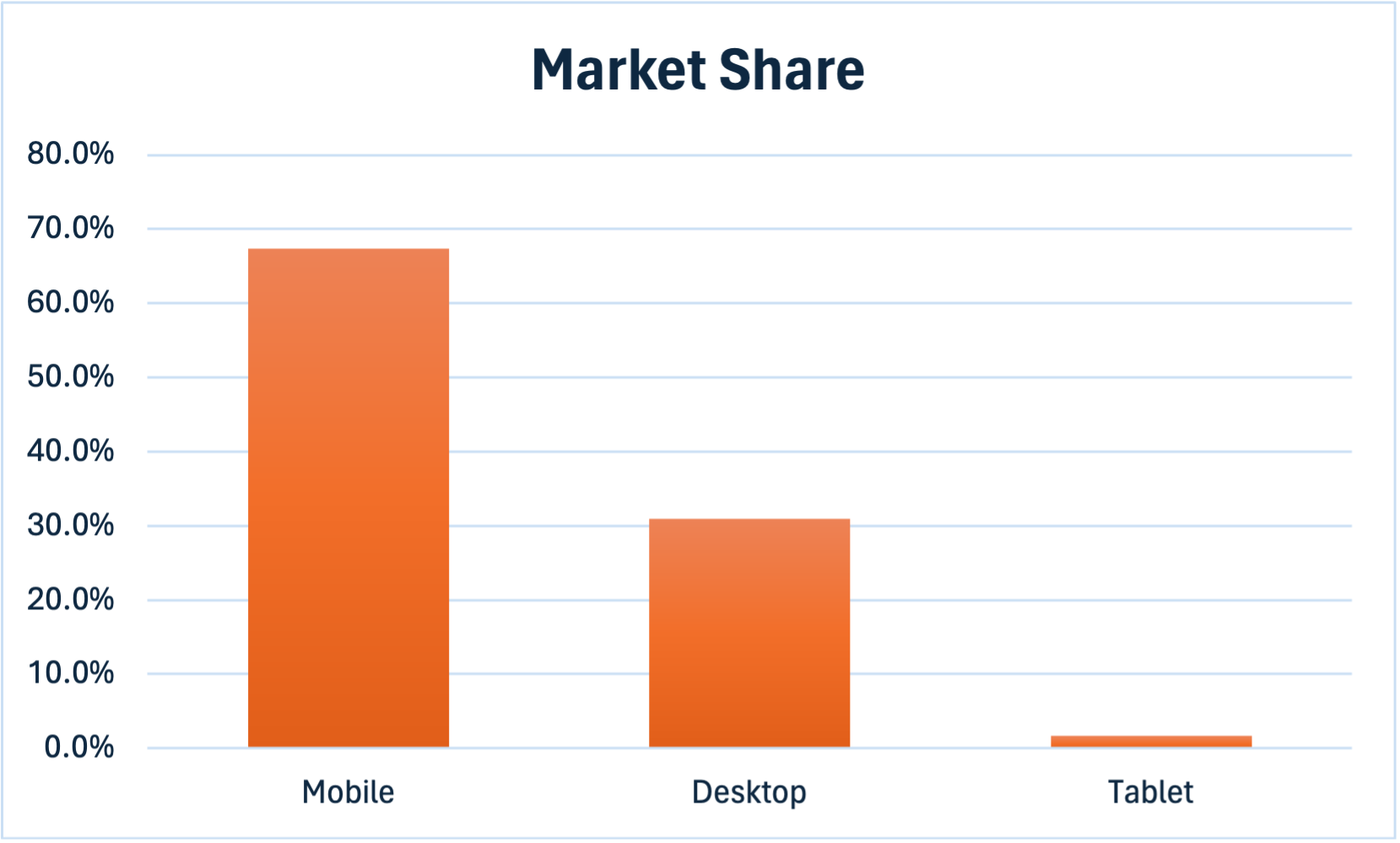

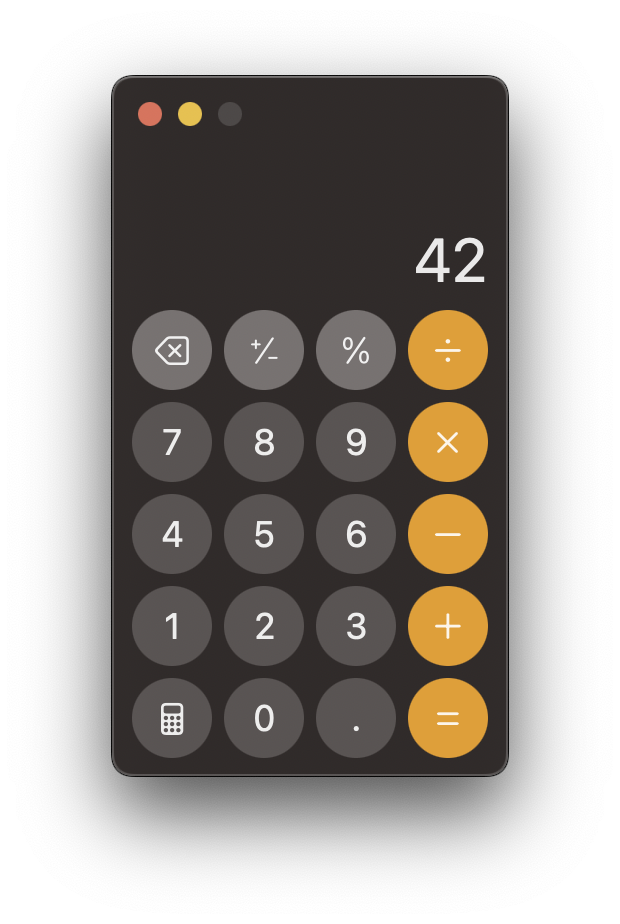

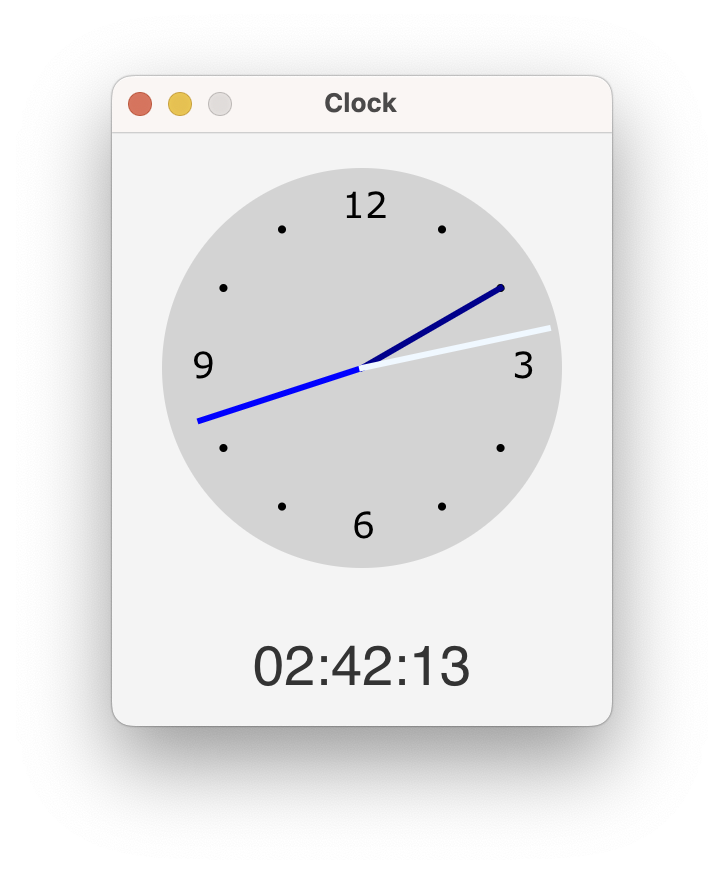

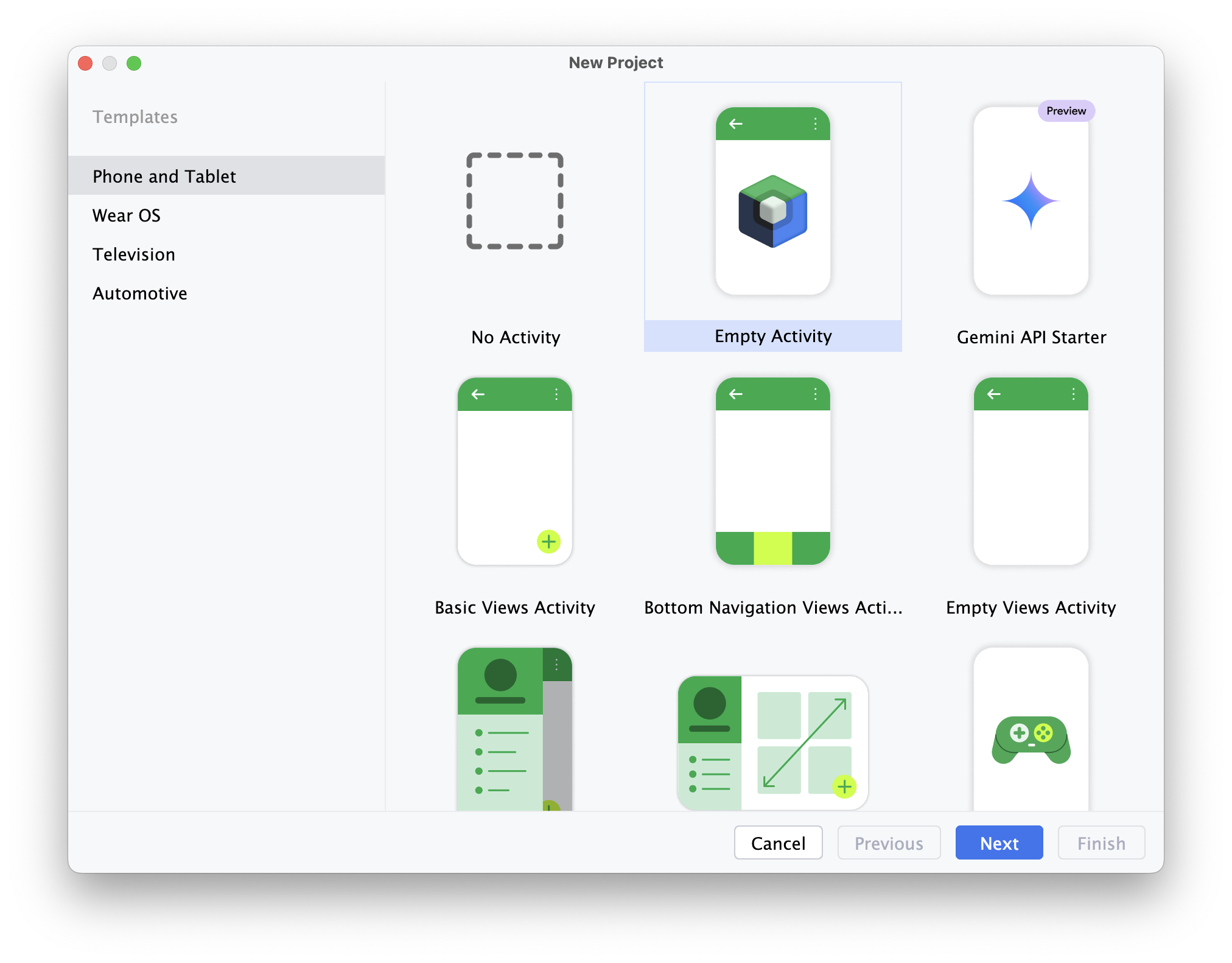

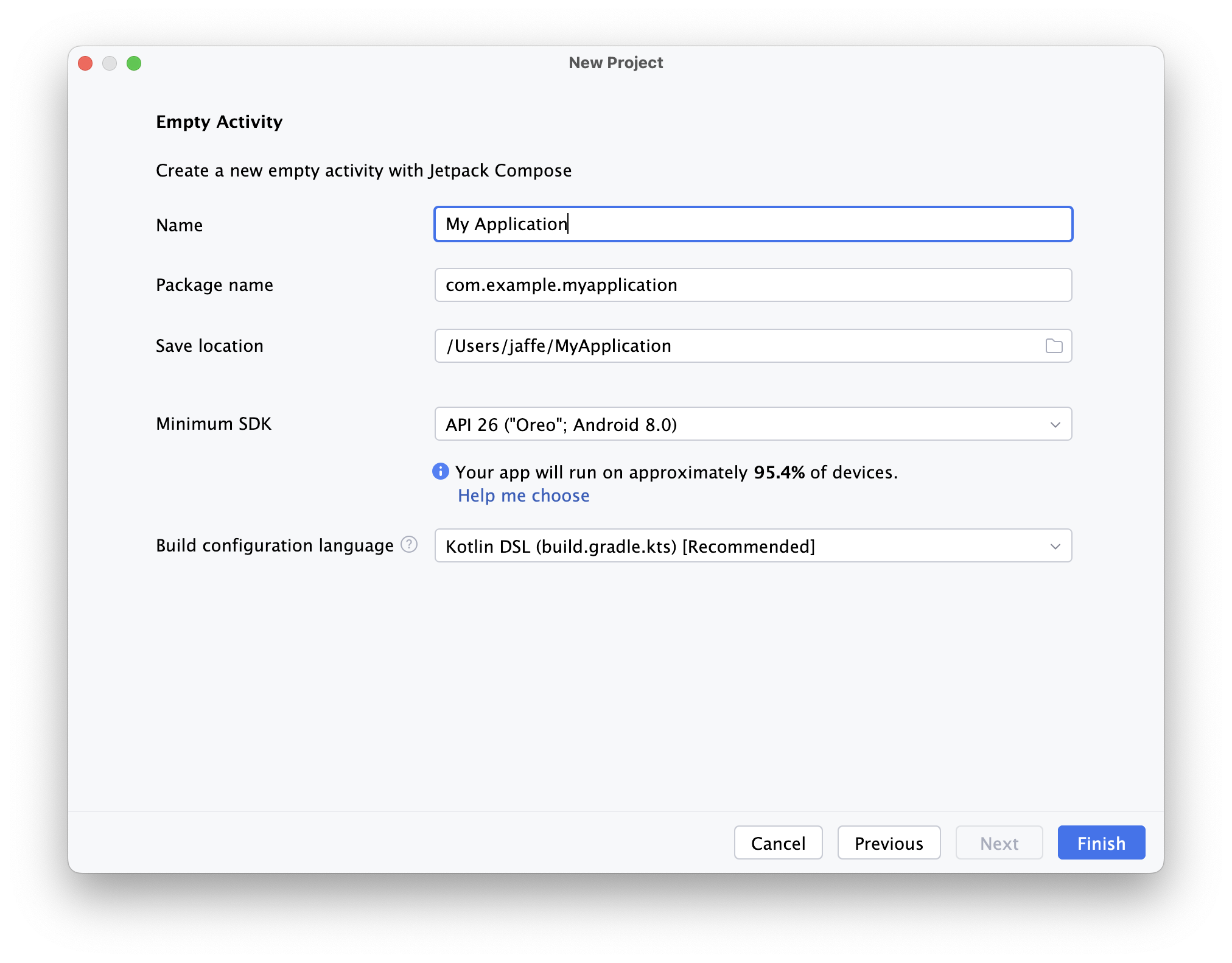

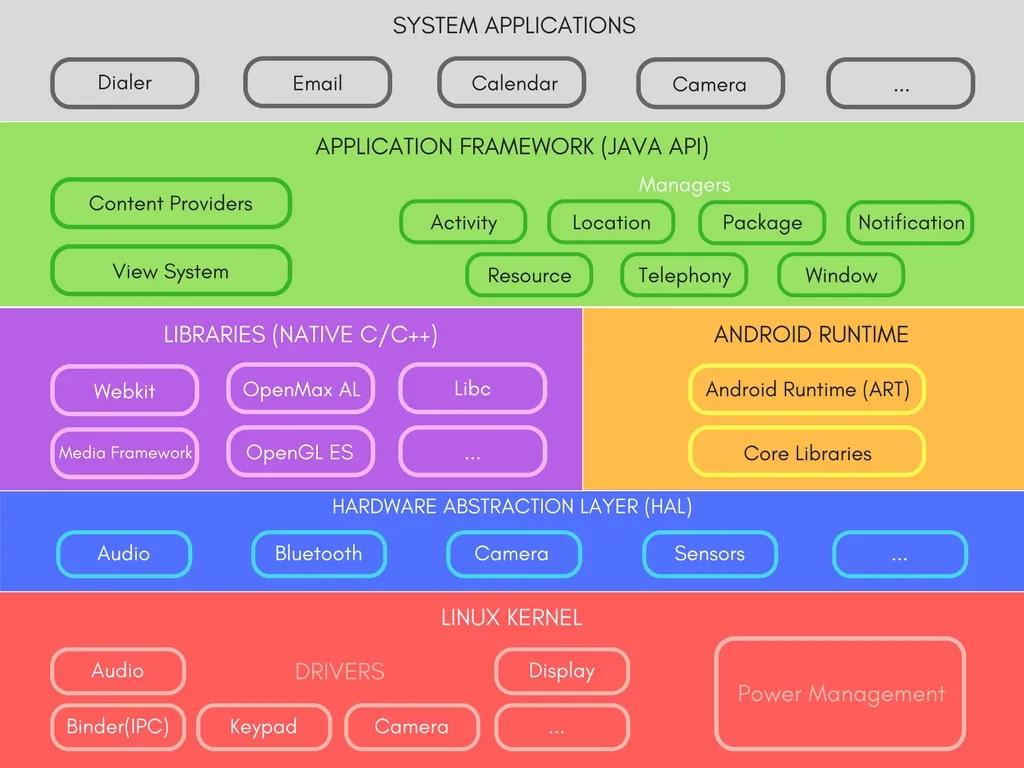

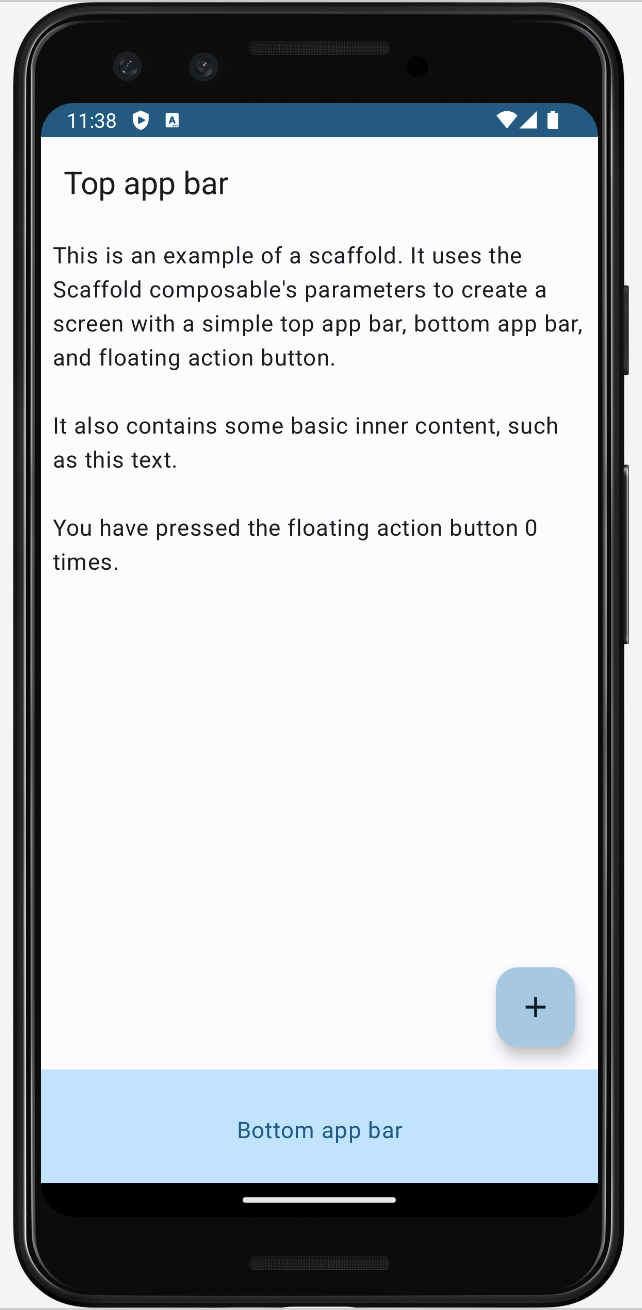

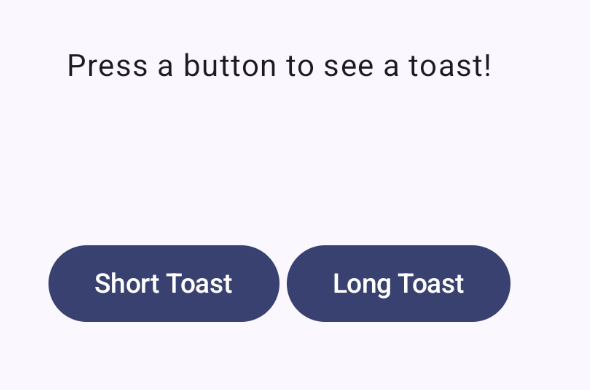

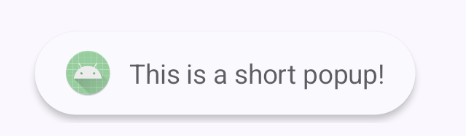

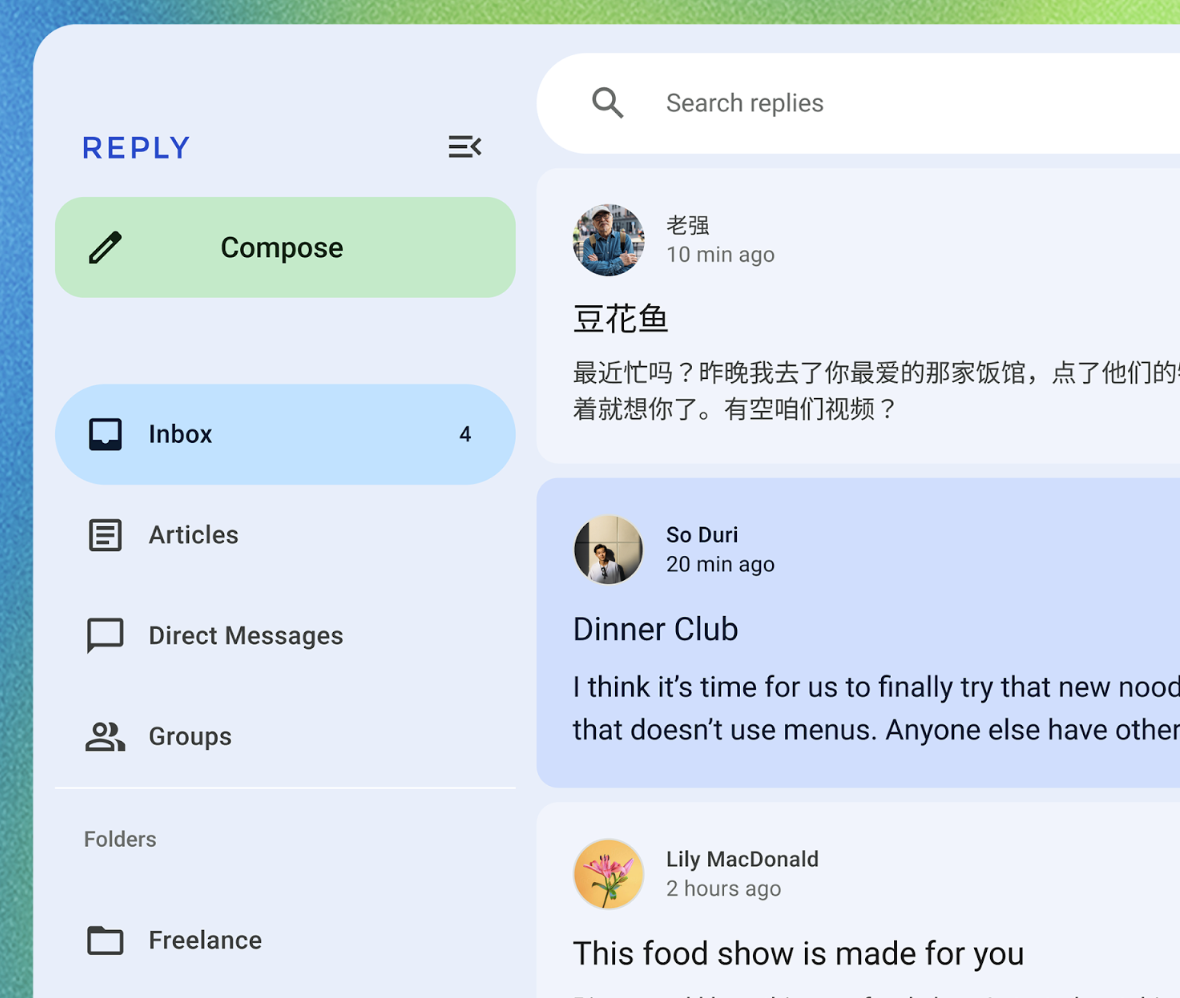

We are designing and building graphical applications that leverage cloud services and remote databases. The project should be a non-trivial Android or desktop application that demonstrates your ability to design, implement, and deploy a complex, modern application.

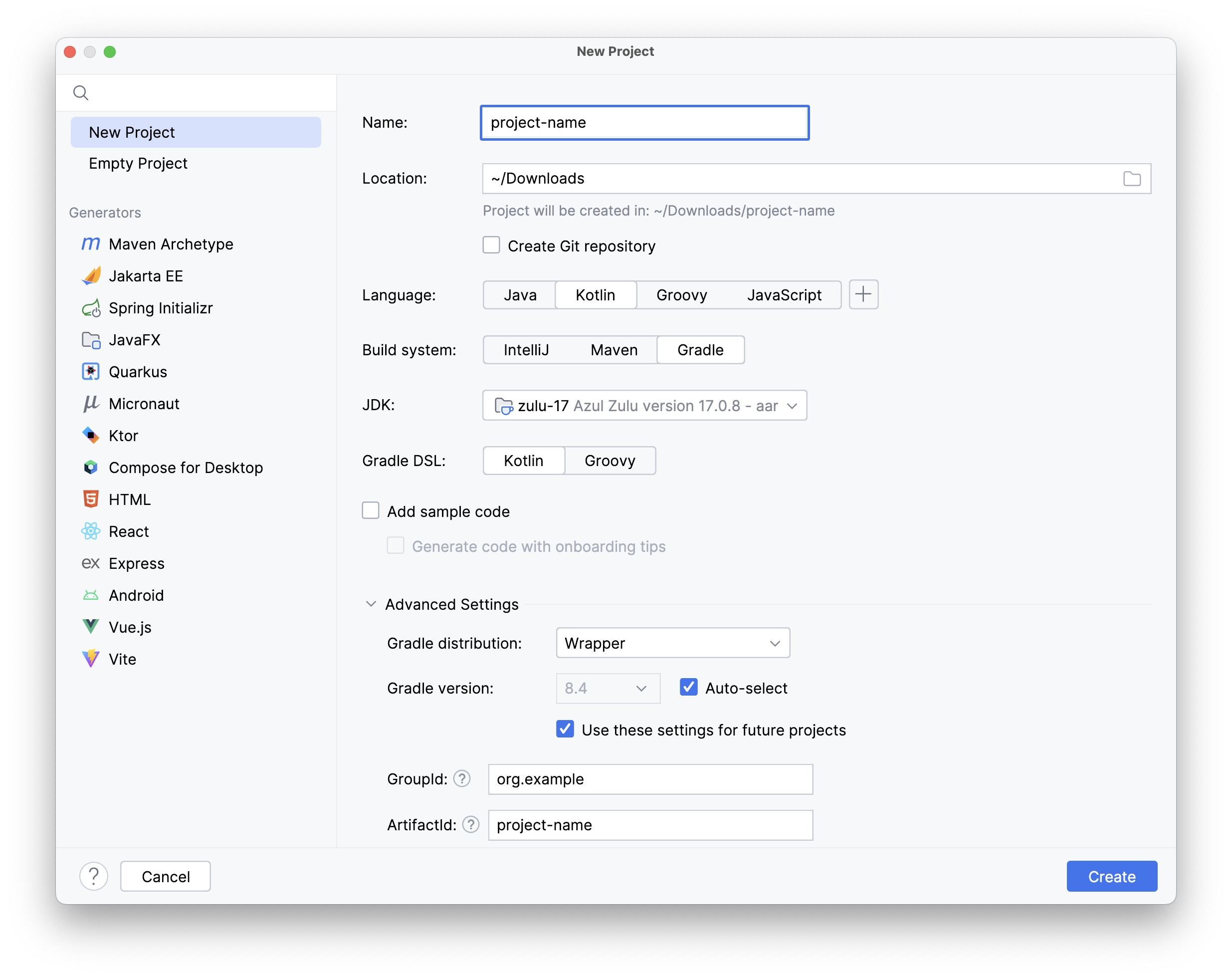

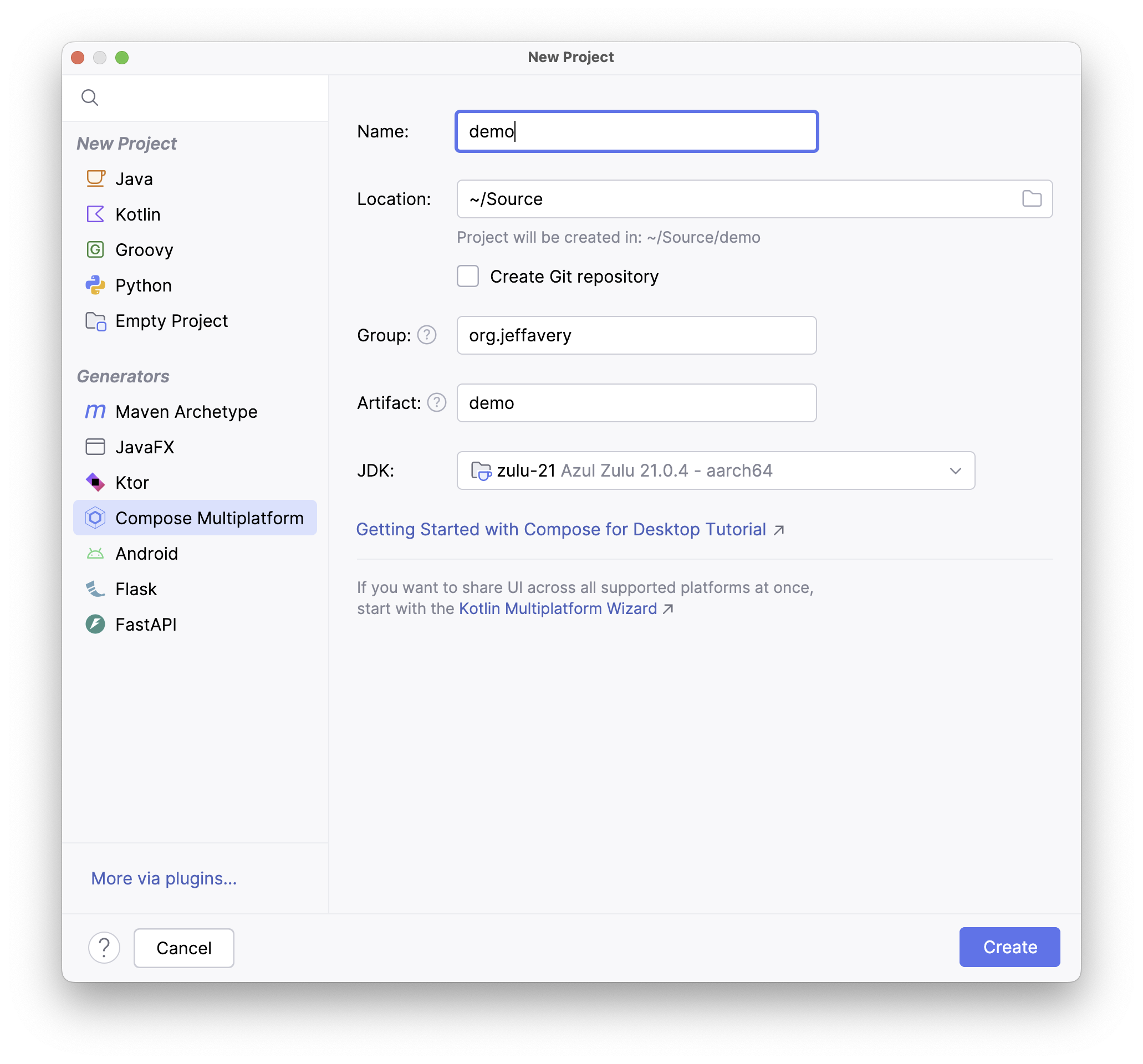

You must use the technology stack that we present and discuss in-class:

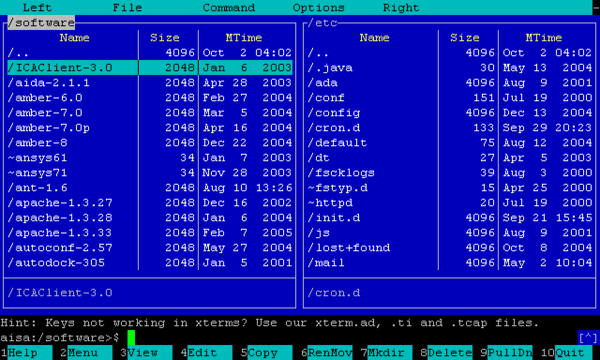

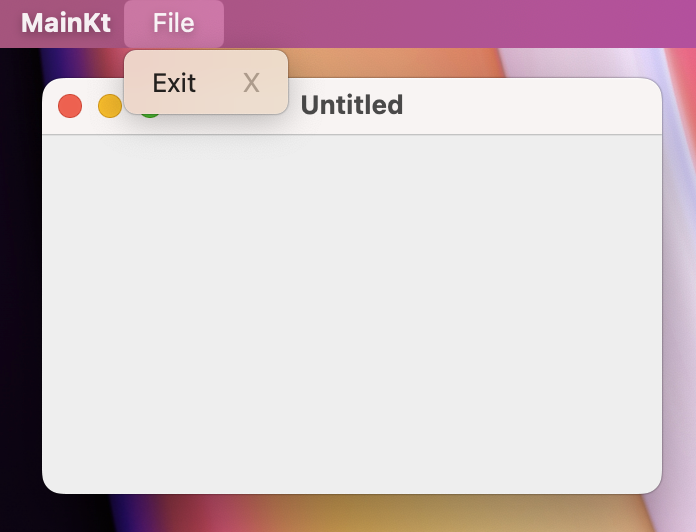

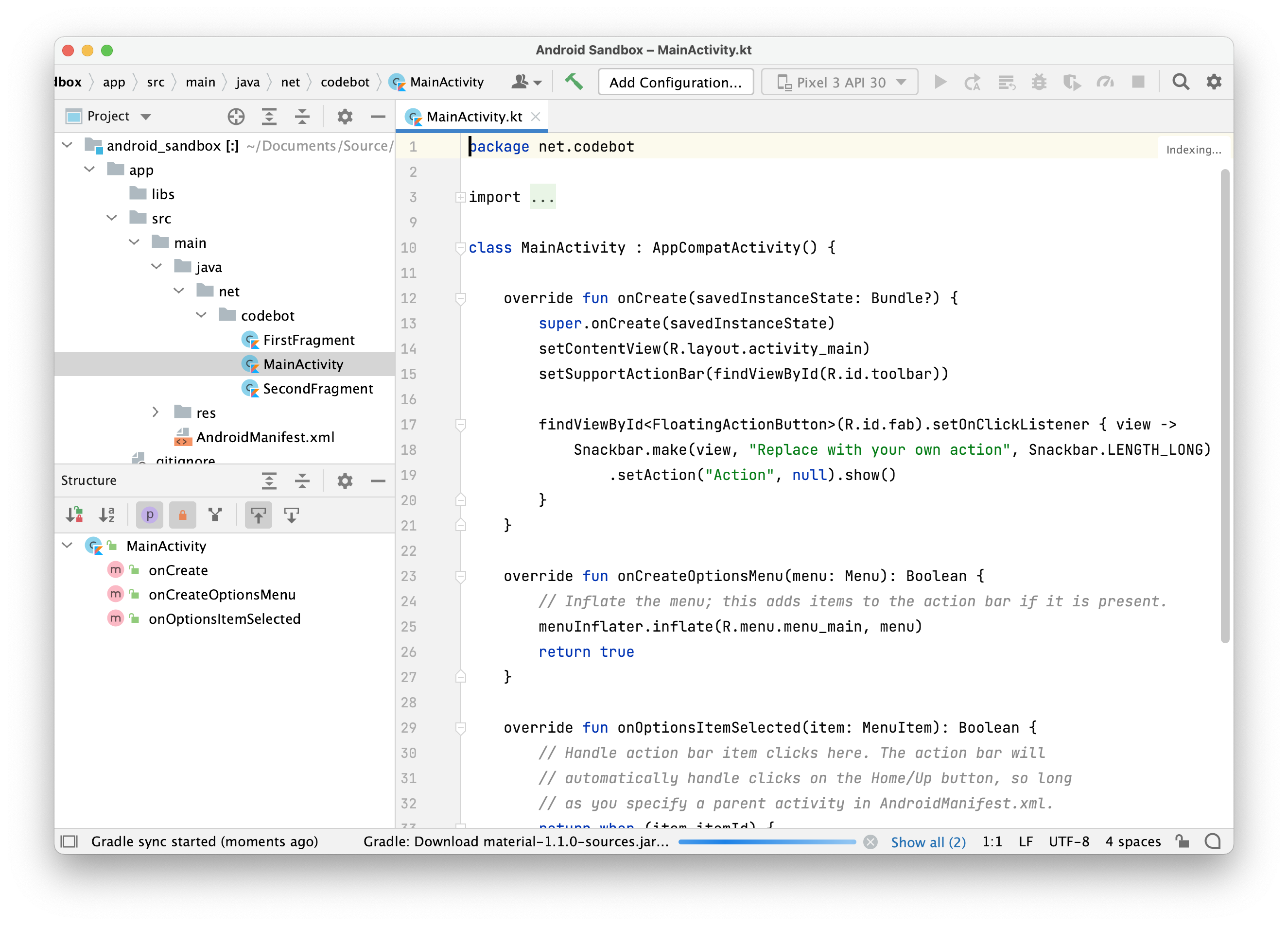

- GitLab for storing all of your project materials, including documentation, source code and product releases. All assets and source code must be regularly committed to GitLab. See specific requirements as the course progresses.

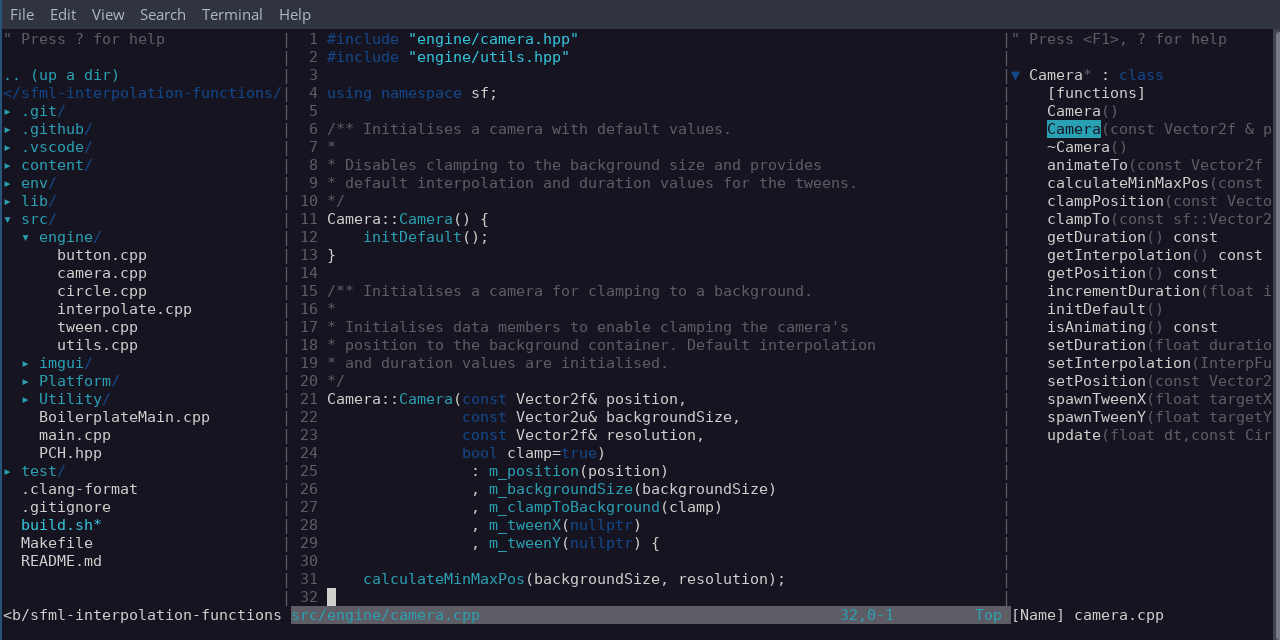

- Kotlin as the programming language for both front-end and back-end. No other languages are allowed.

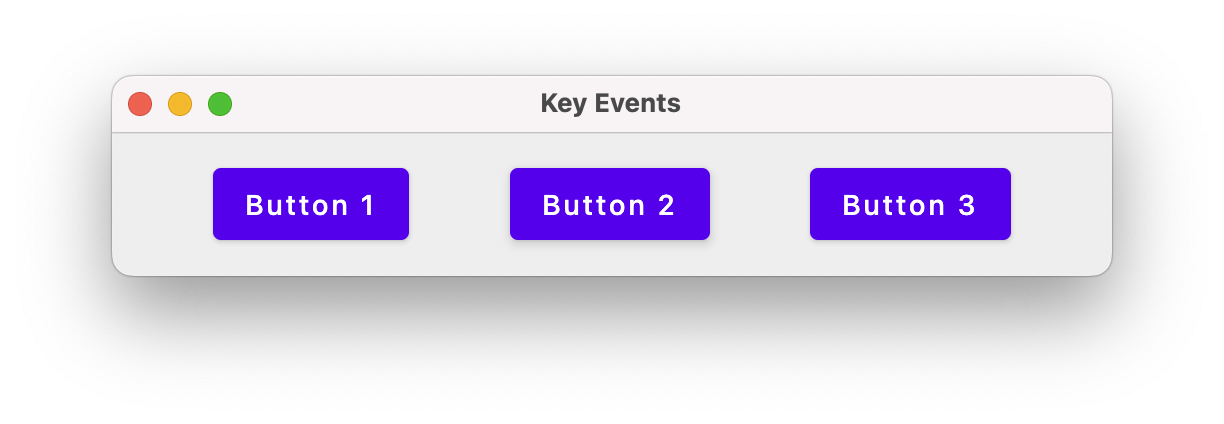

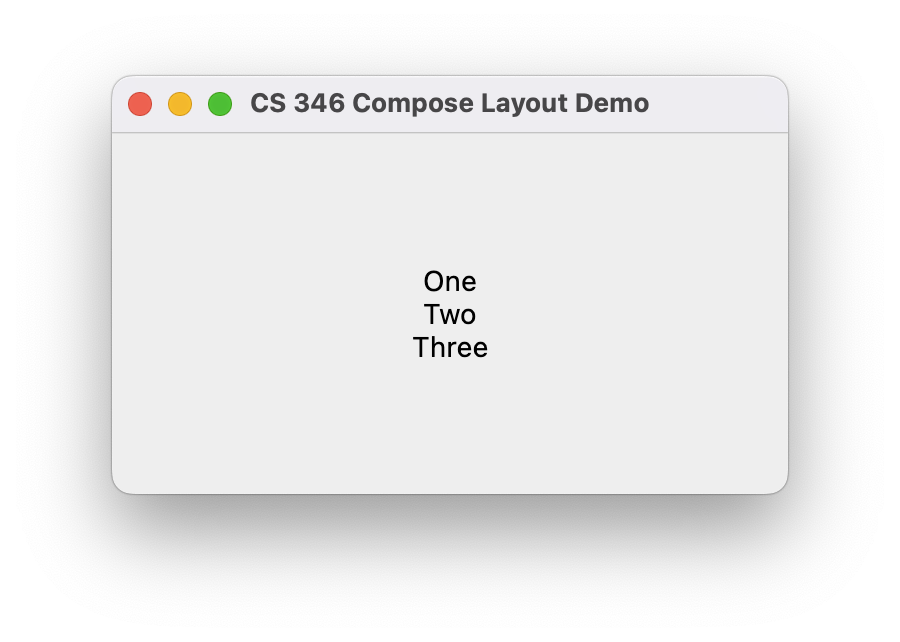

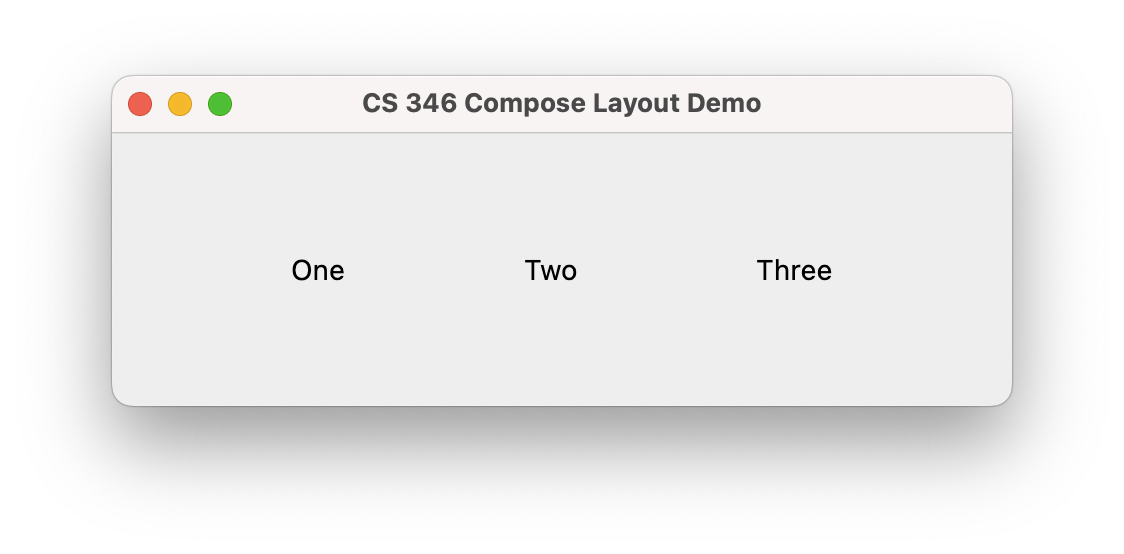

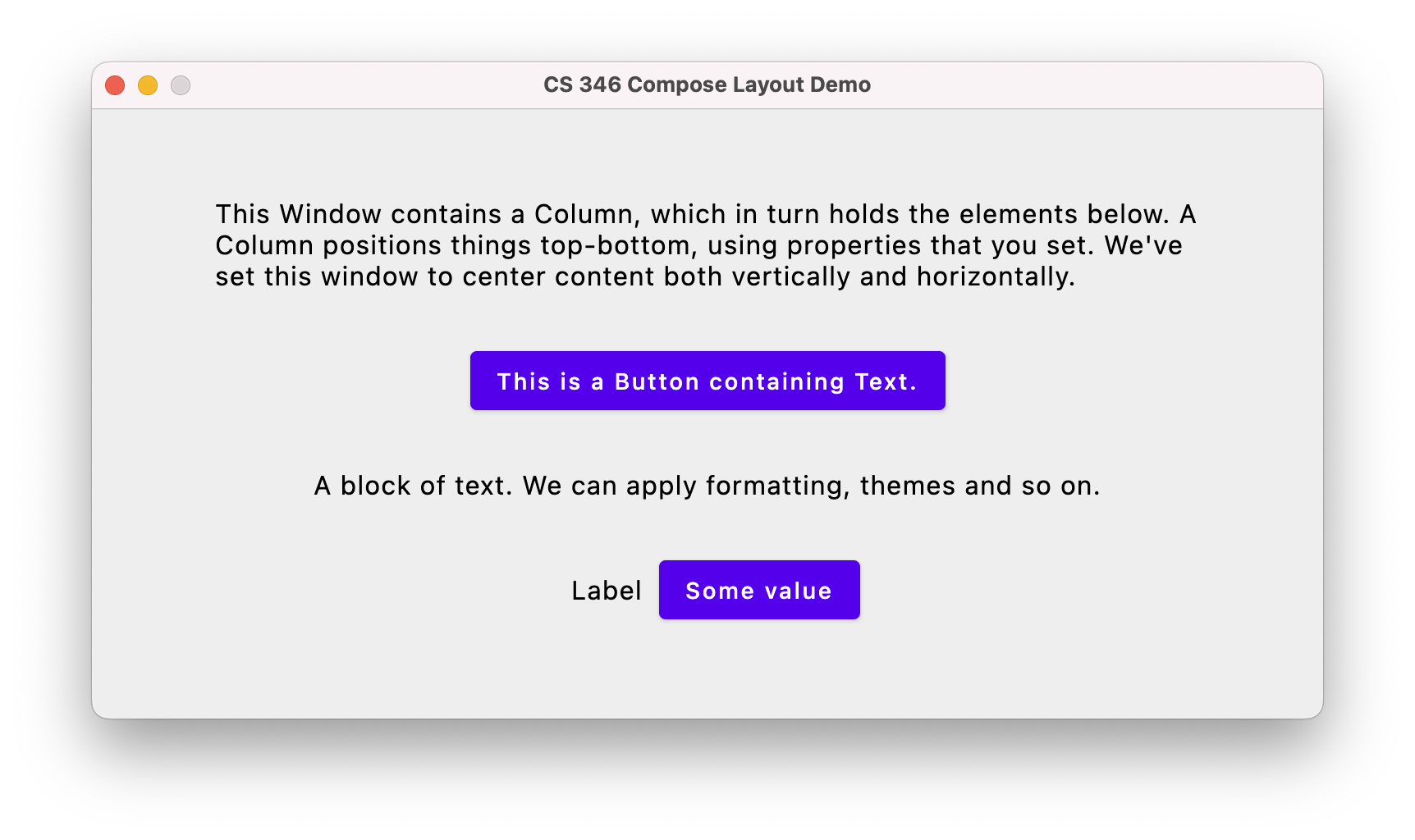

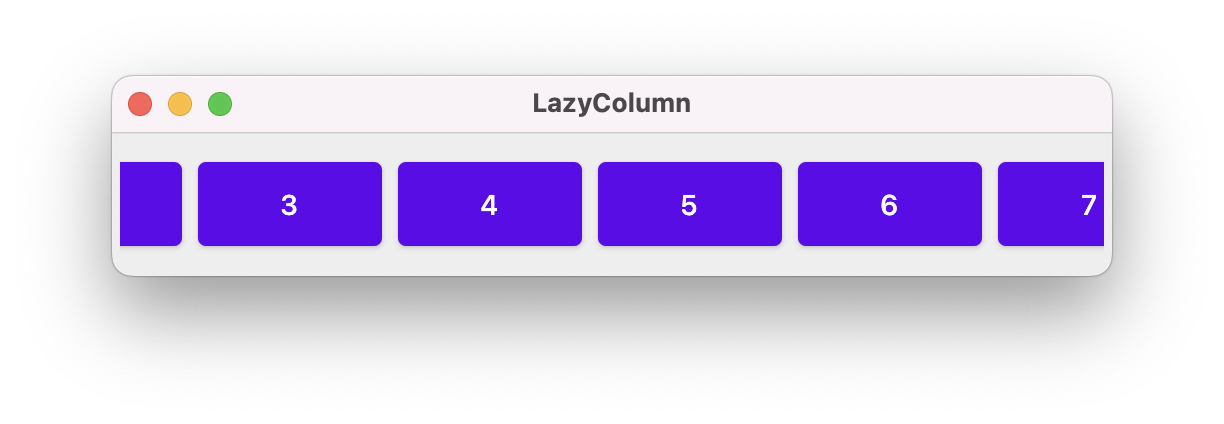

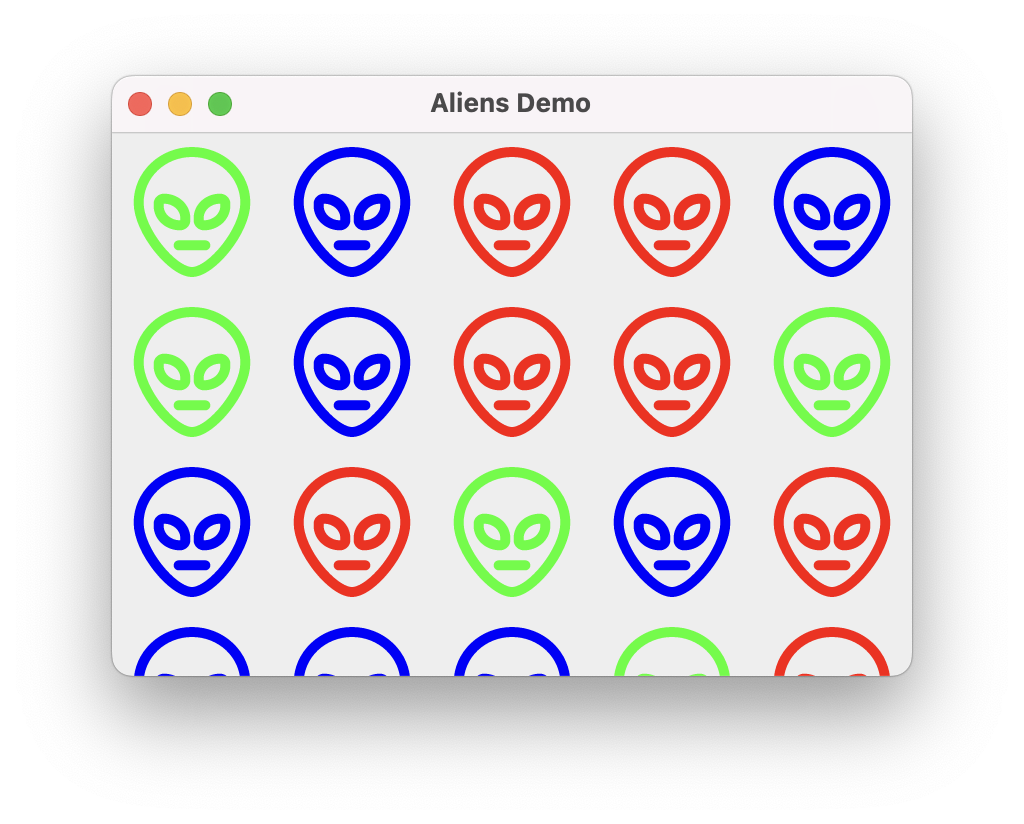

- Jetpack Compose or Compose Multiplatform must be used for building graphical user interfaces.

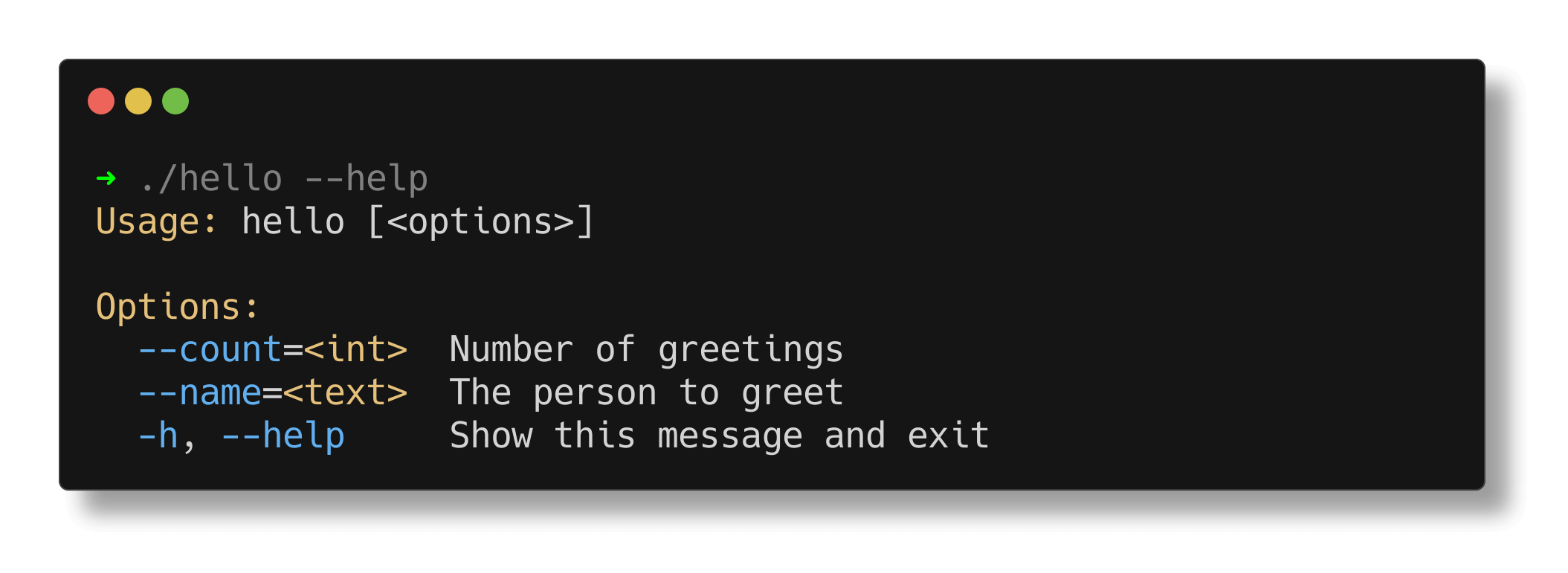

- Ktor for consuming a web API or general networking requirements. Note that for a hosted service, you may instead use their native SDK - see below.

- The kotlin-test classes and JUnit for writing tests.

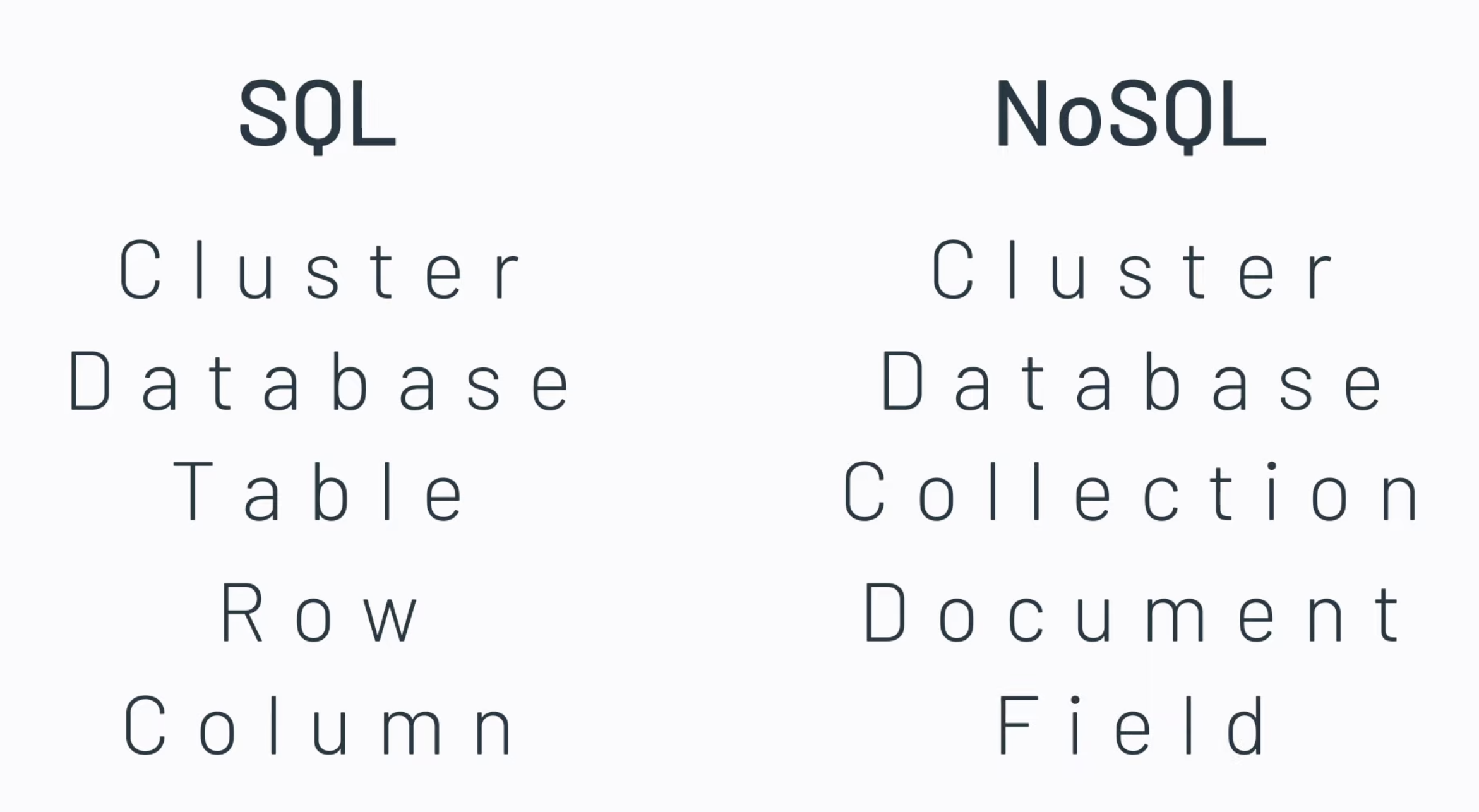

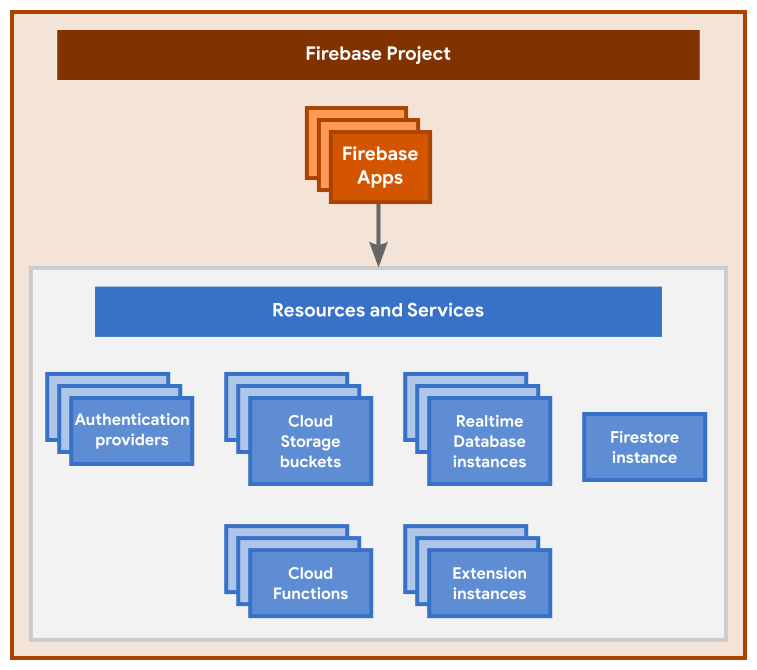

You may choose any hosted cloud/db provider. The following have been used successfully in past offerings of this course, and are recommended: Firebase, Supabase, and MongoDB.

- To connect to these platforms, you are allowed to use either their native SDKs, or Ktor if they support it.

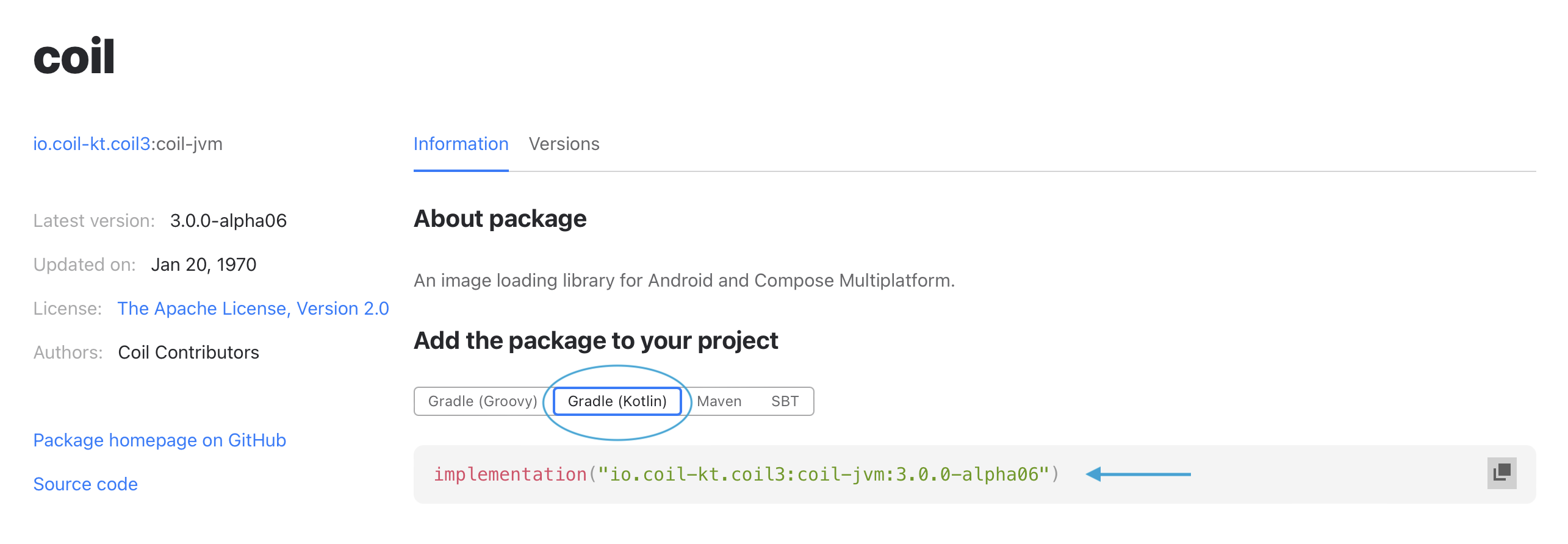

Additional libraries may be used, provided that you follow the course policies.

You are also expected to leverage the concepts that we discuss in class:

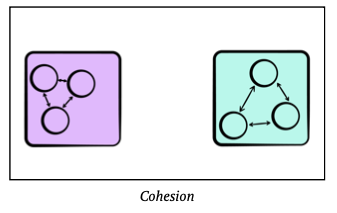

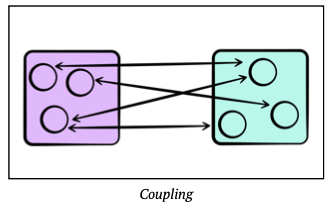

- You should demonstrate design principles that we discuss in lectures.

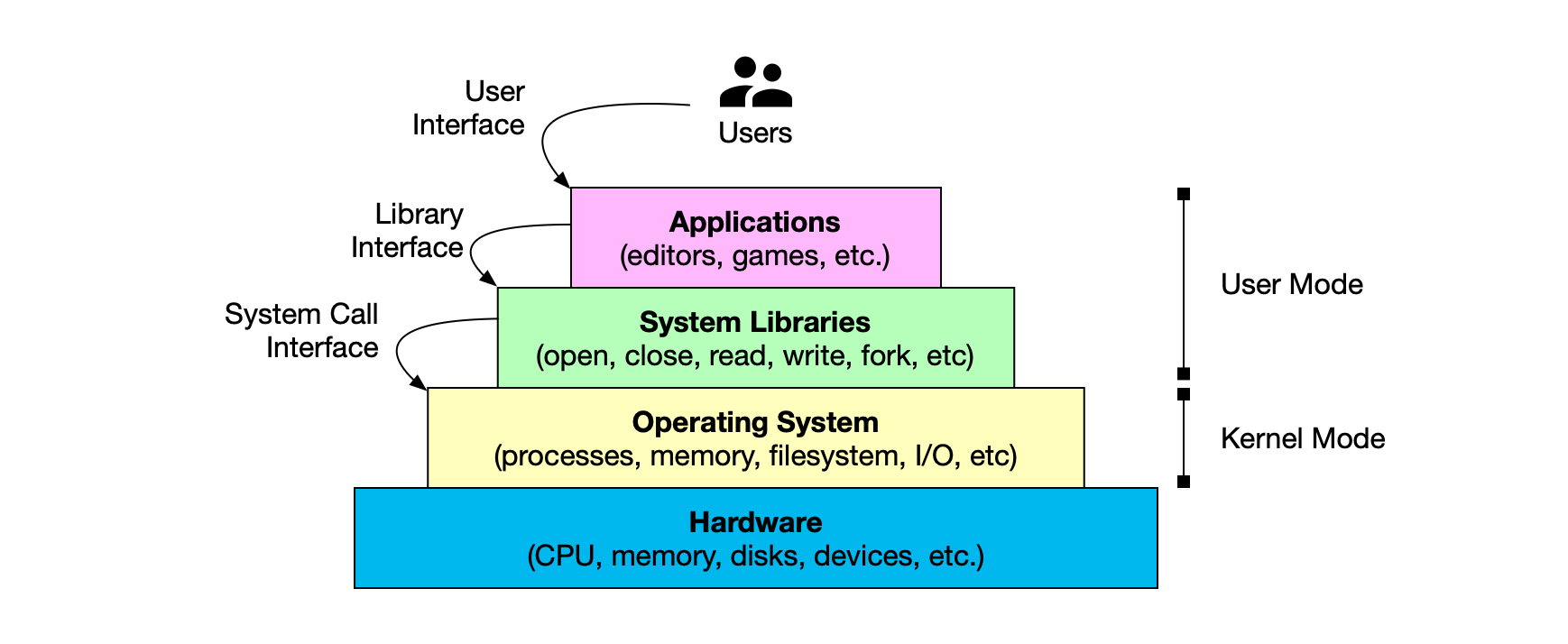

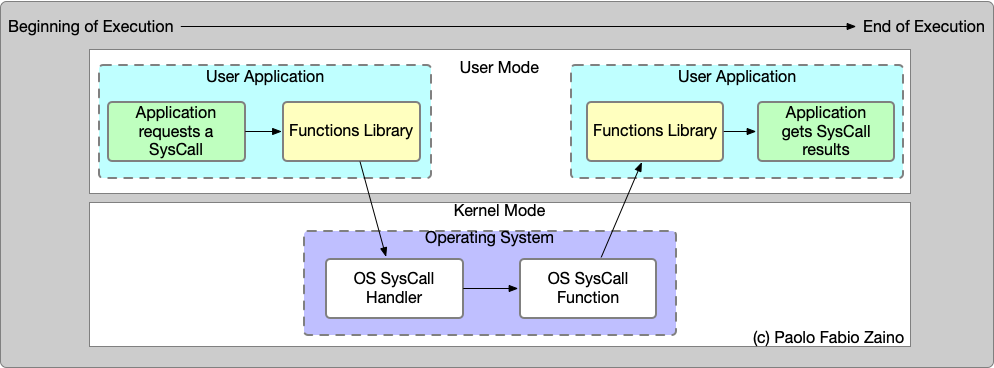

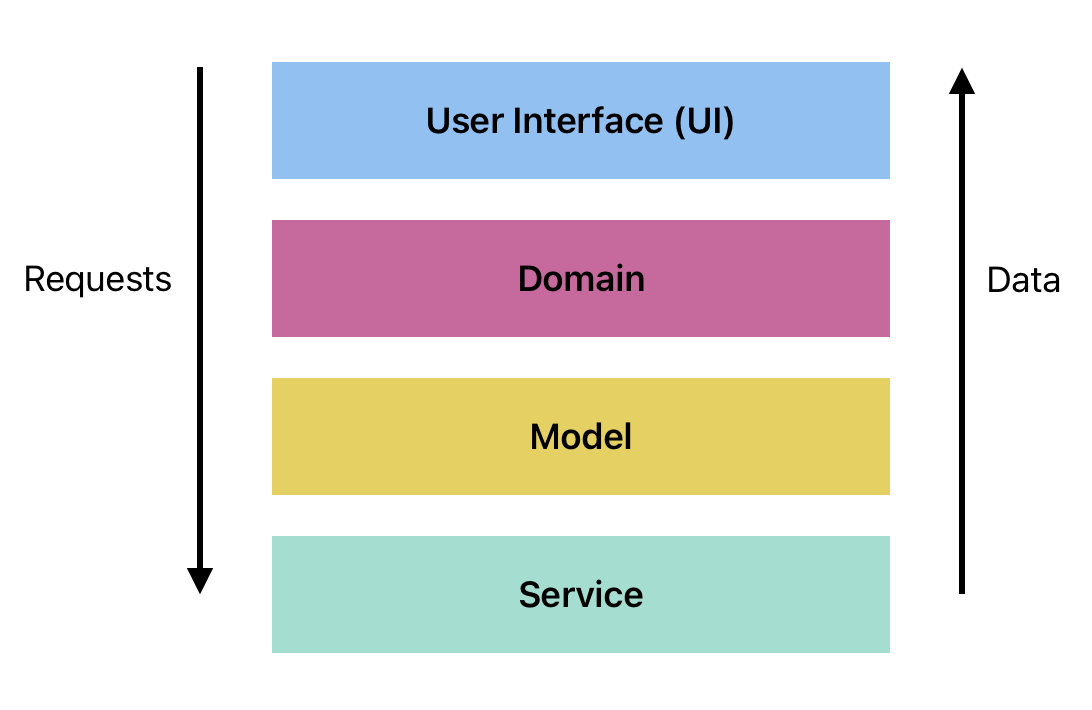

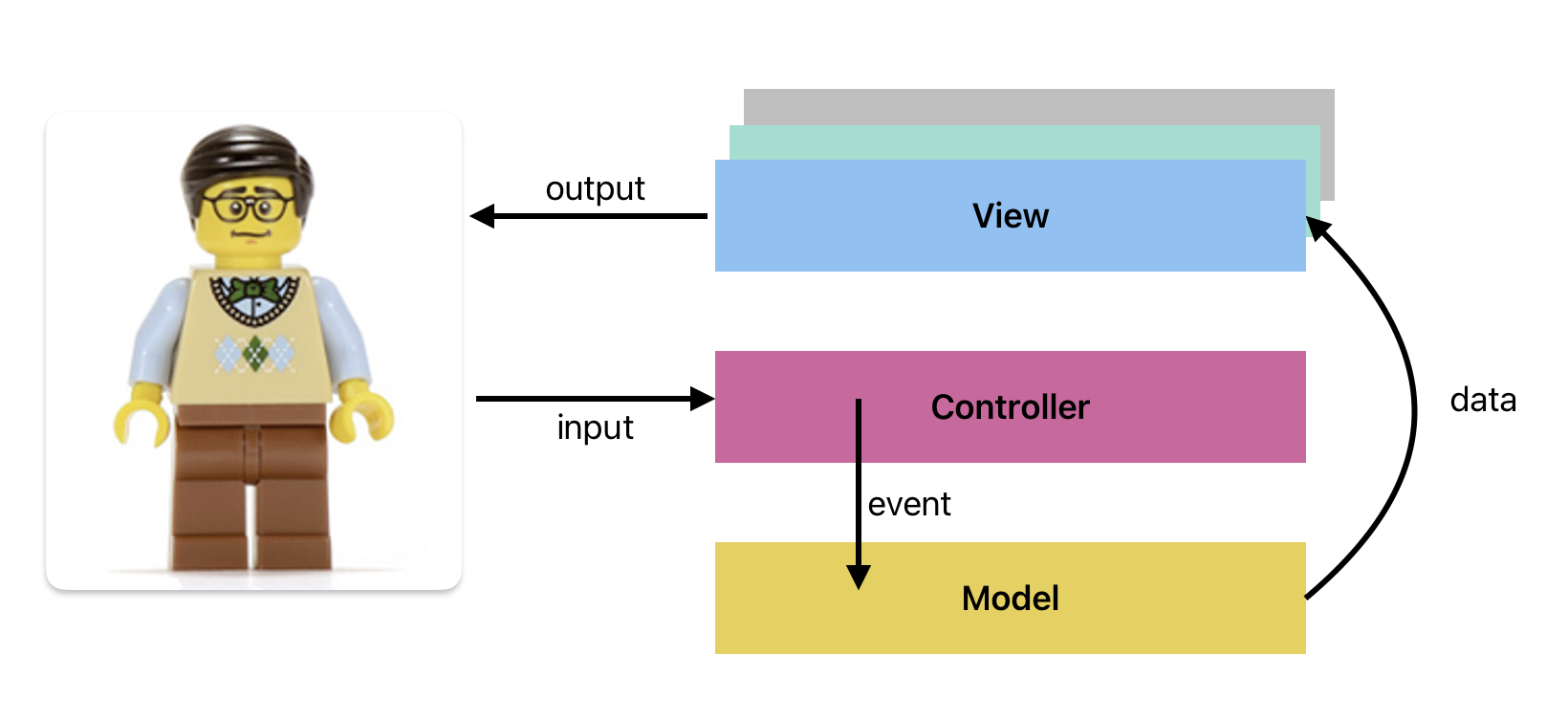

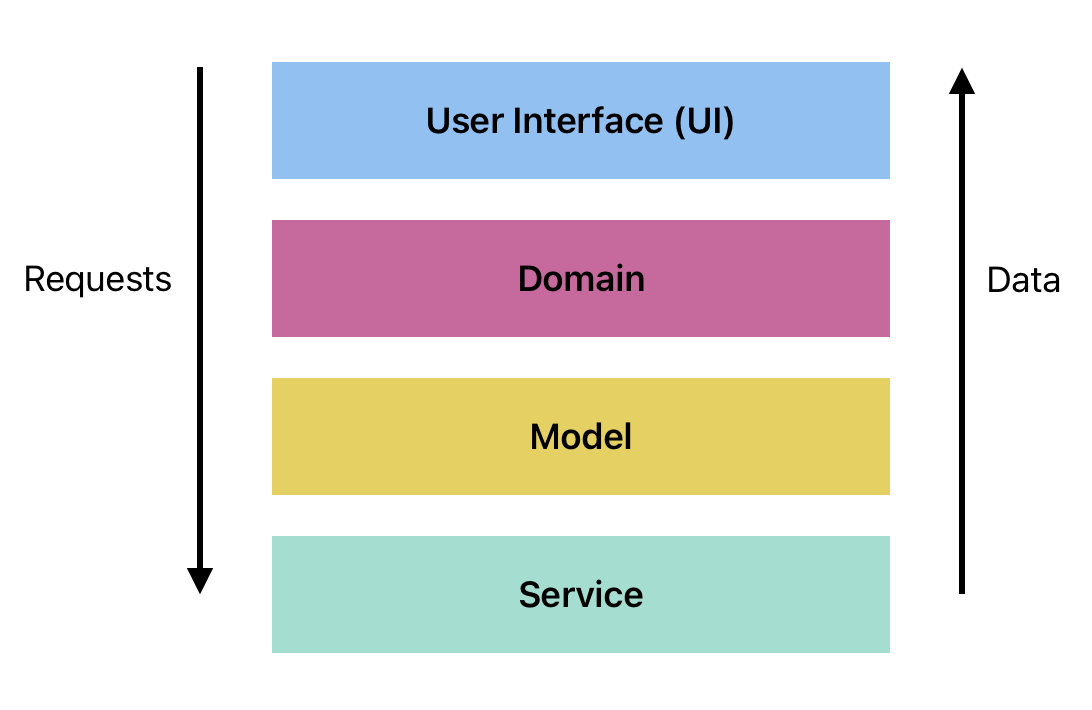

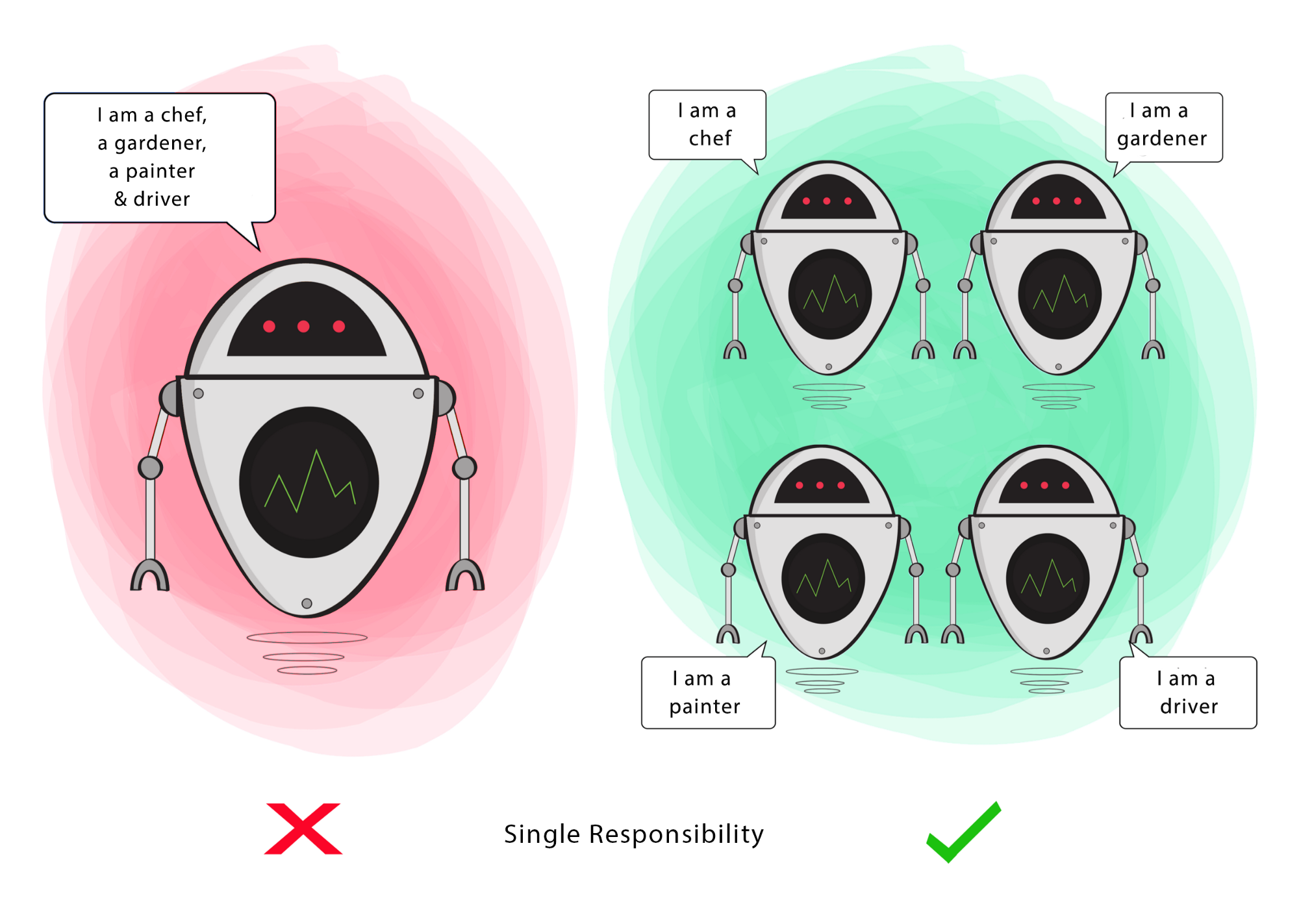

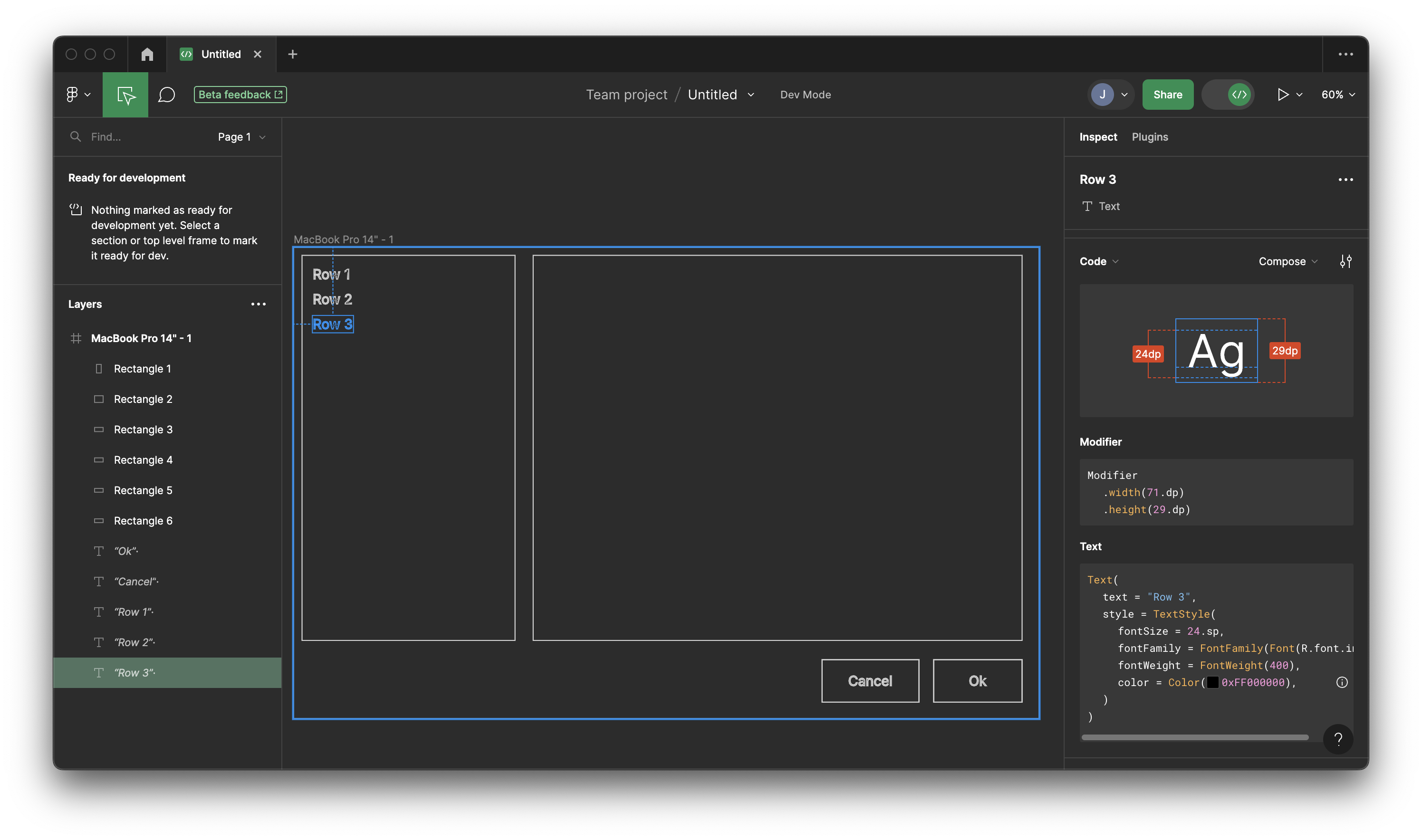

- You must build a layered architecture, using MVVM, MVI or another pattern we discuss.

- You are expected to write and include a reasonable number of unit tests for your application.

You will be assessed based on:

- How well you define the problem you are solving, and understand the users you are solving it for.

- How well you design features to address that problem and the quality of your design.

- The clarity, quality and ease-of-use of your application.

- Your ability to work as a team to incrementally deliver features over time.

Requirements

In your Project Proposal, you will define your target users, a problem to address, and a set of custom features that you intend to implement to address that problem. The details will be unique to each team, but should be non-trivial features that demonstrate your ability to design and implement complex functionality to address a problem. You should be able to articulate how these features make your product different or better than existing applications.

Your application should minimally consist of a desktop or Android application with the following requirements.

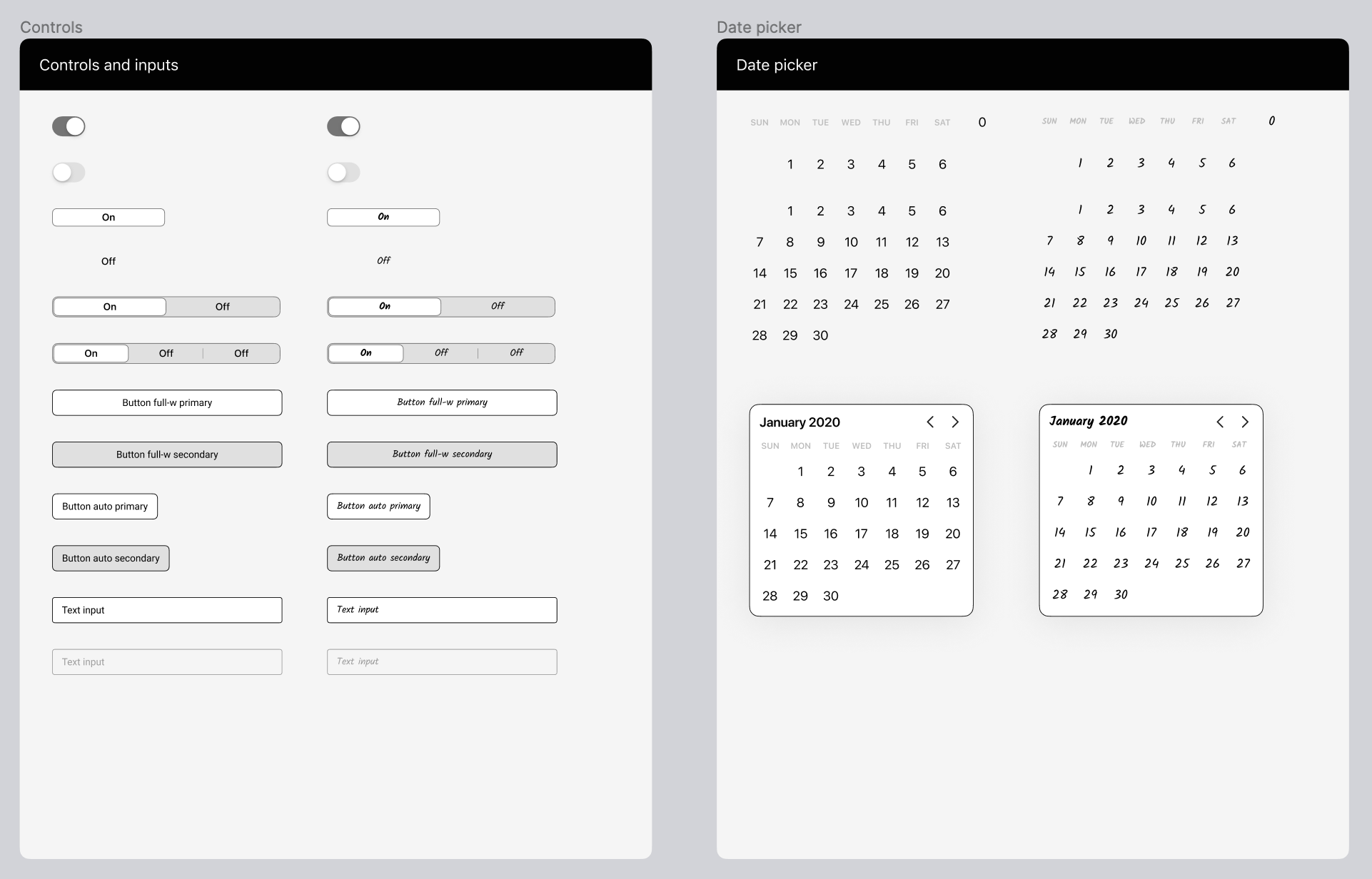

1. Graphical user interface

Your application must have a graphical user interface, created using either Jetpack Compose (Android) or Compose Multiplatform (Desktop).

Your application should include at least three different screens, and you must support navigating between them as part of your normal application functionality.

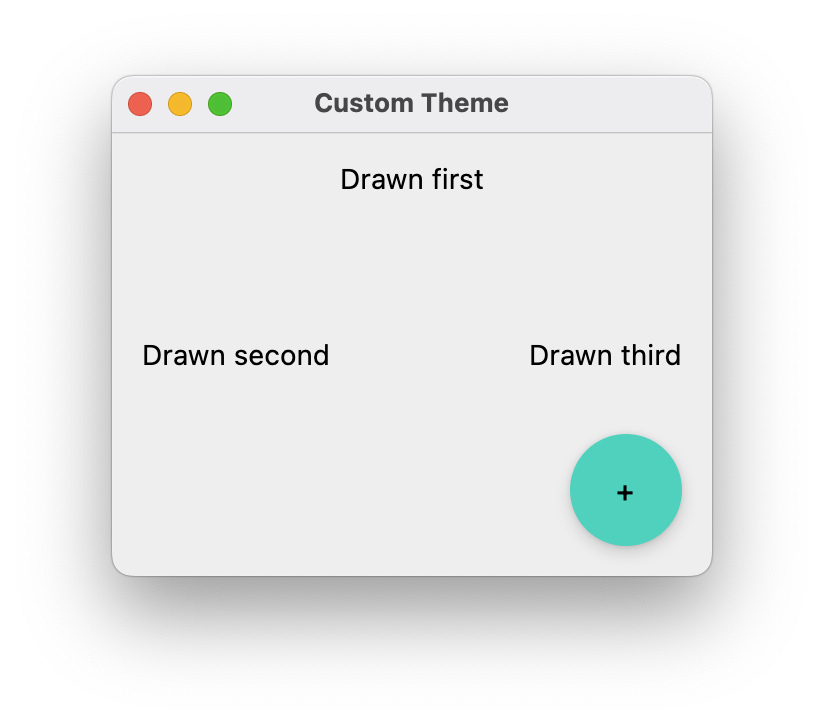

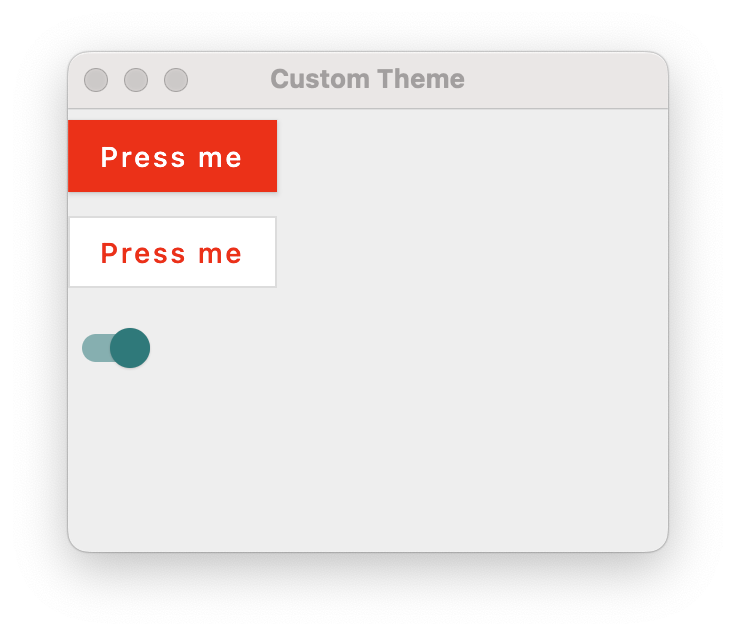

You should have a custom theme that you have produced i.e., custom colors, fonts and so on. Marks will be deducated if you simply use the default Material theme that is included in Compose.

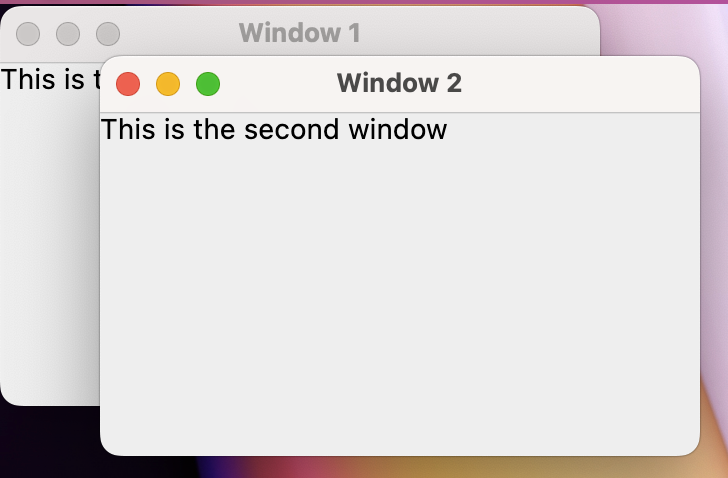

For a desktop application:

- Your screens should be windowed.

- Users should be able to minimize, maximize and resize windows as you would expect for a desktop application, and windows should have titles.

- Your application should have a custom icon when installed.

For an Android application:

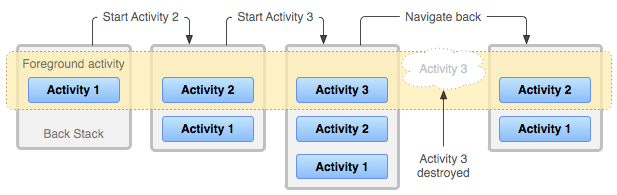

- Users should be able to use normal gestures to navigate between screens. You must support back-stack navigation.

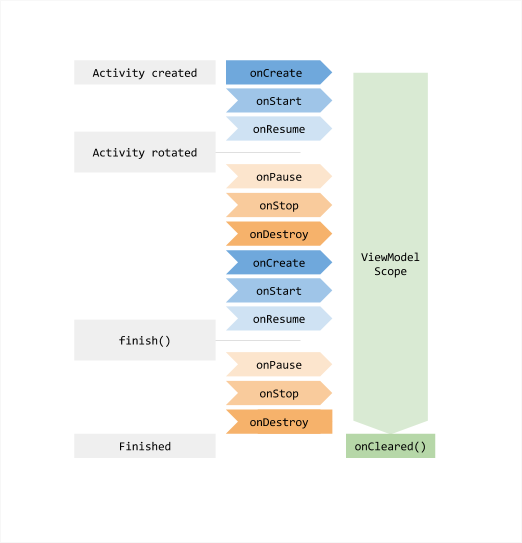

- When rotated, your screens should rotate to match the orientation, and layouts should modify themselves to fit the screen dimensions in either orientation.

You are expected to produce prototypes for these screens as part of your initial design.

2. Multi-user support

Your application should support multiple users. Each user should be able to login, and see their own specific data and application settings.

- Each user should be able to create an account that represents their identity in the application.

- Accessing user data must be restricted by account i.e. users can only see their own data, unless a feature specifically reveals shared data e.g., sharing a recipe among friends using the application.

- You should support standard account functionality:

- Each user should be able to create their own account, and login/logout using that account.

- You should perform standard validation when creating accounts or entering information e.g., do not allow duplicate usernames; mask out the password when entered; passwords must contain special characters.

- Credentials should persist between sessions i.e., your user should not have to login each time they launch the application.

3. Networking and security

You must use either Ktor or a native platform SDK to access your hosted database/platform.

- Network requests do not need to be encrypted, but you should require authentication for any access (i.e., the user has to login before they can access any service or data).

- You should leverage existing authentication services where possible e.g., Firebase Auth or Supabase Auth. Do not “roll your own” insecure system!

4. Data storage

Your application must manipulate and store data related to your problem e.g., recipe for a recipe tracking application; user profile information needed to login; the user’s choice of theme to use in the UI.

Database requirements

- You must store your application data in a hosted database of your choice. This can be a dedicated instance e.g., one that you have installed and control yourself, or a hosted database made available through a provider like AWS, Firebase or Supabase.

- Your database must be configured for network access, and be accessible over the network i.e. not installed locally on the same machine that you use for demos.

- Use of a custom SDK to access your database is allowed, as is a database framework like Room or Exposed.

5. Custom features

Using the guidelines above, you are expected to propose, design and implement multiple features that solve a problem for your users (see the Design thinking lecture for details).

- Your application must manipulate and store some information related to your problem e.g., recipes for a cooking application, markdown documents for a journaling application.

- Your functionality must be more complex than just simple CRUD operations. e.g., your recipe app should also generate a shopping list, or store user reviews of recipes, or suggest alternate ingredients.

Stuck on what to build? See picking a project page for some project suggestions.

Changes

- Jan 8 2025: Original publication.

- Feb 13 2025: Specified that you are allowed to use platform SDKs for hosted service platforms. Removed local database requirement.

Forming Teams

The first, and most important thing to do is to find teammates! Ideally, you should form a project team in the first week of the course.

Rules around team formation

- Teams must consist of four people.

- Team members must all be enrolled in the same sections of the course.

- Everyone must commit to attending team activities, including attending in-person lectures.

Note that you CANNOT take this course if you are unable to attend in-person. This is not a course to take remotely, while on a work term.

Find other people

How do you find team members, or find an existing team to join?

- Join friends who are also taking the course! If you are in different sections, speak with the instructor to see if you can be moved to the same section.

- Use the Search for Teammates post on Piazza to introduce yourself and find teams that are looking for people.

- If you’re in-class, introduce yourself to people sitting near you! We’ll even give you time at the end of lectures to meet.

This course moves quickly, and we need everyone to participate in order for it to work. We will do our best to help you find a team, but you also need to be active participants in the process!

In extreme cases, failing to join a team may result in you being removed from the course.

Discuss how to work together

Before agreeing to work together, make sure that you agree on the basics:

- Are you all registered in the same sections?

- Are you all able to attend class? Can everyone participate equally?

- Do you have free time outside of class to meet?

Make sure to review the team contract guidelines. You will be required to complete a team contract as part of your Project Setup milestone.

Register in Learn

Once you have a team formed, register yourselves on Learn:

- Navigate to

Connect>Groupsand choose an empty team from the list - Add yourself and your team members.

Picking a topic

Before proceeding. make sure to review the project requirements!

Every term, at least one team is “surprised” to learn that they were supposed to use Kotlin, or Compose for the UI, or write unit tests. All of these things are clearly specified in the requirements, so make sure that you understand that document. Ask your instructor if anything is unclear.

We recommend the following steps:

- Have a discussion with your team about any personal goals that you might have. For example, if you’re interested in learning about a particular technology e.g., database or user interfaces, this might be a good opportunity to explore that.

- Brainstorm! Try and identify potential users, and problems that you could solve for them. If you need inspiration, look at existing applications and try and improve on those. This is important: identify the need first, and then second think of how to solve that need.

- Record all of your ideas. You want to capture everything, and as they say, “there is no bad idea” at this point!

- Discuss as a team and narrow down your ideas to a single problem/solution that you would like to address.

Public data/APIs

If you’re stuck for an idea, here are some interesting public APIs that you might be able to leverage. Build an application to track a comic-book collection, or a cool new weather app for your phone!

- Stack Overflow API: https://api.stackexchange.com/

- UWaterloo Open API: https://openapi.data.uwaterloo.ca/api-docs/index.html

- Notion API: https://developers.notion.com/

- Pokemon API: https://pokeapi.co/

- Marvel Developer Portal: https://developer.marvel.com/

- REST Countries: https://restcountries.com/

- NASA Open APIs: https://api.nasa.gov/

- Weather API: https://openweathermap.org/api

- Market Data API: https://polygon.io/

- News API: https://newsapi.org/

- YouTube API: https://developers.google.com/youtube/?ref=apilist.fun

- OMDb (Open Movie Database) API: https://www.omdbapi.com/

- DeckOfCards API: https://deckofcardsapi.com/

- Open Library API: https://openlibrary.org/developers/api

ChatGPT project ideas

More ideas, courtesy of ChatGPT. Choose an idea that aligns with your interests and skills! If you use one of these, you will likely need to add features to make your application stand out.

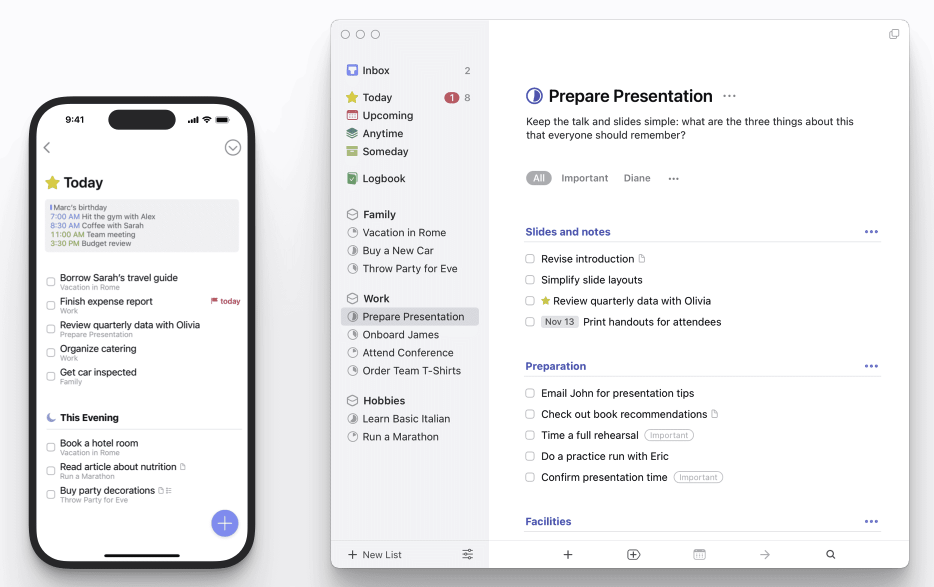

- Task Manager: Create a desktop task manager application that allows users to organize their tasks, set reminders, and prioritize tasks based on deadlines or importance. To make it really interesting, integrate with their Google Calendar.

- Note-taking App: Develop a note-taking application where users can create, organize, and search through their notes. Include features like rich text formatting, image embedding, tagging, and synchronization of notes across devices.

- Expense Tracker: Build a desktop expense tracker to help users manage their finances. Include features like expense categorization, budget tracking, and generating reports or visualizations. Let them export/import data from their banking application.

- Password Manager: Design a password manager application that securely stores and manages users’ login credentials for various accounts. Implement encryption and features like password generation and synchronization. Encrypt everything!

- Weather App: Create a desktop weather application that provides users with real-time weather information, forecasts, and weather alerts for their location or specified locations. Provide alerts based on conditions that they set e.g., “it’s below 10 degrees, so wear a coat”.

- Fitness Tracker: Build a fitness tracker application that helps users track their physical activity, set fitness goals, and monitor their progress over time. Include features like exercise logging, calorie tracking, and workout planning.

- Recipe Organizer: Develop a recipe organizer application where users can save, categorize, and search for recipes. Include features like meal planning, ingredient shopping lists, and recipe sharing.

- Code Snippet Manager: Design a code snippet manager application that allows developers to store, organize, and search through their code snippets. Include features like syntax highlighting, tagging, and integration with code editors.

- File Encryption Tool: Create a desktop application for encrypting and decrypting files to ensure users’ data privacy and security. Implement strong encryption algorithms and user-friendly interface.

- Screen Capture Tool: Build a screen capture tool that allows users to capture screenshots or record screen activities. Include features like annotation tools, customizable capture settings, and sharing options.

- Music Player: Develop a desktop music player application with features like playlist management, equalizer settings, and support for various audio formats. Optionally, include online streaming capabilities.

- Calendar App: Design a calendar application that helps users manage their schedules, appointments, and events. Include features like multiple calendar views, event reminders, and synchronization with other calendar services.

- Language Learning Tool: Build a desktop application to help users learn a new language, with features like flashcards, vocabulary exercises, pronunciation practice, and language learning progress tracking.

- Movie tracker: Build a mobile application to track movies that you’ve seen. Import movie data from IMDB, rate them, add comments and share reviews with your friends!

Examples

Sample Projects

Some contrived (but reasonable) examples.

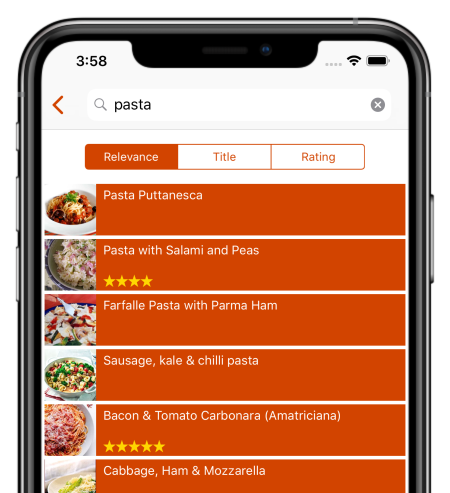

Recipe tracker

Image courtesy of Recipe Keeper

Users

A group of friends that want to cook together e.g., roommates.

Problem

How do you keep track of what recipes you have tried, and which ones each person liked?

Proposal

A recipe planning and tracking application that allows users to:

- Enter recipes (imported from a recipe site or manually entered).

- Browse a collection of recipes, with pictures. Search your recipes and friends recipes.

- Rate recipes, and view what other users have rated.

- Generate a list of “most wanted” recipes, or “most popular” after the group has tried them.

- Extra: track food allergies and support automatic filtering of recipes.

Design

Technical aspects to consider.

- Android application, with screens for all of this functionality

- Multi-user, so each person has a profile (login/logout/edit).

- Local database for caching details (SQLite & file storage)

- Remote database for storing user data and recipes (Firebase)

- OAuth authentication using Google accounts (also Firebase)

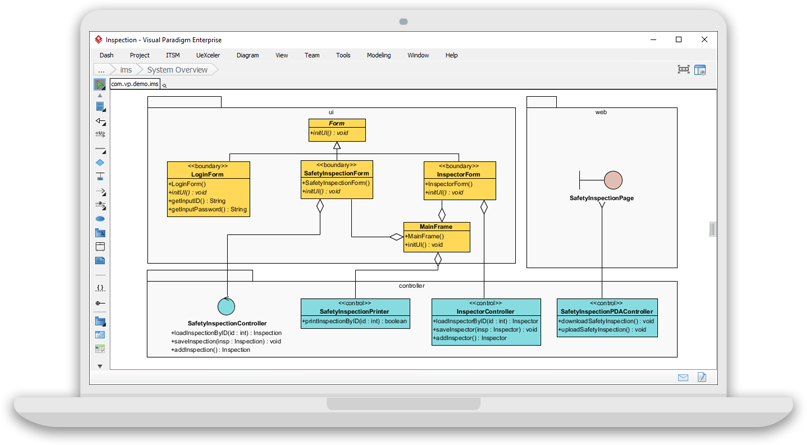

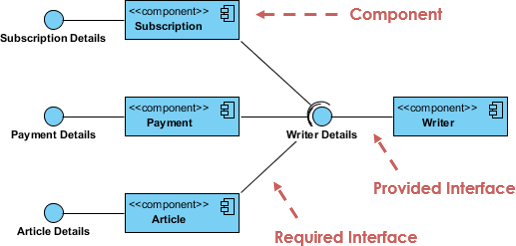

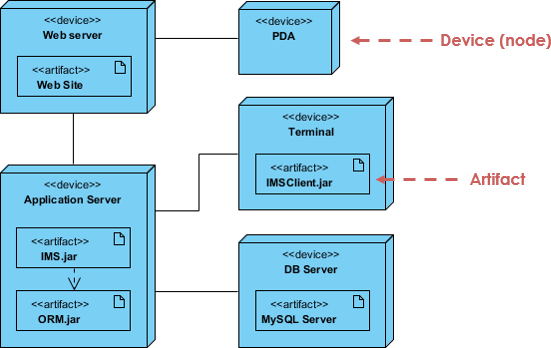

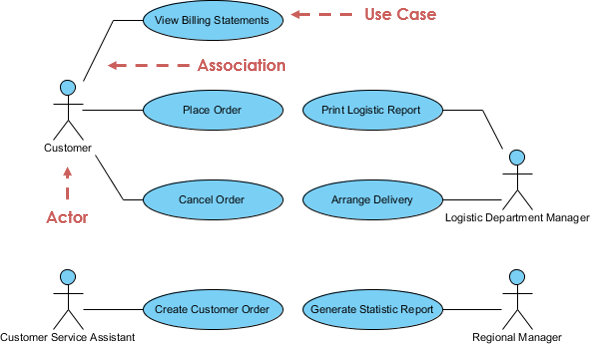

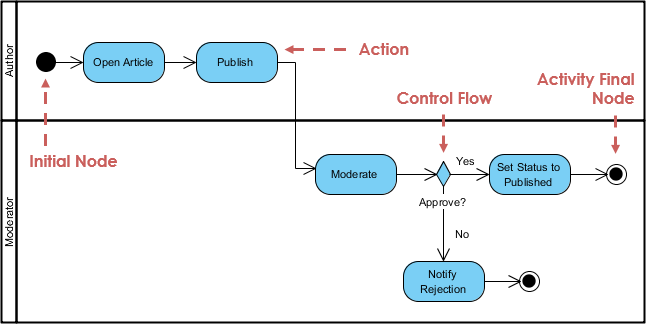

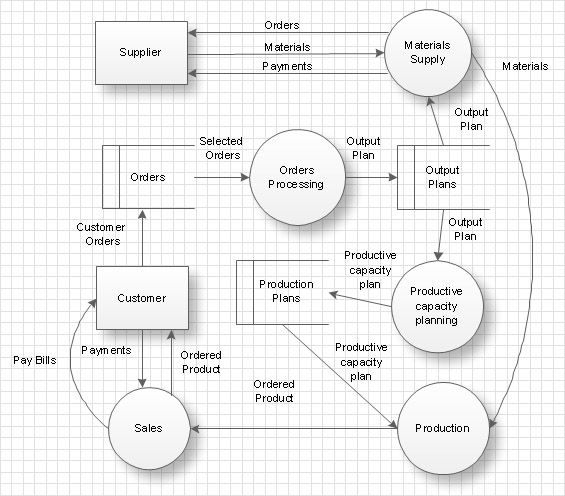

Software design tool

Image courtesy of Visual Paradigm

Users

Software developers that are interested in collaborative design.

Problem

Collaborative tools aren’t tailored towards software design, so we end up trying to create UML documents in Google Docs (awkward). UML tools often don’t support collaborative, which would be incredibly valuable!

Our goal is collaborative UML drawings.

Proposal

An online tool that lets multiple people work together to draw UML diagrams in real-time.

- Can have multiple drawing canvases; on launch choose which one to open and work on.

- Canvases should have a name, date-created, date-edited. Anyone can edit anything.

- Drawing tools: draw shapes; draw lines to connect them; move shapes; change properties.

- Export canvas to JPG (PNG, other formats) for use in other applications.

- Extra: Templates so that you can draw a plain box-arrows diagram, or a specific UML diagram e.g., class diagram, component diagram. (Do not support all UML, but a small subset; focus on infrastructure to add more later).

- Extra: Support more diagrams by expanding the templates!

Design

- Desktop tool, since it’s more precise for drawing.

- Multi-user, so each person has a profile (login/logout/edit).

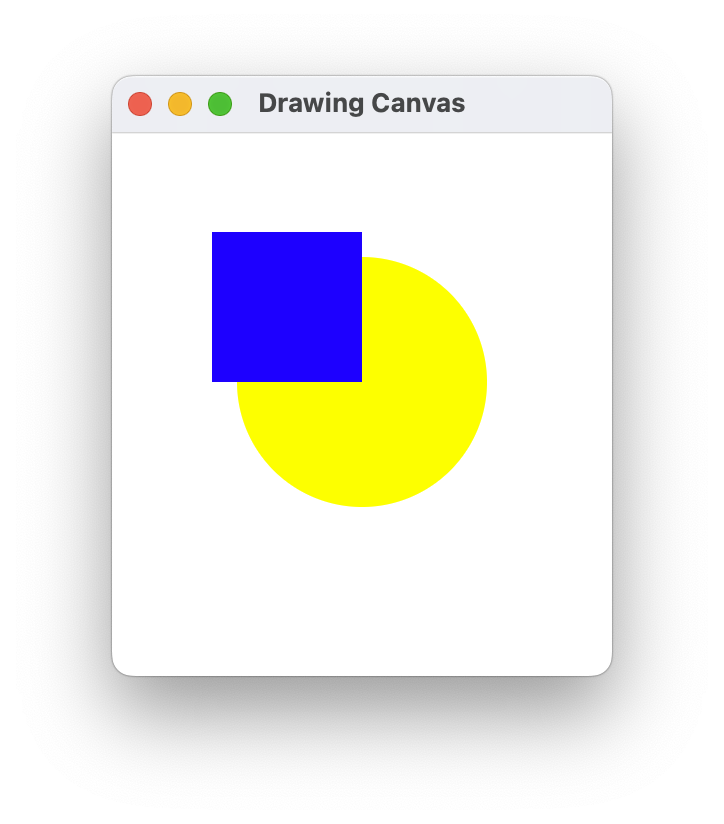

- Investigate

canvasclasses in Compose for drawing arbitrary shapes. - SQL database, since we expect a large collection of templates (predefined shapes) and think that’s a better approach.

- Will likely need a way to handle real-time updates for multiple users working on a document e.g., WebSockets.

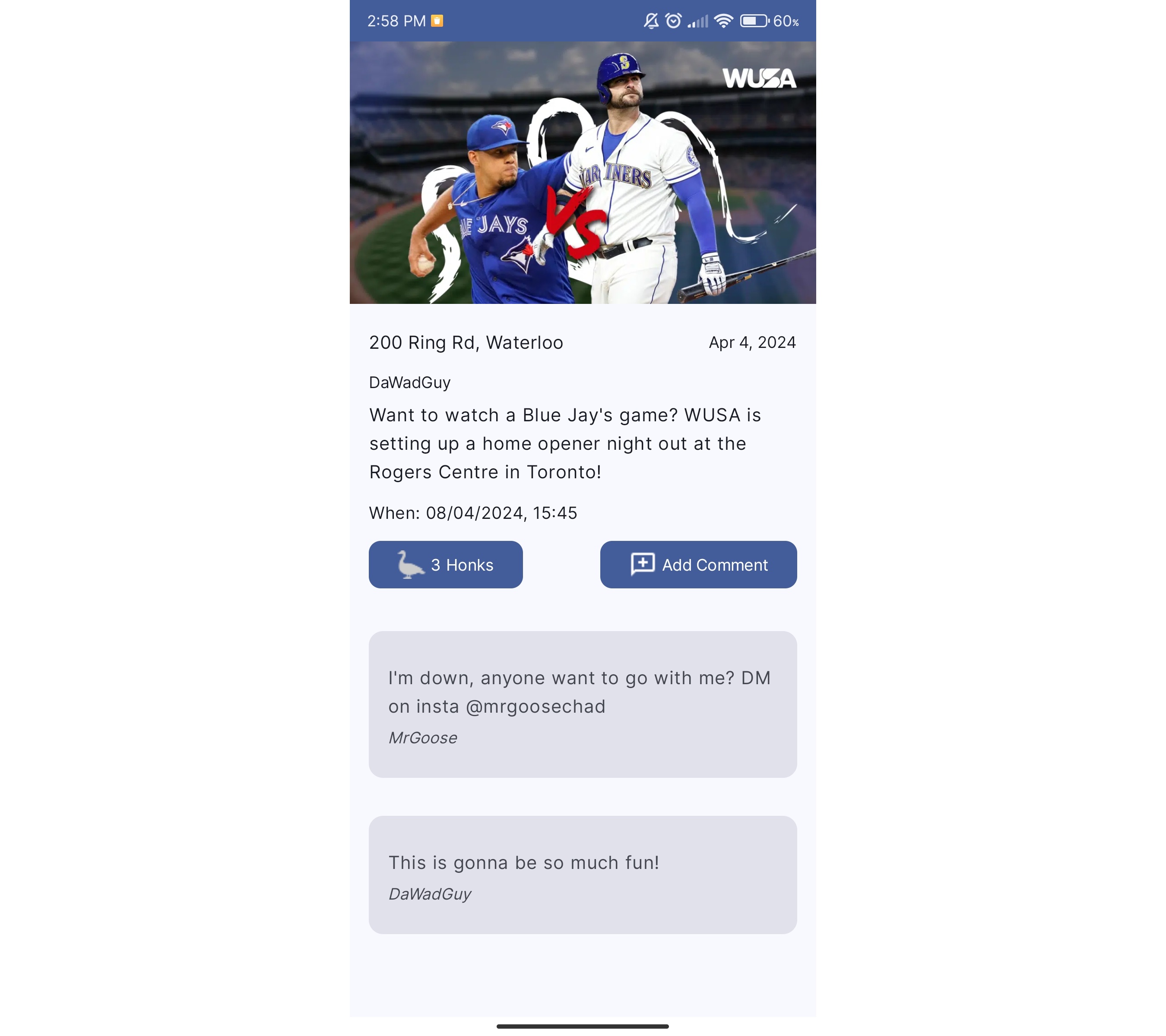

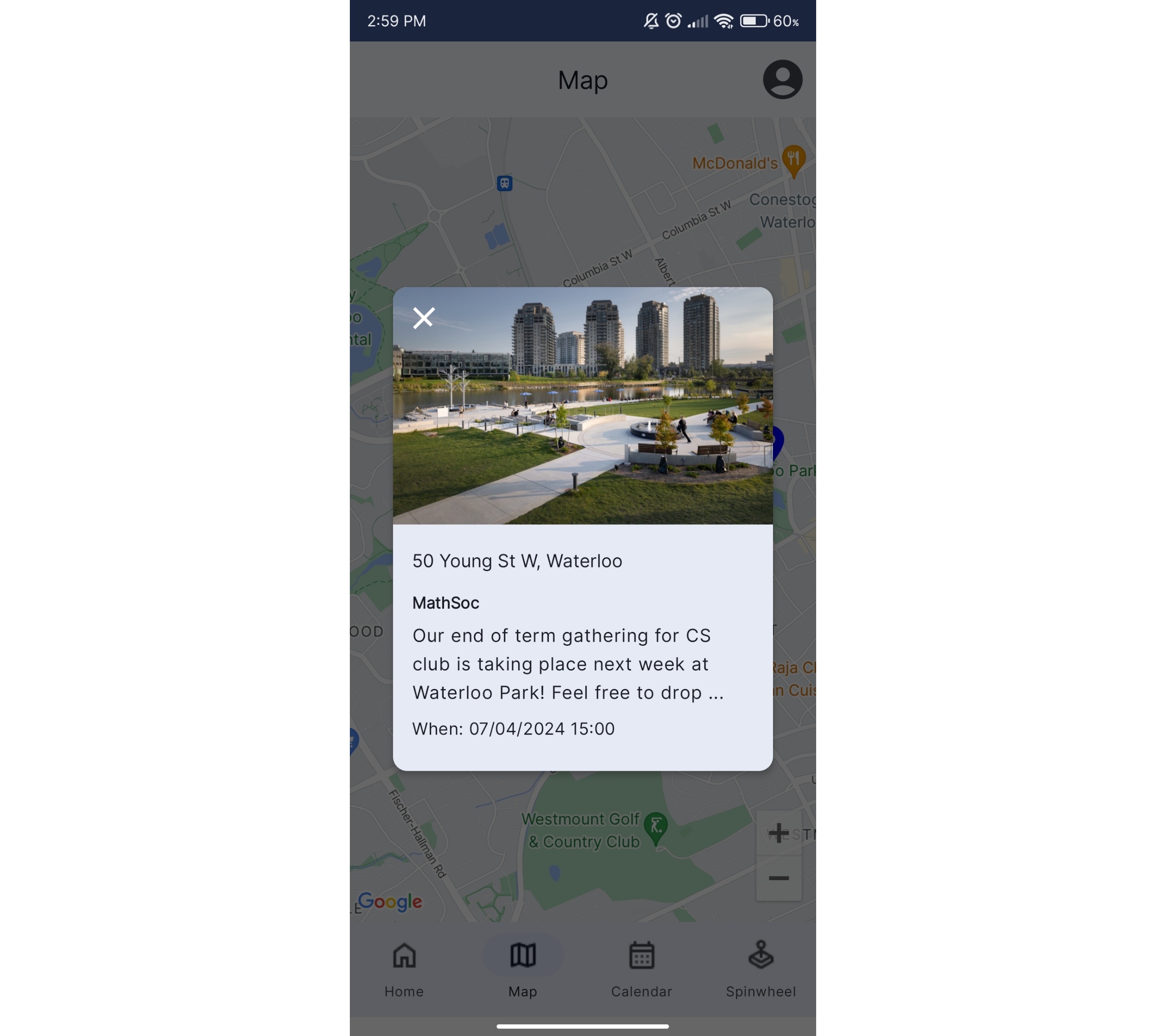

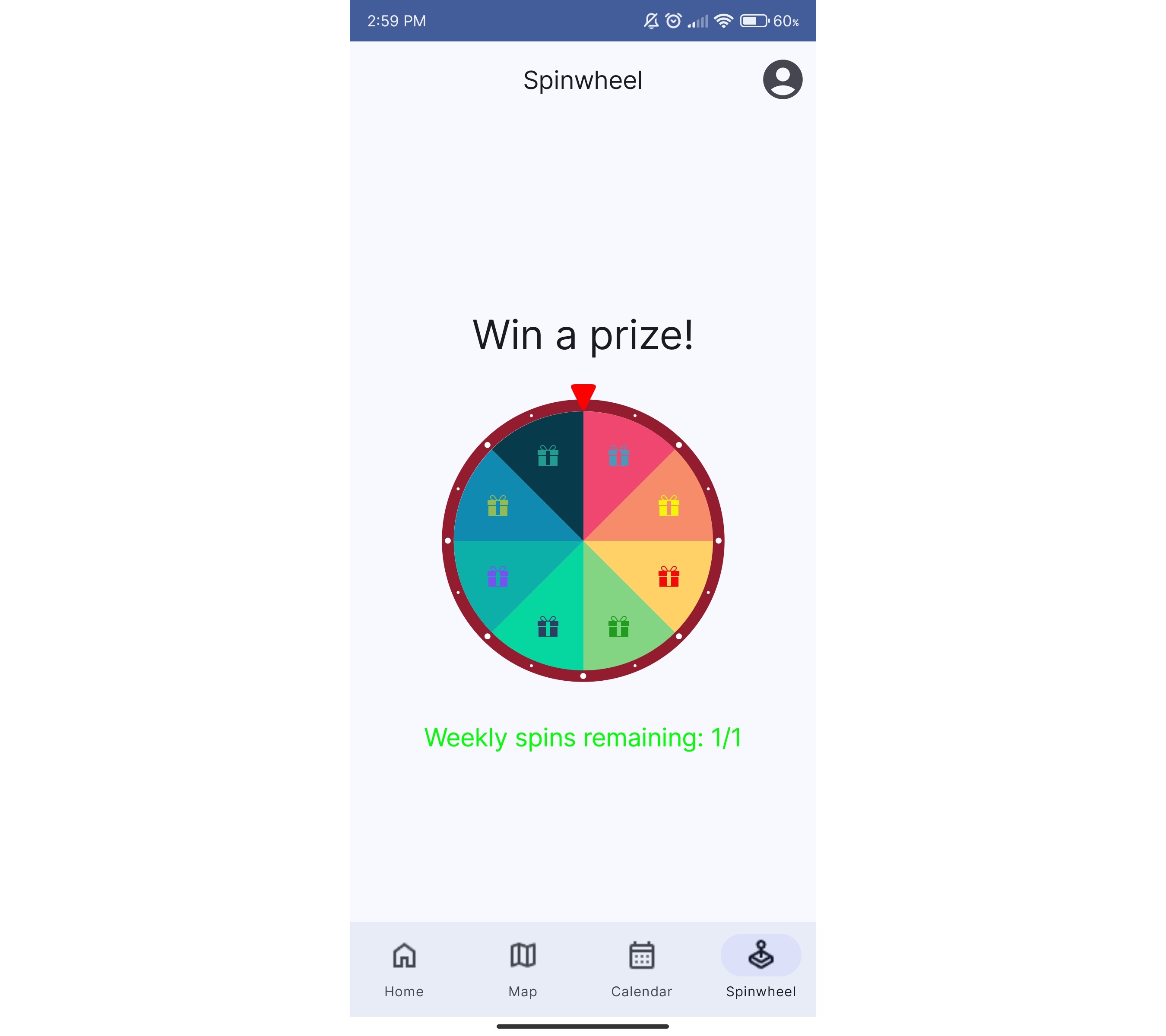

Project Gallery

Showcasing some outstanding Winter 2024 projects.

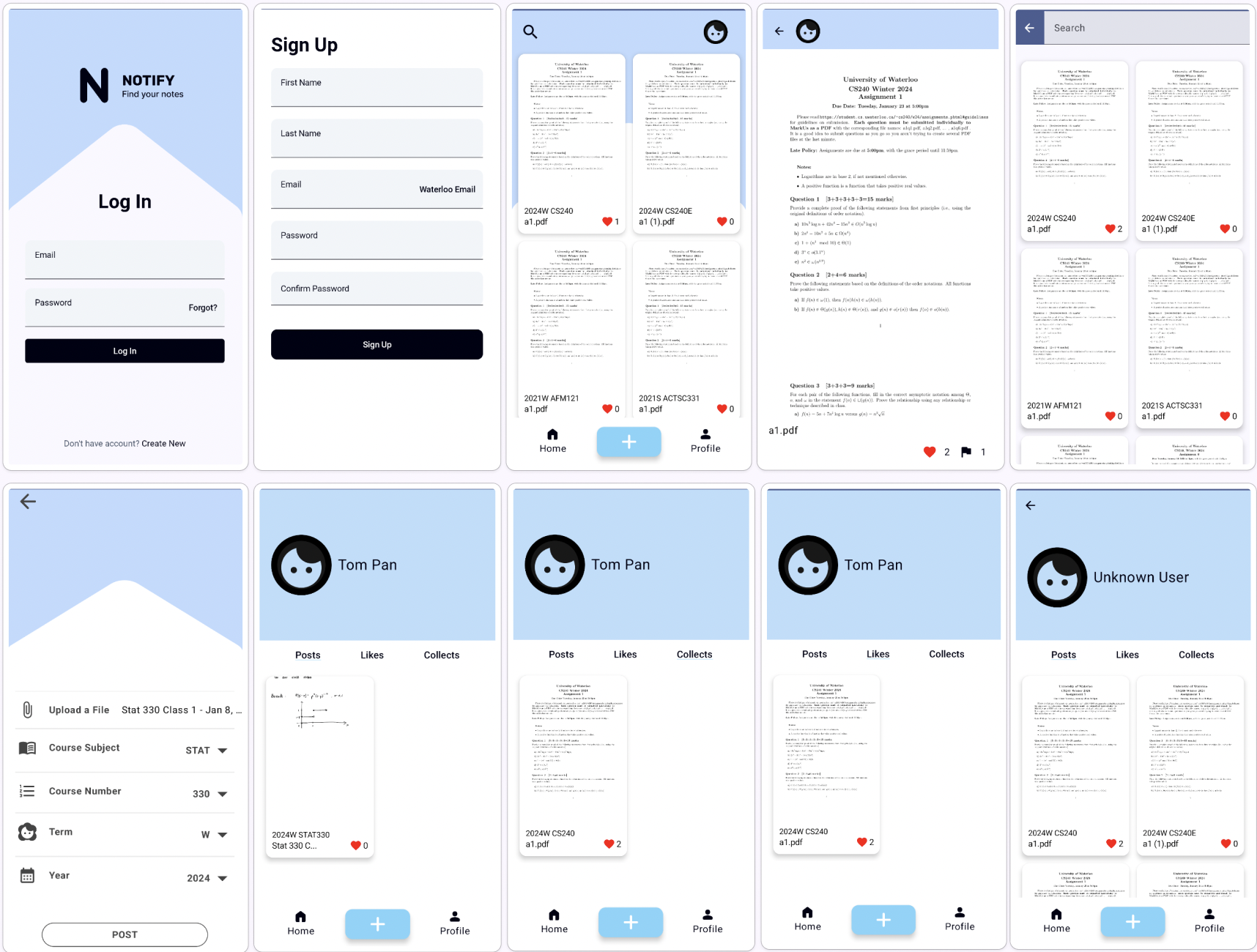

Notify

Jack Li, Tom Pan, Jenny Zhang, Kevin Zhang

Discover Notify, a note-sharing application designed specifically for the University of Waterloo student community. Notify transcends traditional learning boundaries, creating a dynamic and collaborative educational environment where students can seamlessly share, access, and manage academic content.

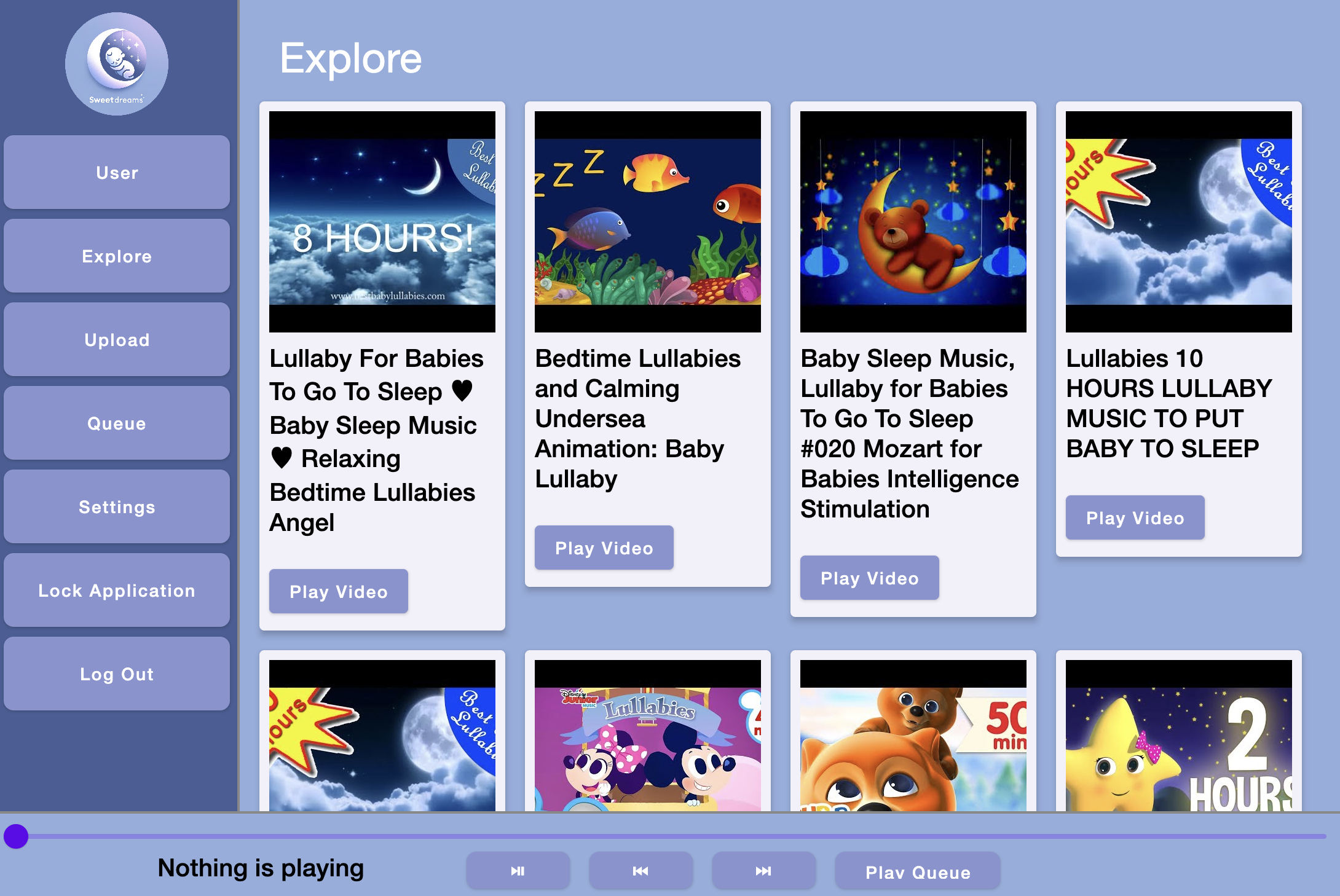

SweetDreams

Rohun Baxi, Akshen Jasikumar, Yun Tao, Areeb Shaikh

This project intends to provide a convenient, one-stop solution for parents to upload and explore lullabies for their children, and for children to play their parents’ selected lullabies. This tool is made for parents and their young children, developed for a CS 346 project in Winter 2024. This app differes from other similar applications by adding an explore page linking lullabies from Youtube and publicly posted ones from other users. Core users will include parents who want to help their children sleep but are unavailable (busy, out of town, etc.).

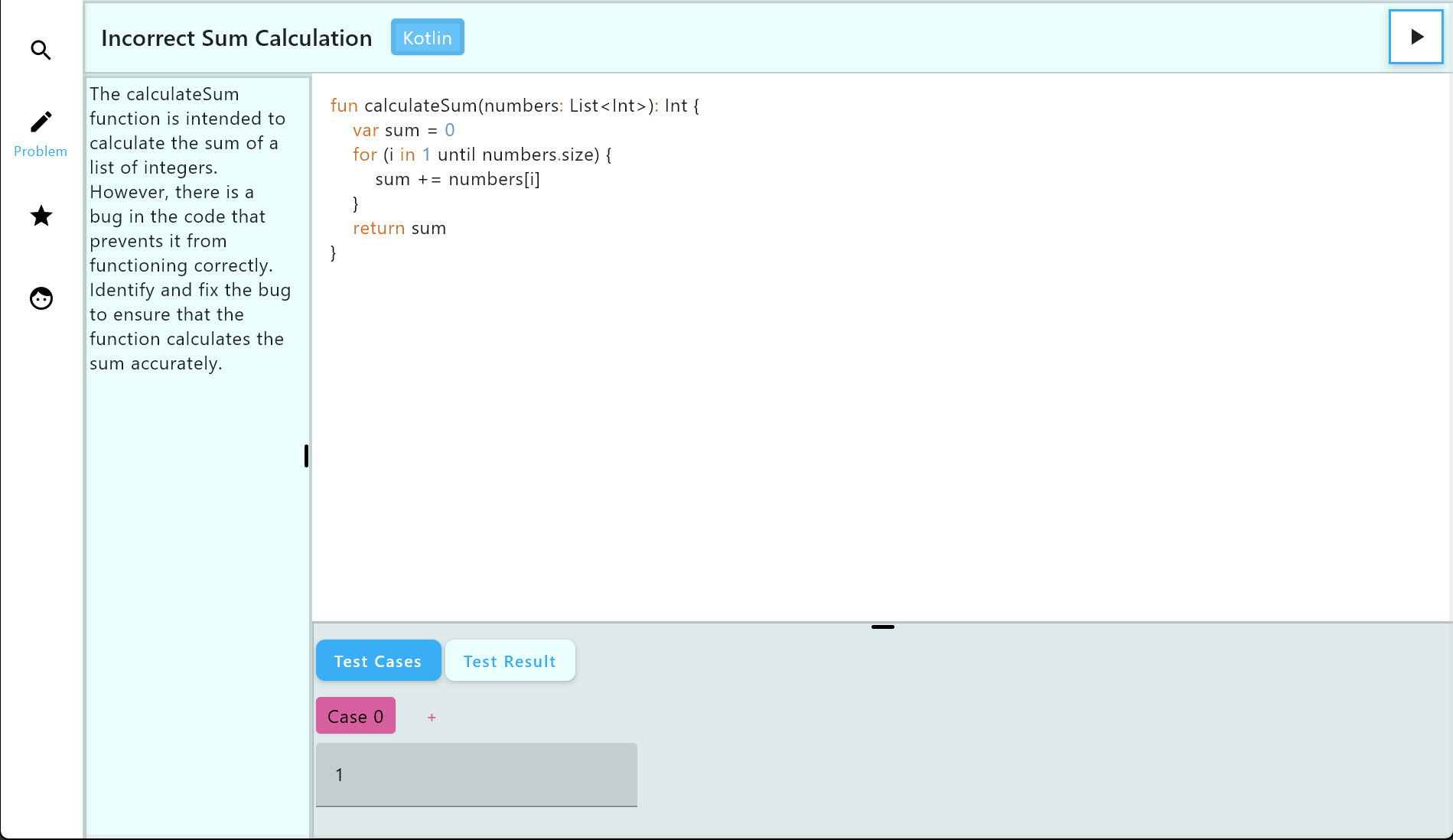

Squash

Stefan Min, Michael Huang, William Behnke, Jason Li

Squash is an app that helps developers practice their debugging skills, developed for a CS 346 project in Winter 2024. Although we are inspired by applications like Leetcode, Squash differs from such apps through its focus on reviewing already completed code.

RemindMed

Gen Nishiwaki, Jacob Im, Jason Zhang, Samir Ali

With RemindMed, never forget to take your medications. With scheduled reminders, your list of medications, your list of doctors, and information on each medication, RemindMed helps patients stay organized and on top of their medications. With support patients and doctors, doctors have the ability to add their patients, send them their prescriptions with usage details, and then have reminders automatically scheduled. As a result, medications can be properly used, leading to improved care for patients, and more time for doctors to help others.

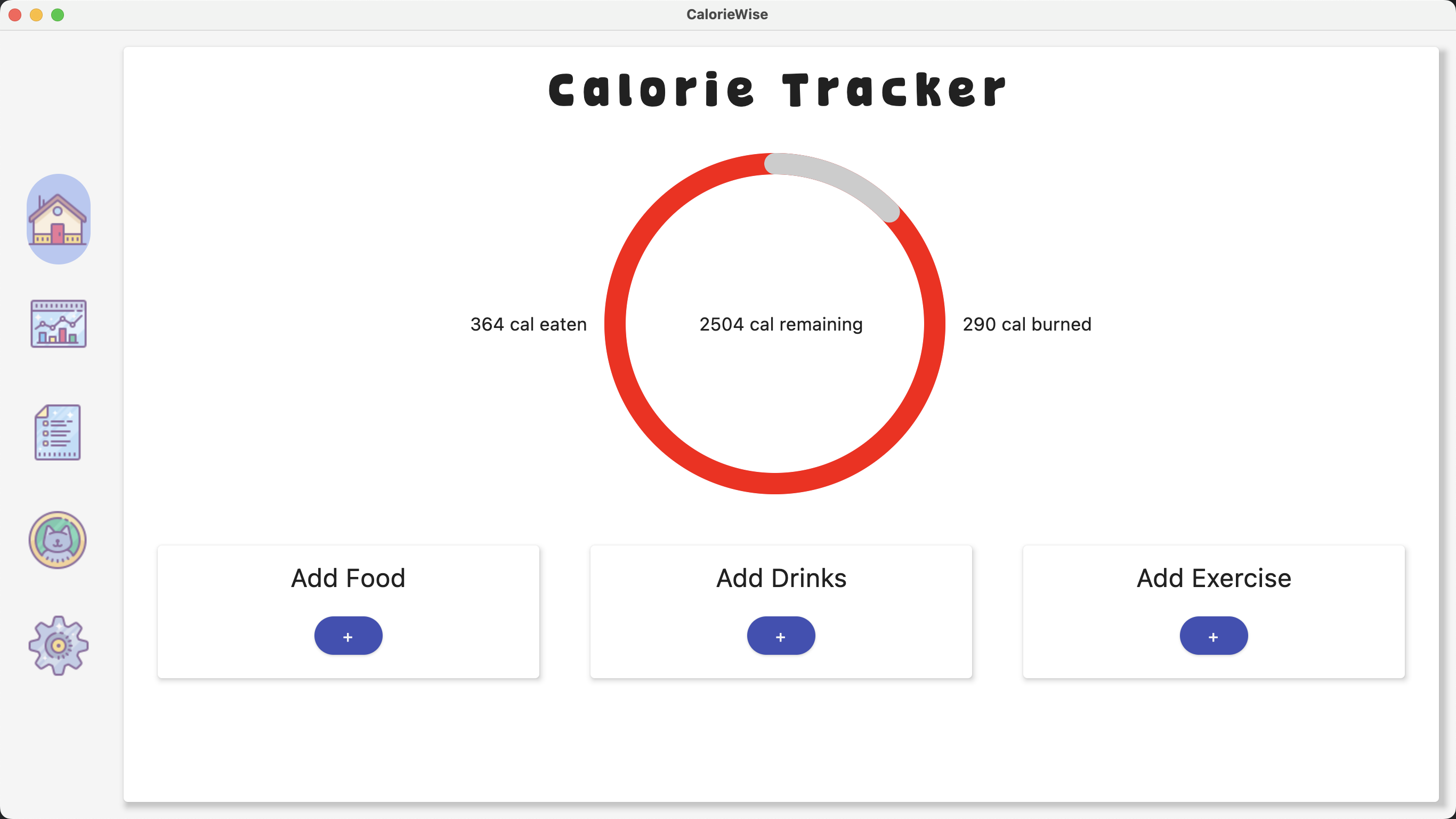

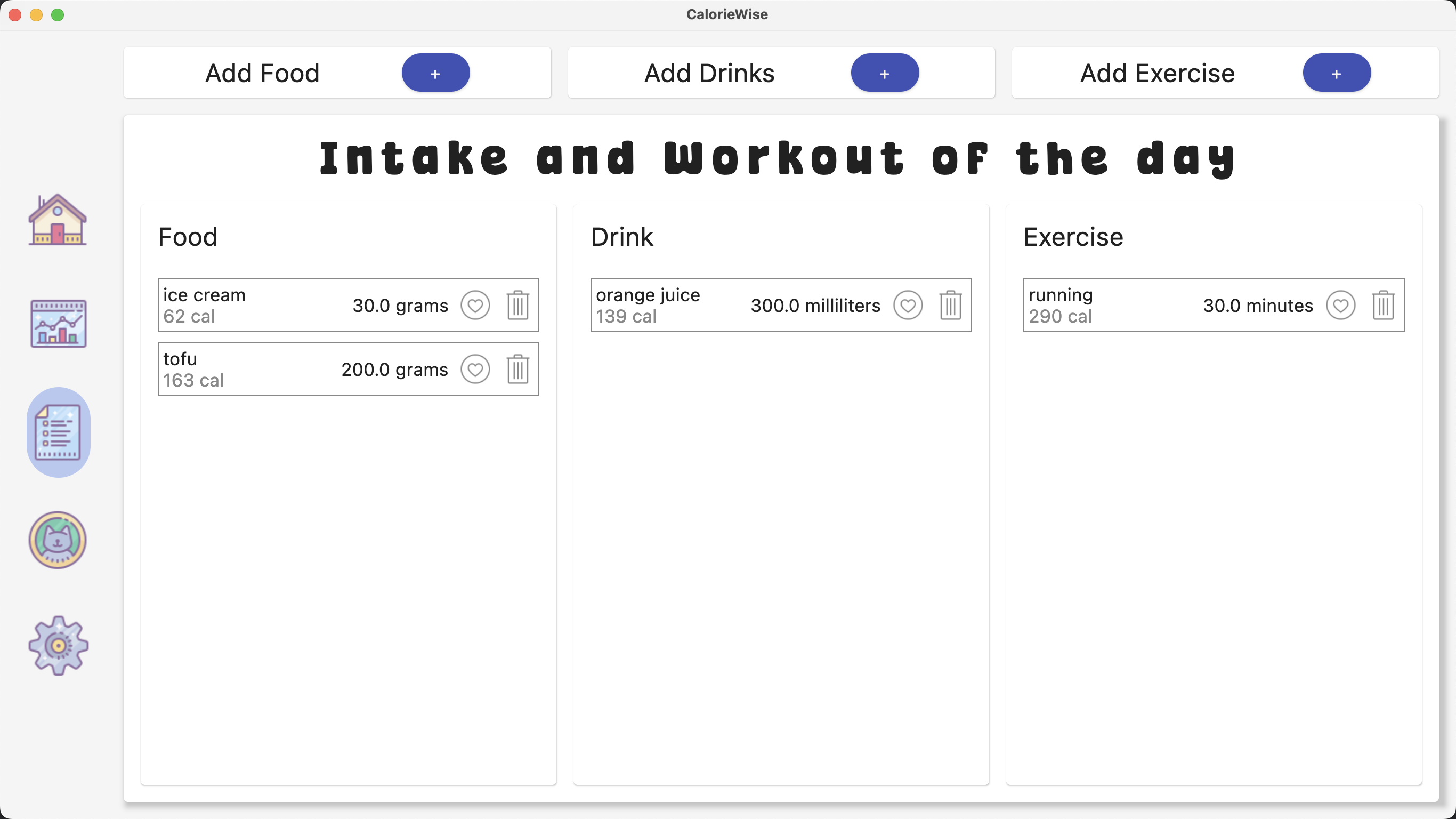

CalorieWise

Dongni Lu, Lynn Li, Peter Li, Yingjia Zhang

CalorieWise is a desktop app that aims to help users better track their diet in order to manage their health more efficiently. The app provides an easy-to-use calorie tracker, breakdowns of your nutrient intake, and an intake/exercise entry page for you to get an overview of your daily calorie consumption. Furthermore, the app recommends a healthy calorie total to better guide you on your wellness journey, which can be updated anytime. Welcome to CalorieWise!

AceInterviewer

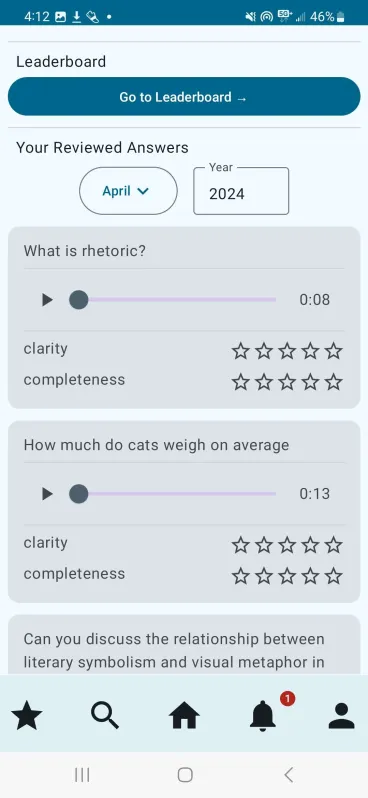

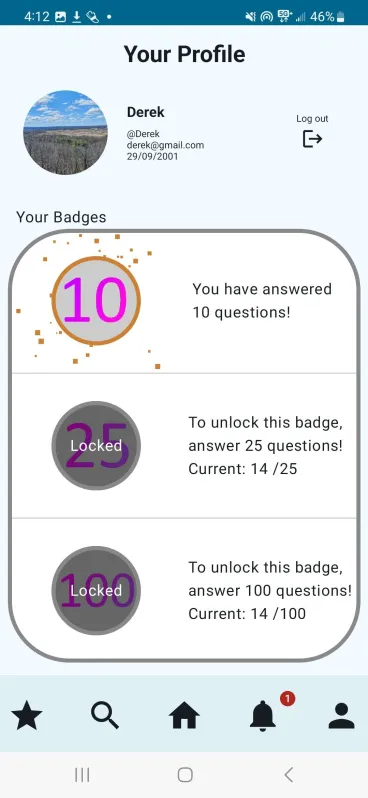

Ryan Maxin, Derek Maxin, Marcus Puntillo, Jia Wen Li Email

Students and professionals alike have limited access to valuable feedback on their responses to common interview questions within their discipline, often leading to solo-preparation with limited feedback on their responses. Our app serves as a forum for common interview questions, responses, and community feedback. Users can answer common industry questions and submit them publicly to be reviewed with feedback.

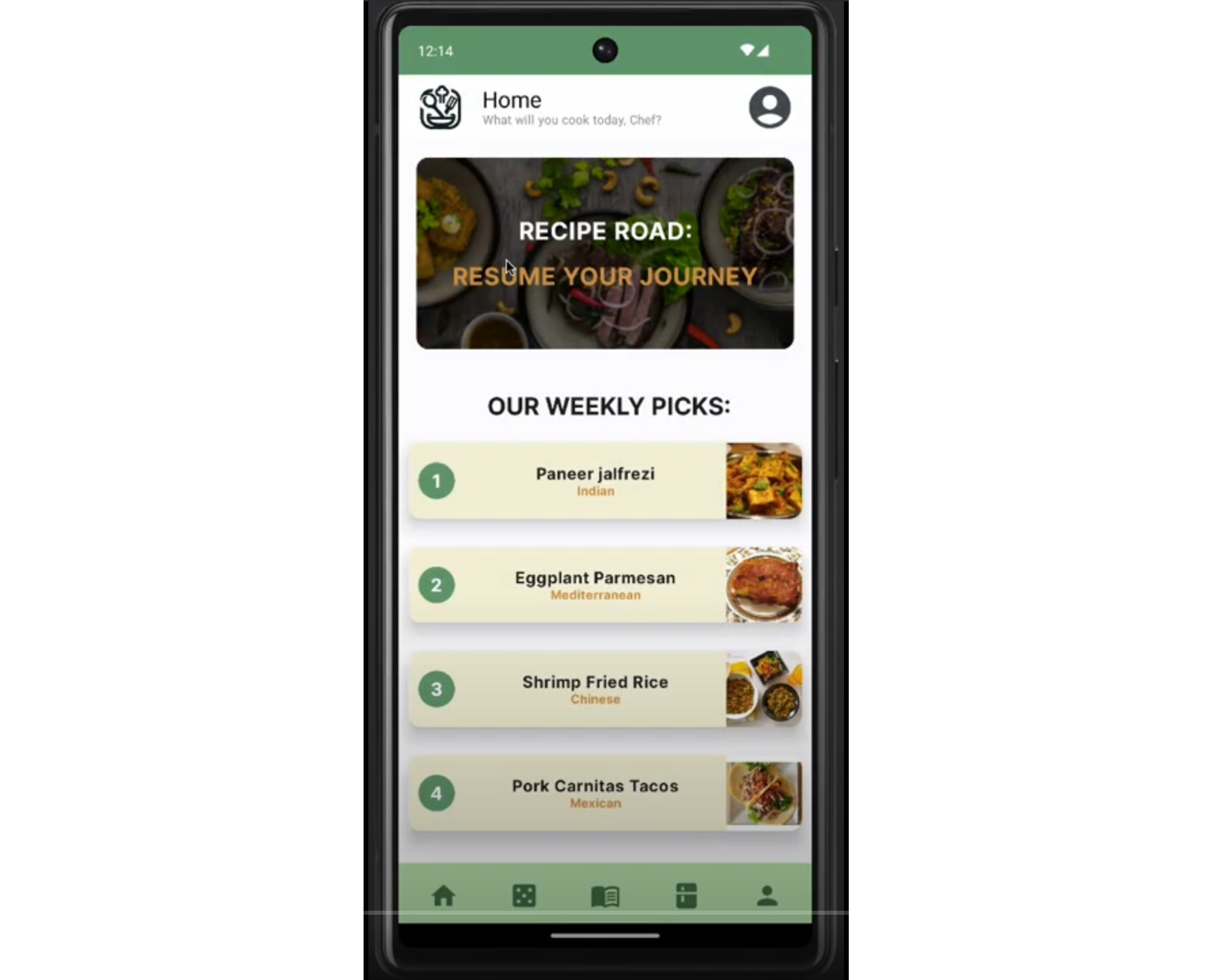

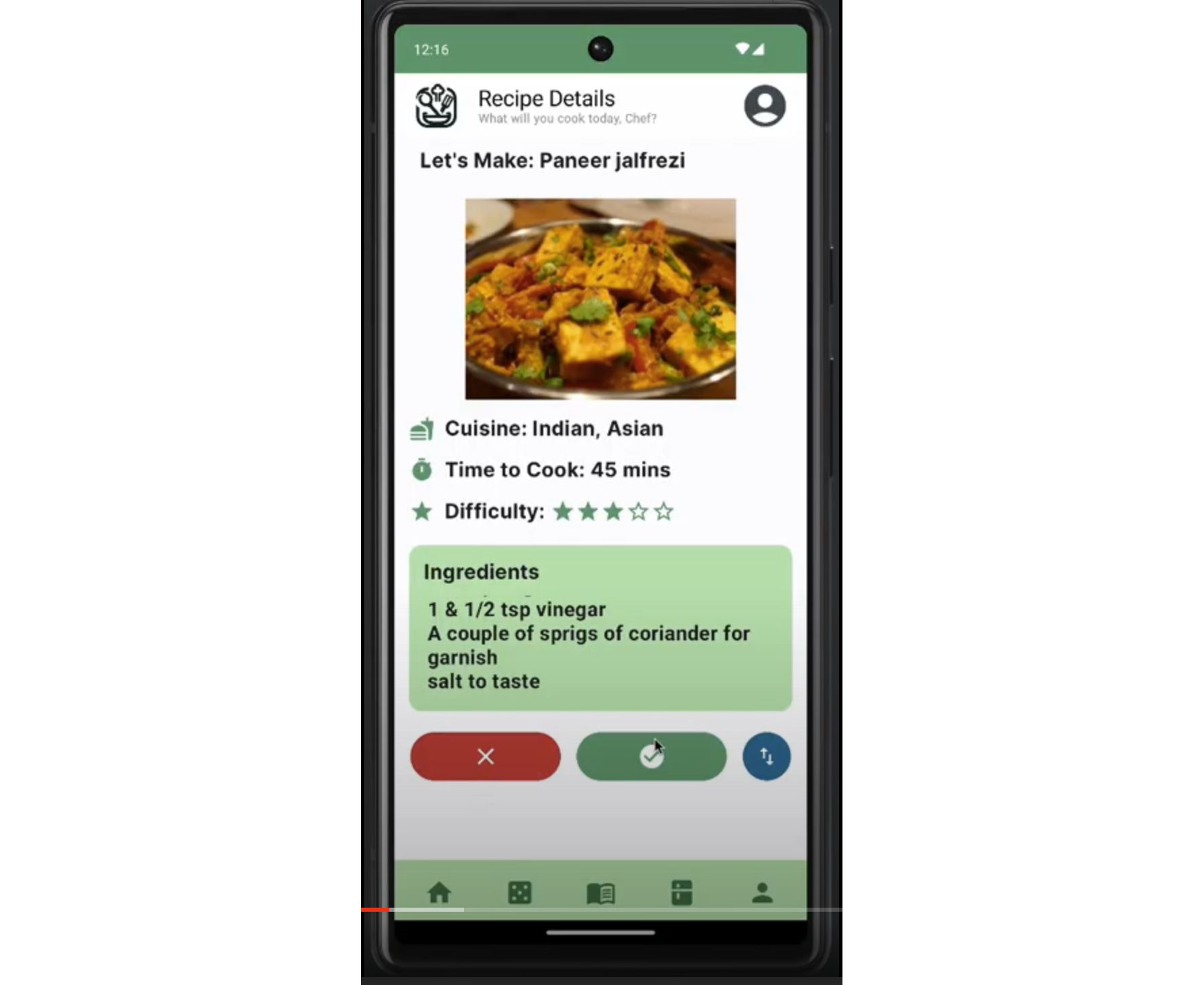

TasteBud

Ayush Shah, Julie Ngo, Lavan Nithianandarajah, Neel Shah

TasteBud is an application that teaches beginner cooks how to cook recipes from different cuisines, but are unsure where to start. This project is being developed for the CS 346 course project in Winter 2024. It differs from other similar applications by using a progression learning method where users can learn the basics of cooking a specific cuisine and gradually increase their expertise. It solves the problem of overwhelming online cooking information and recipes, which often assume prior knowledge of cooking and have limited information on each of the steps for preparing a dish. Youtube videos and other social media platforms are also often too fast-paced and vague. Users are searching for a quick & convenient, user friendly, and fun way to learn cooking!

Univibe

Sowad Khan, Bahaa Desoky, Omer Faruk, Ali Faez

Univibe is a campus exploration app that helps students share and discover interesting places around campus, developed for a CS346 project in Winter 2024. This app will help students share their discoveries and help other students connect and learn more about their campus.

UWConnect

Sagar Patel, Ibrahim Kashif, Alex Yu, Eric Liu

UWConnect is an innovative platform designed to enhance the connectivity and collaboration among University of Waterloo students, faculty, and staff. By facilitating more direct communication and providing a centralized hub for resources and information, UWConnect aims to make campus life more integrated and accessible for everyone involved! Whether you’re looking to join a group related to your interests, find campus events, or connect with peers and professors, UWConnect is your go-to solution!

Deliverables

Items that you are required to submit for grading.

Project setup

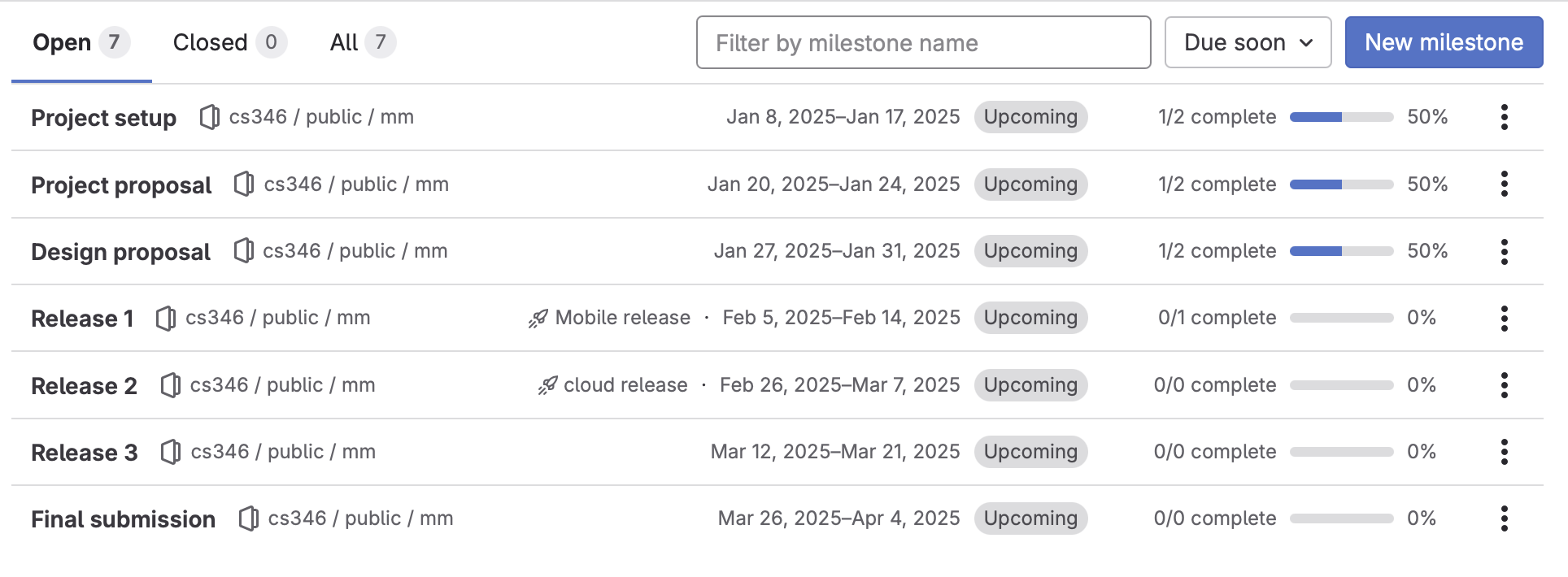

This section describes a project milestone. See the schedule for due dates.

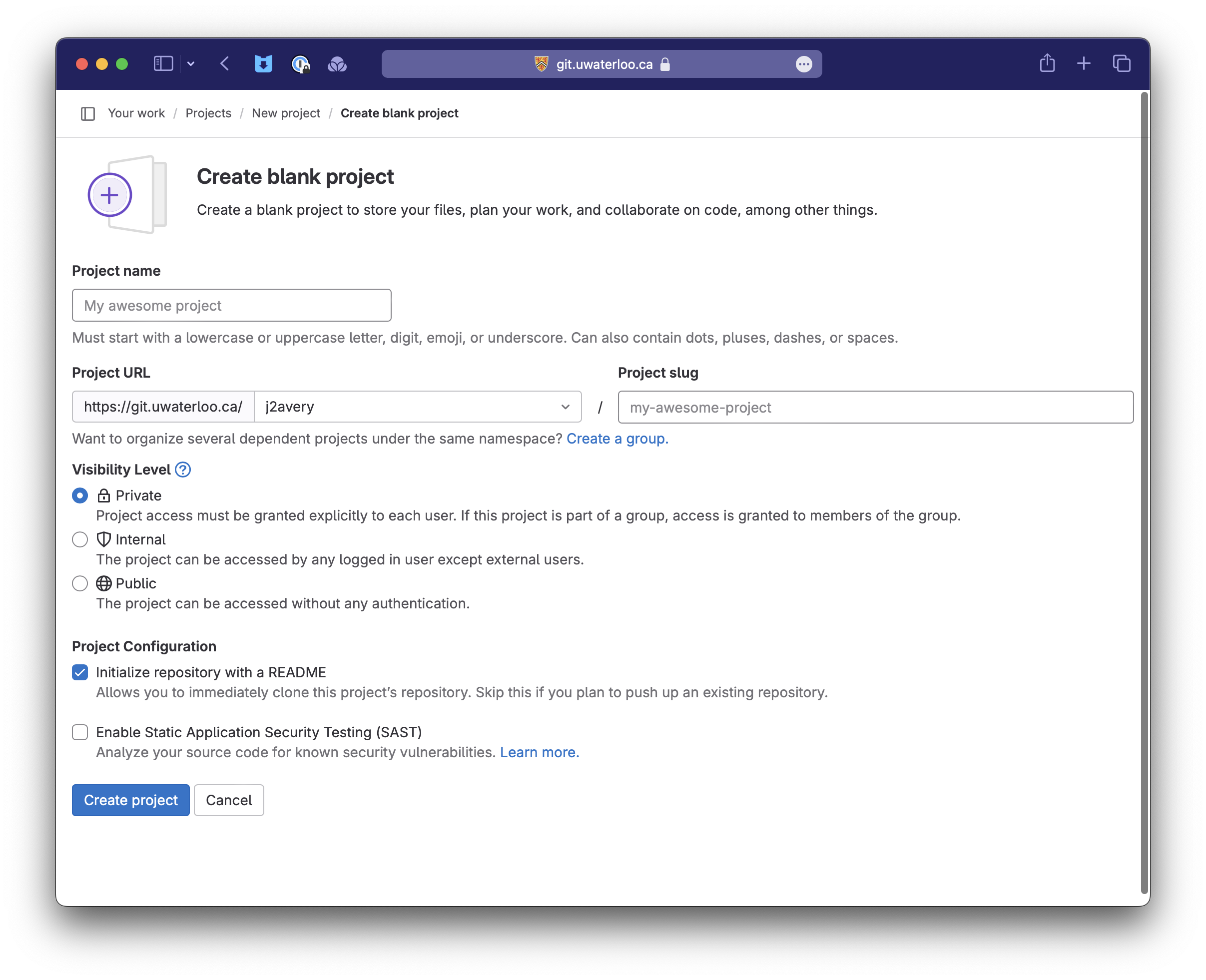

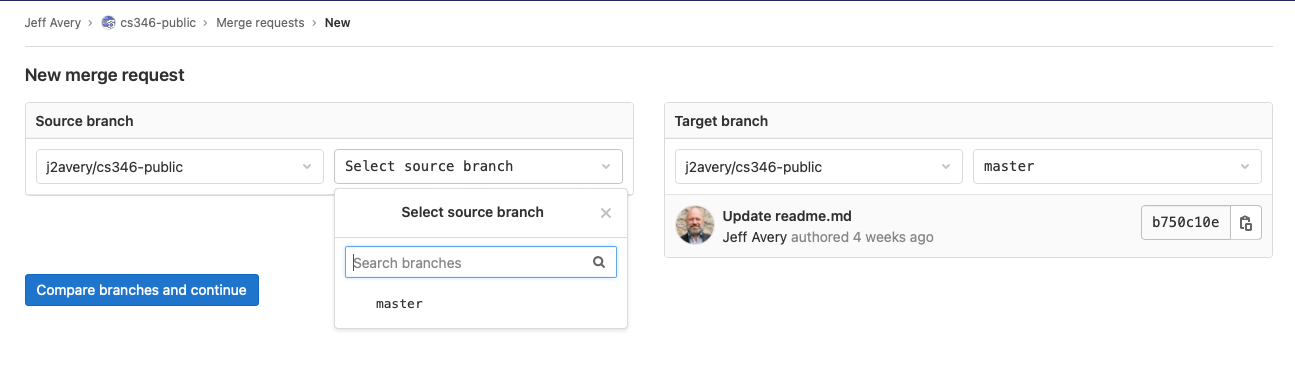

Create a GitLab project

Once your team has been formed, you will need to create a project space in the University of Waterloo GitLab. You should create a single GitLab project for your team, and add the information detailed below. When done, you will need to submit your project details in Learn.

Step 1: New-project wizard

- Open the GitLab home page.

- Select

+>New project/repository>Create Blank Project. - Fill in the form:

- The project should be placed under the Username of one of your team members (e.g.

j2averyin the URL example above). - Visibility should remain

Private. - Your project should have a descriptive name (see Forming a Team). e.g.,

UberTweetsorSocial Calendar.

- Select

Create projectto proceed.

Step 2: Set project security

It’s important that you set permissions on your project. This provides access to those who need it, while preventing others from accessing it!

The person who sets up the project should make the following changes:

- Check project visibility.

Settings>General>Visibility Level- Ensure that it’s set to

Private.

- Add your teammates to the project with full access.

Manage>Members,Invite Members.- When they accept, change their role to

Owner.

- Add course staff with reduced permissions. Add the instructors and TAs to your project.

Manage>Members,Invite Membersand use each person’s email address.- When they accept, set their role to

Developer.

Teams will be assigned TAs in the middle of the second week of the term, before this deliverable is due. You can either wait for those details to be released, or just add all of the TAs and instructors to your project.

Step 3: Add project details

Optionally, you can also add a project icon, and other details.

Settings>General,Project avatar.Settings>General,Project description.

Step 4a: Create a README.md

You should have a markdown file in the root of your source tree named README.md. This will be displayed by default when users browse your project and serves as the main landing page for your project.

You must have at-least the following details included in your README.md.

Simple README.md file

# SUPER-COOL-PROJECT-NAME

## Title

A description of your project e.g., "an Uber-eats app for parakeets!".

## Team Details

Basic team information including:

* Team number

* Team members, listed in in alphabetical order. Include full names and email addresses.

* Link to your Team Contract wiki page.

Step 4b: Create a .gitignore file

Create a single file in the root of your source tree named .gitignore. It should include any directories of files that you want git to not include. This is great way to handle local config files or tmp files that you don’t want in your repository!

Simple .gitignore file

build/

out/

.DS_Store

.idea

.gradle

Step 5: Create a team contract

In your Wiki, create a page for your Team Contract and link it to your README.md under Team Details.

Minimally, your team contract needs to contain:

- Names and contact information of all team members.

- Agreement on how you will meet in-person e.g., how often, and location. You are expected to meet at least twice per week and document it.

- Agreement on how you will communicate e.g., email, Messages, WhatsApp, MS Teams. You need one agreed-up communication channel that everyone will check.

- Agreement on team roles: who is the project lead? Are people taking on specific design responsibilities?

- Agreement on how the team will make decisions. Do you vote? Do you need a majority?

How to submit

When you are done the steps above, please submit for grading.

Note that only one person on the team needs to submit team deliverables. The submission will be associated with your team, not the person that submitted it.

Login to Learn, navigate to Submit > Dropbox > Project Setup, and submit a link to your top-level project page.

This step must be completed by the listed time and date.

Project proposal

This section describes a project milestone. See the schedule for due dates.

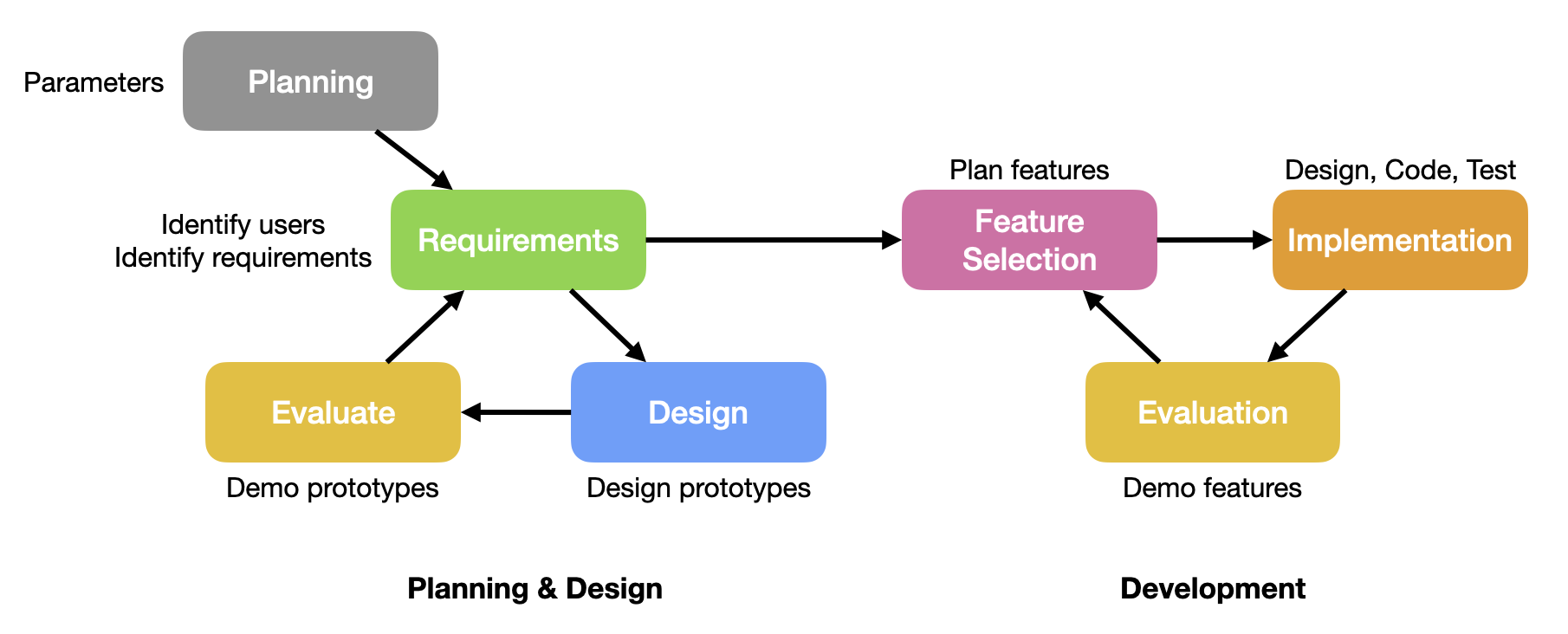

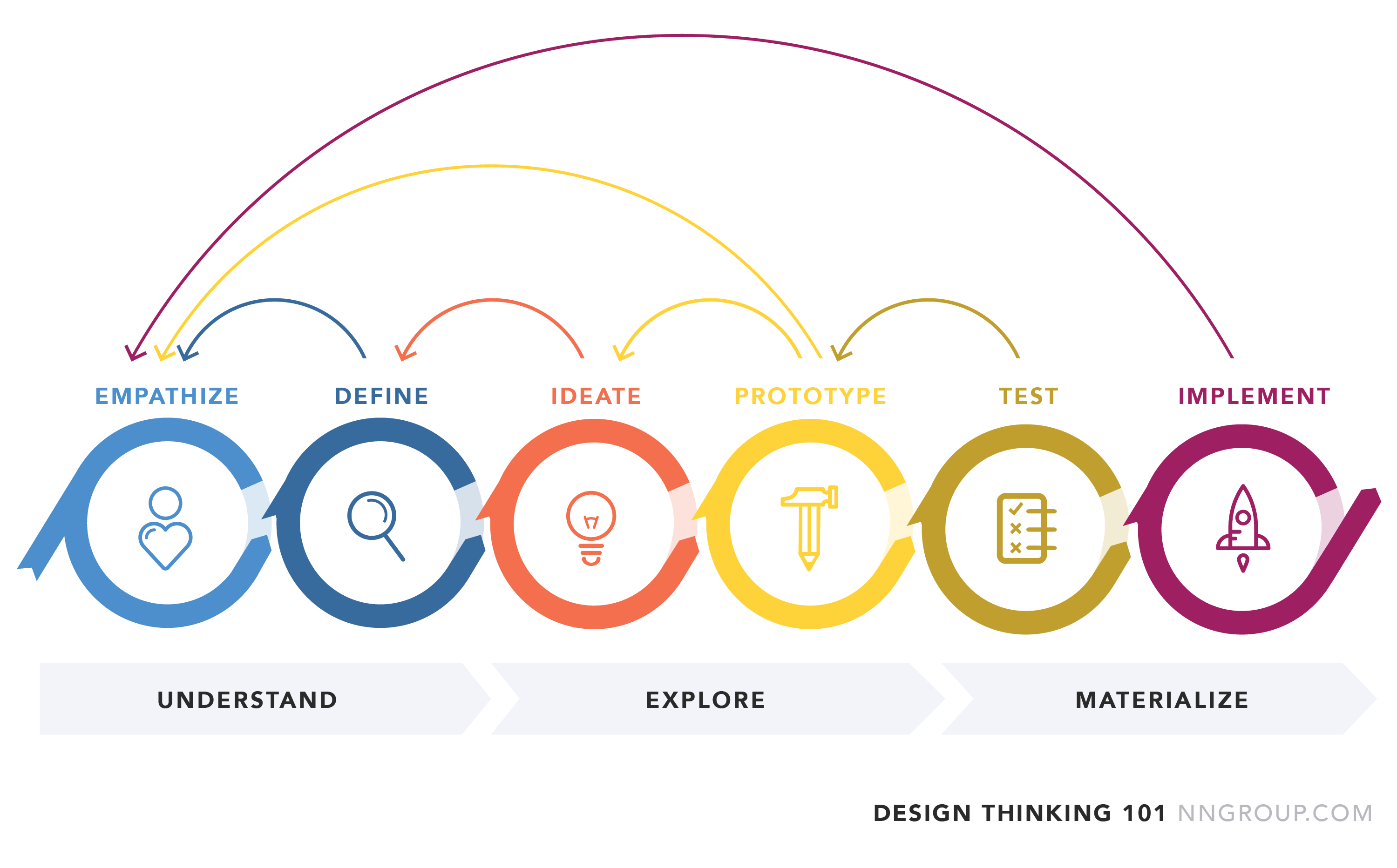

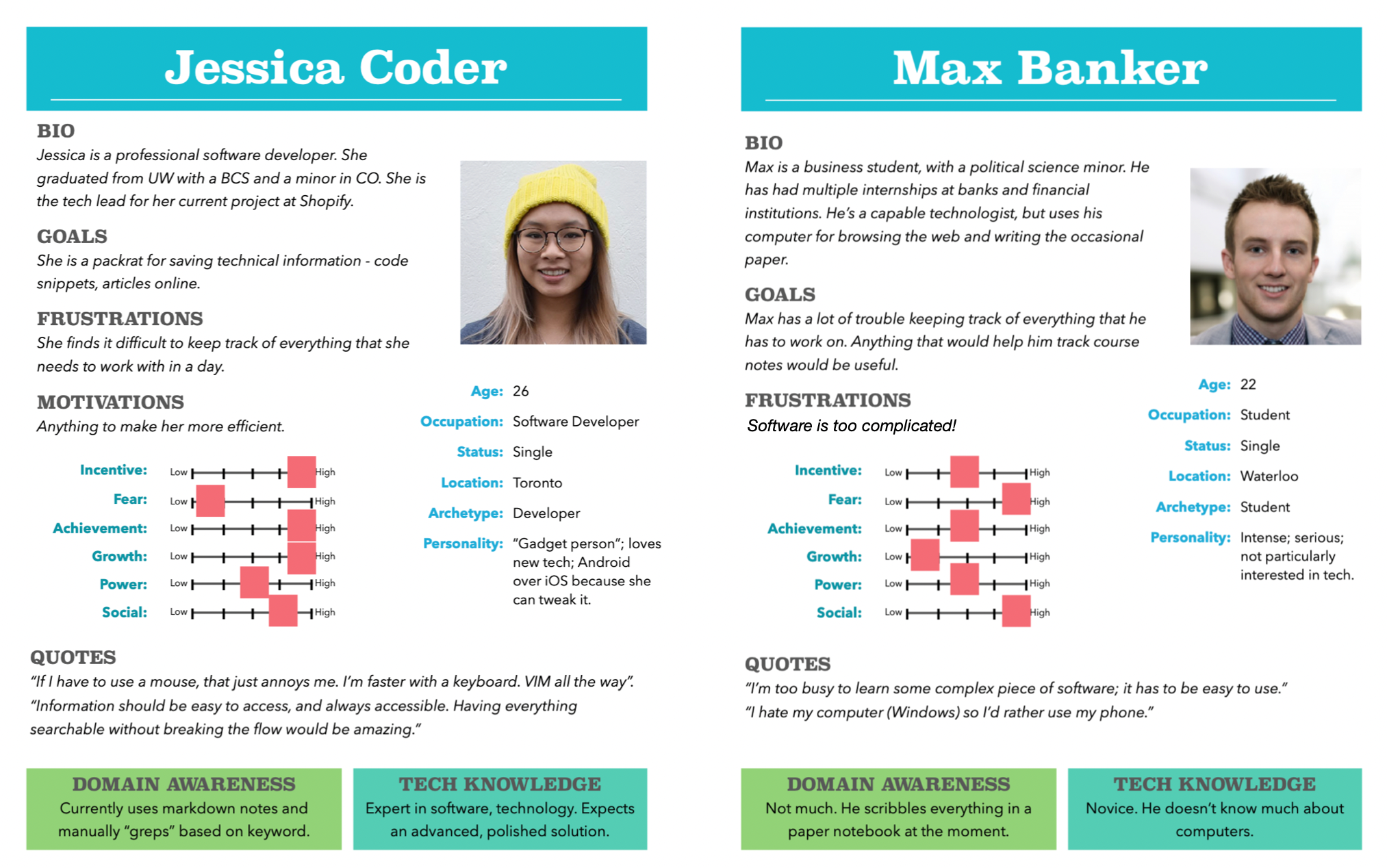

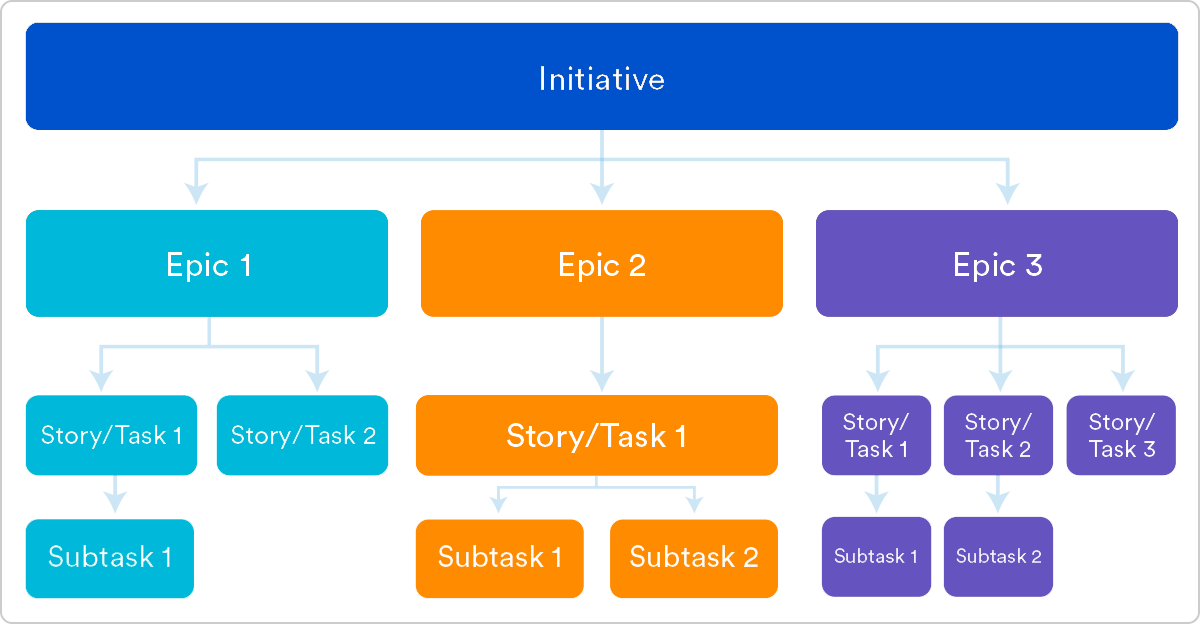

A project proposal identifies your users, and their problems or challenges, and captures some desired outcomes from the point-of-view of the user. This document reflects the Understanding Phase of the Design Thinking process.

What to include

This document should be a wiki page in your GitLab project named Project Proposal. Make sure to link this page to the README.md file so that users can easily find it.

You should include the following sections.

1. Empathize

This section should include the following.

-

A clear statement identifying your users. Be specific about who the group includes, and what specifically what role or tasks you wish to target.

Tip: it’s often helpful to have tasks in mind that you can use to guide questions. Targeting “CS students who want to plan their courses” is better (more-focused and more usable) than “CS students”.

-

Interview notes from at least 2 interviews with people that identify with your group. Write down key insights from the interviews (bullet-points of main points is fine; don’t try and transcribe everything that is said).

Tip: ask open questions either to help you identify some tasks or specifically to understand how they perform some challenging tasks. Make sure to look for things they like/dislike, and try to identify problem areas for them.

-

One or more personas that describe your users. Include goals, motivations, likes and dislikes in your profile.

Tip: the format of a persona isn’t that important, as long as you have key information captured. You can create your own layout, or use the template that is provided in the public repository

2. Define

From your interview data, you should produce the following.

-

User stories. You should be able to list at least 3-4 user stories that describe challenging tasks, problems, or “desirable functionality” from the point of view of your users. These should come directly from the interview data (above).

-

A problem statement. This should be a short paragraph summarizing the user, their context and the underlying problem you will address. We will use this as the basis of your solution.

Tip: It may be helpful to think of this as the “underlying problem” that you are addressing. I also like to think of this as the first half of the “elevator pitch” of what your product is/does, that motivates why we need to build a solution. Do NOT attempt to define a solution in detail; that’s done in the next phase.

Where to store this document

Your proposal should be contained in a Wiki page titled Project Proposal, and linked from the README.md file of your GitLab project.

How to submit

When completed, please submit for grading.

Note that only one person on the team needs to submit team deliverables. The submission will be associated with your team, not the person that submitted it.

Login to Learn, navigate to the Submit > Dropbox > Project Proposal page, and submit a link to your top-level project page.

Proposals must be submitted by the listed time and date.

Design proposal

This section describes a project milestone. See the schedule for due dates.

Your design proposal builds on your Project Proposal.

Where the Project Proposal was about understanding users and their problems, the Design Proposal is about brainstorming possible solutions and generating prototypes for feedback. This document reflects the Explore Phase of the Design Thinking process.

What to include

This document should be a Wiki page in your project named Design Proposal. Make sure to link this page to the README.md file so that users can easily find it.

You should include the following sections.

1. Ideate

This section should include the following:

-

A list of ideas to address the problem statement. You will not likely have “one solution for everything” but instead will have a bunch of ideas for each use case. Your solution will be some combination of these.

Tip: Put down everything; a bullet list is fine. This should be more ideas than you include in your solution e.g., you may initially have 10-20 broad ideas, that you will distill to a smaller number in your solution below.

-

A proposed solution. Which combination of ideas from above do you want to pursue? Which ideas together reflect the best solution to your problem statement (from the Project Proposal)?

Tip: Write a paragraph explaining why you think this combination of ideas is most suitable. You can consider technical reasons, logistical challenges, even design problems like how features will work together.

This does NOT need to be an exhaustive design document! It’s enough to have a paragraph or two explaining your thinking, and a bullet list of 5-10 sentences describing features that you expect to implement. You want enough detail to know what to prototype, but no more than that.

2. Prototype

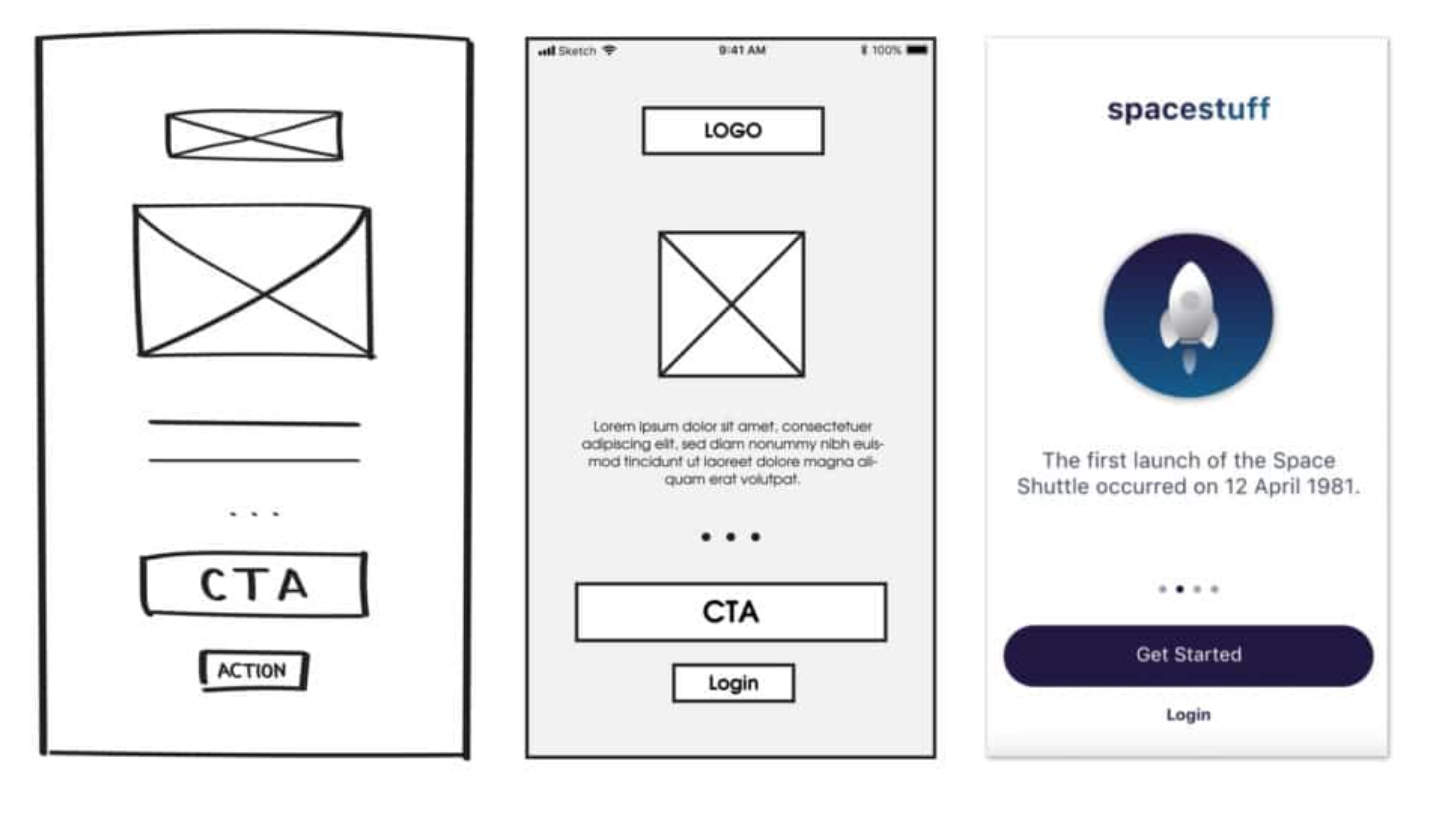

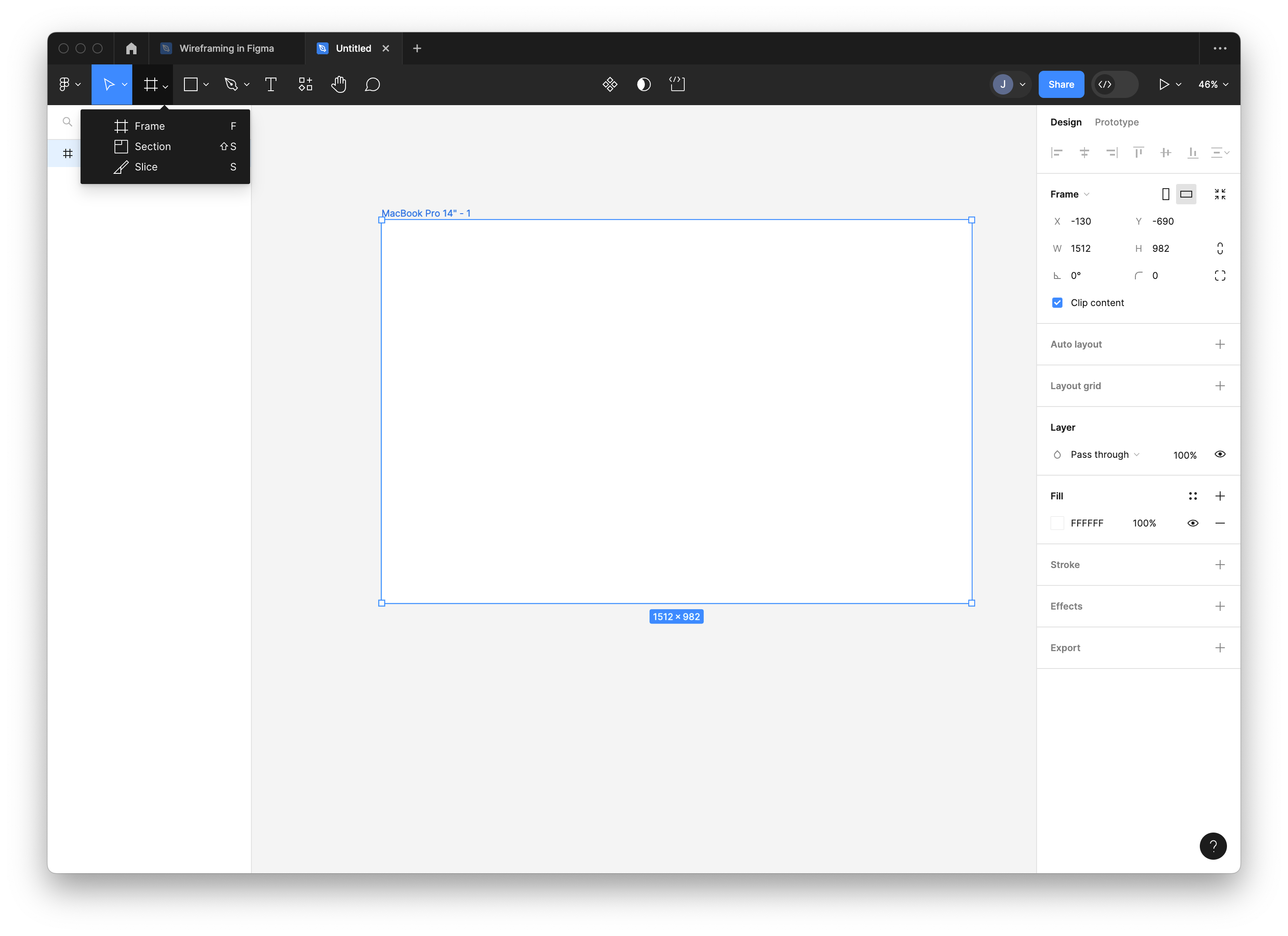

Finally, you want to generate low-fidelity prototypes that reflect your solution.

You should have:

-

Mockups of every screen that you anticipate needing. Generally you will have one or more screen to address each user story. These should be low-fidelity or wireframe diagrams i.e., screens showing a sketch of the screen contents, with labels if necessary to make the sketch clear.

-

Some indication of progression through the application. Explain to us how the user progresses though the application (e.g., “clicking on the Create account button on this screen moves to the Create dialog”). Drawing arrows between screens is fine, as long as you label them to make it clear how and when transitions occur.

Tip: It’s highly recommended to create prototypes in Figma, or some other online wireframe tool. Make sure to include these diagrams in your actual design document i.e., as screenshots or images. You cannot just provide links to Figma!

Where to store this document

Your proposal should be contained in a Wiki page titled Design Proposal, and linked from the README.md file of your GitLab project.

How to submit

When complete, submit for grading.

Note that only one person on the team needs to submit team deliverables. The submission will be associated with your team, not the person that submitted it.

Login to Learn, navigate to the Submit > Dropbox > Design Proposal page, and submit a link to your top-level project page.

Proposals must be submitted by listed time and date.

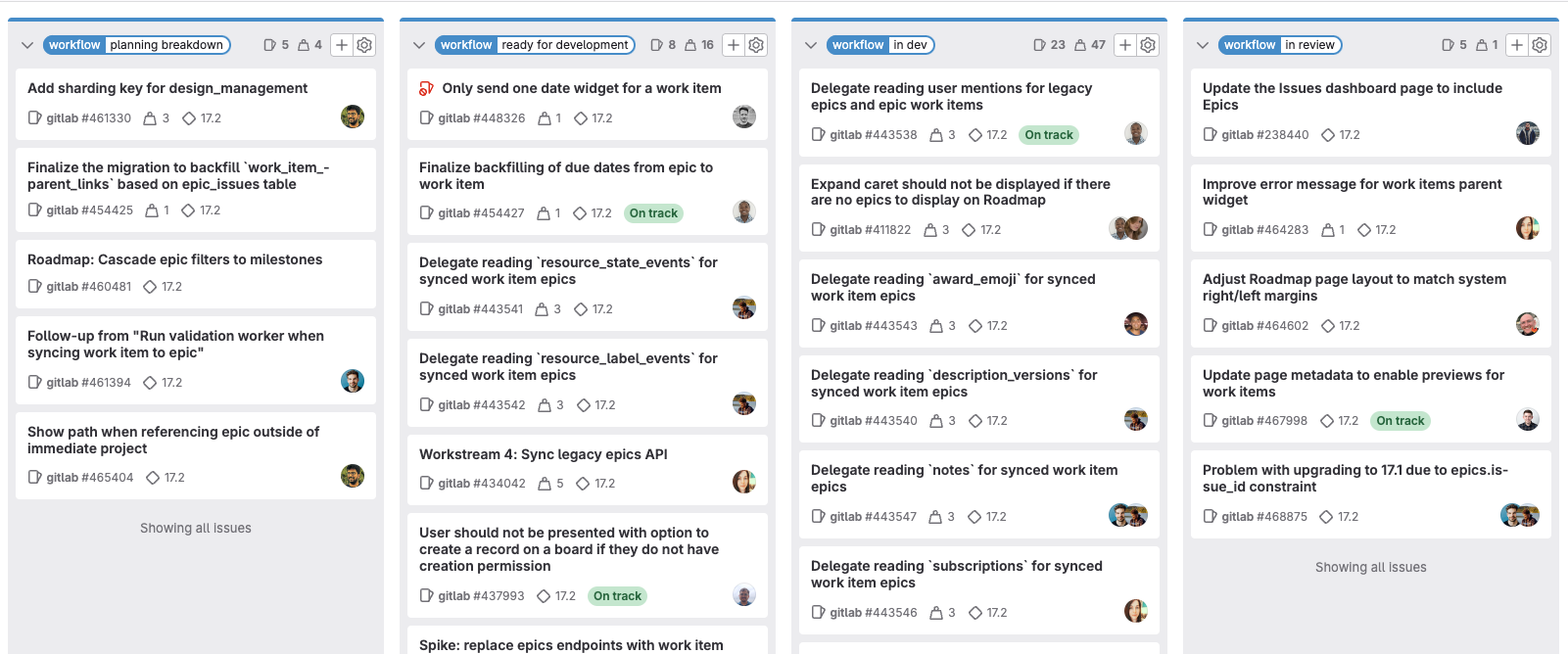

Coding & demos

This section describes a project milestone. See the schedule for due dates.

You are working through product iterations, where you plan, design, and code in two-week cycles. You always start with planning, and end with a demo of what you accomplished.

First day: planning

The first day of an iteration should include be a planning meeting, where you:

- Review the results from the previous demo.

- Review work that was not completed and decide if you wish to continue working on it.

- Plan out the remaining work, taking into account everyone’s availability, priority of issues and so on.

By the end of your meeting on the first day, everyone should have issues and work assigned to them.

Middle days: design, coding

Over the remaining two weeks, you should meet occasionally to coordinate your work.

You and your team should meet at least twice each week to review your progress.

- Record meeting minutes and store in the wiki. Meeting minutes need to include: date, who attended, what you decided.

- See gitlab/templates for a sample of a meeting minute format.

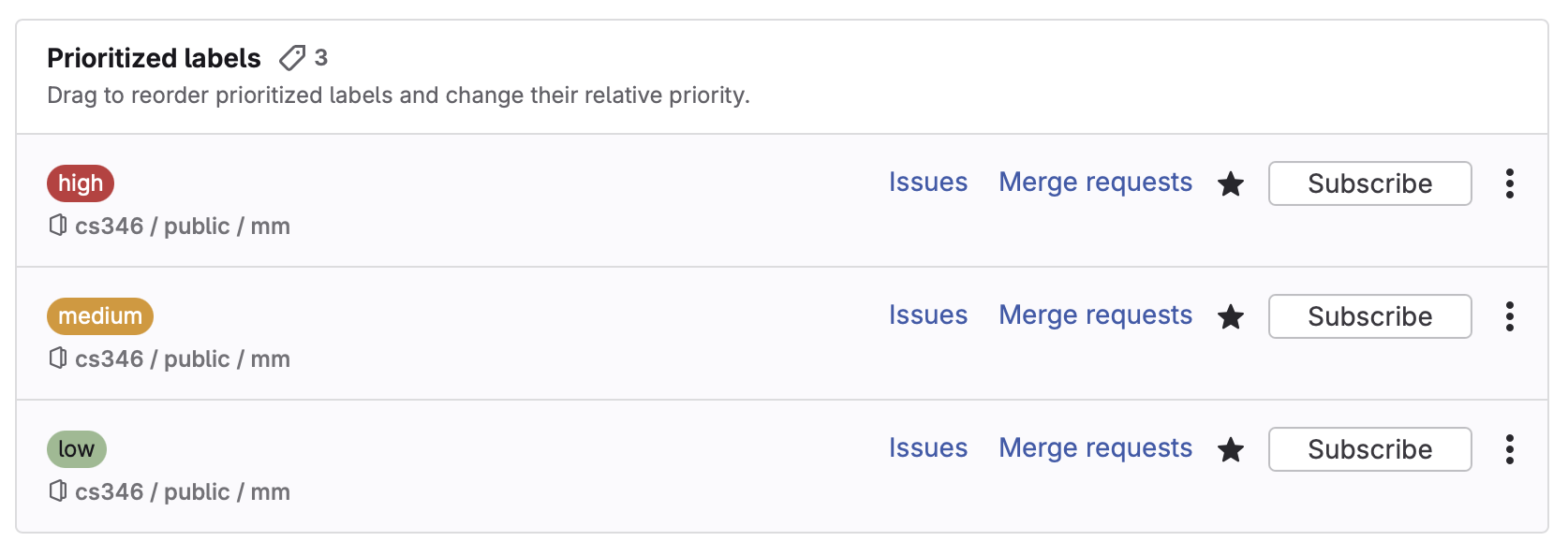

Issues must be maintained and updated as you work. Document your work indicating what was done. Use GitLab issues as the primary place to store information about what you’re working on.

- The issue list in GitLab should always reflect the state of the project.

- Issues always stay assigned to the person responsible for that work.

Last day: demo

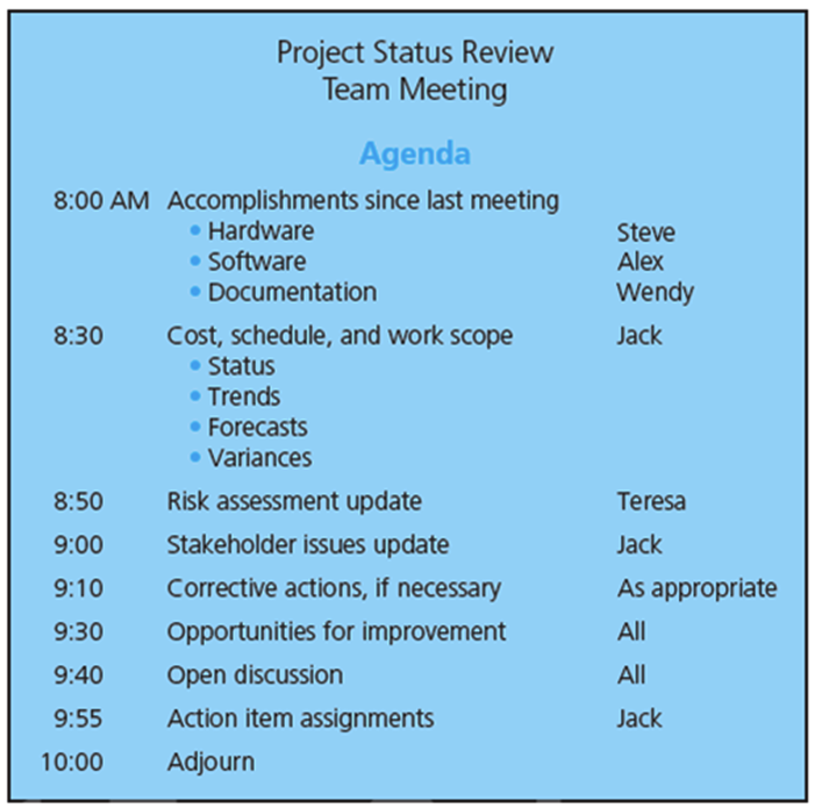

On demo days, you will meet your TA in class and present your progress.

- See the project team schedule for your assigned timeslot.

- You only need to be there for your assigned time.

- You have 15 minutes for the demo.

Before the demo:

- Meeting minutes should be complete and posted.

- All code must be committed and changes merged back to the

mainbranch. This includes implementation code and associated unit tests (which should pass). - All issues in GitLab should be up-to-date i.e., comments added and issues closed if completed.

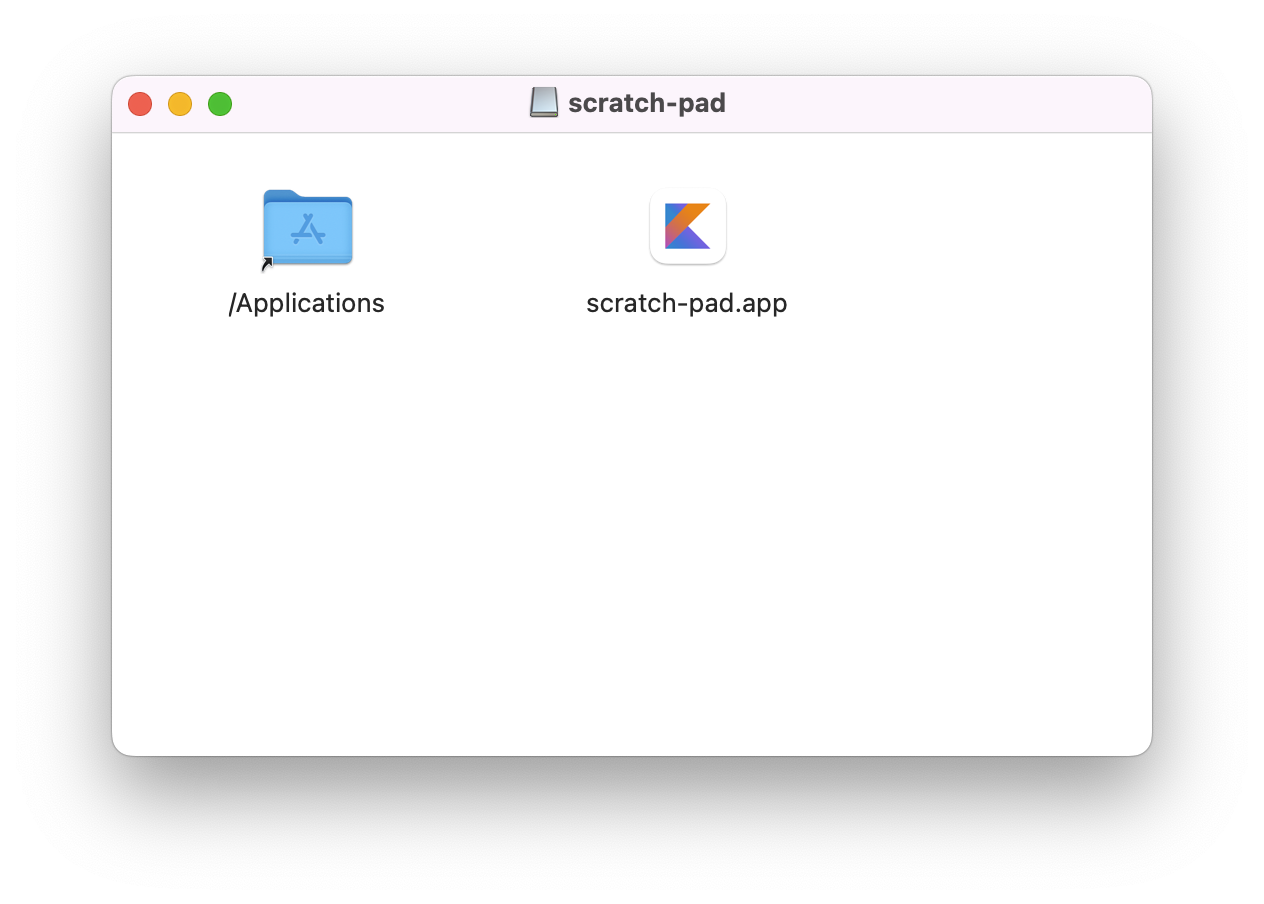

- You must walk through the software release process.

- This means creating Release Notes in GitLab, with a list of issues that you fixed.

- It should also include a link to an installer (or APK file if developing on Android).

During the demo:

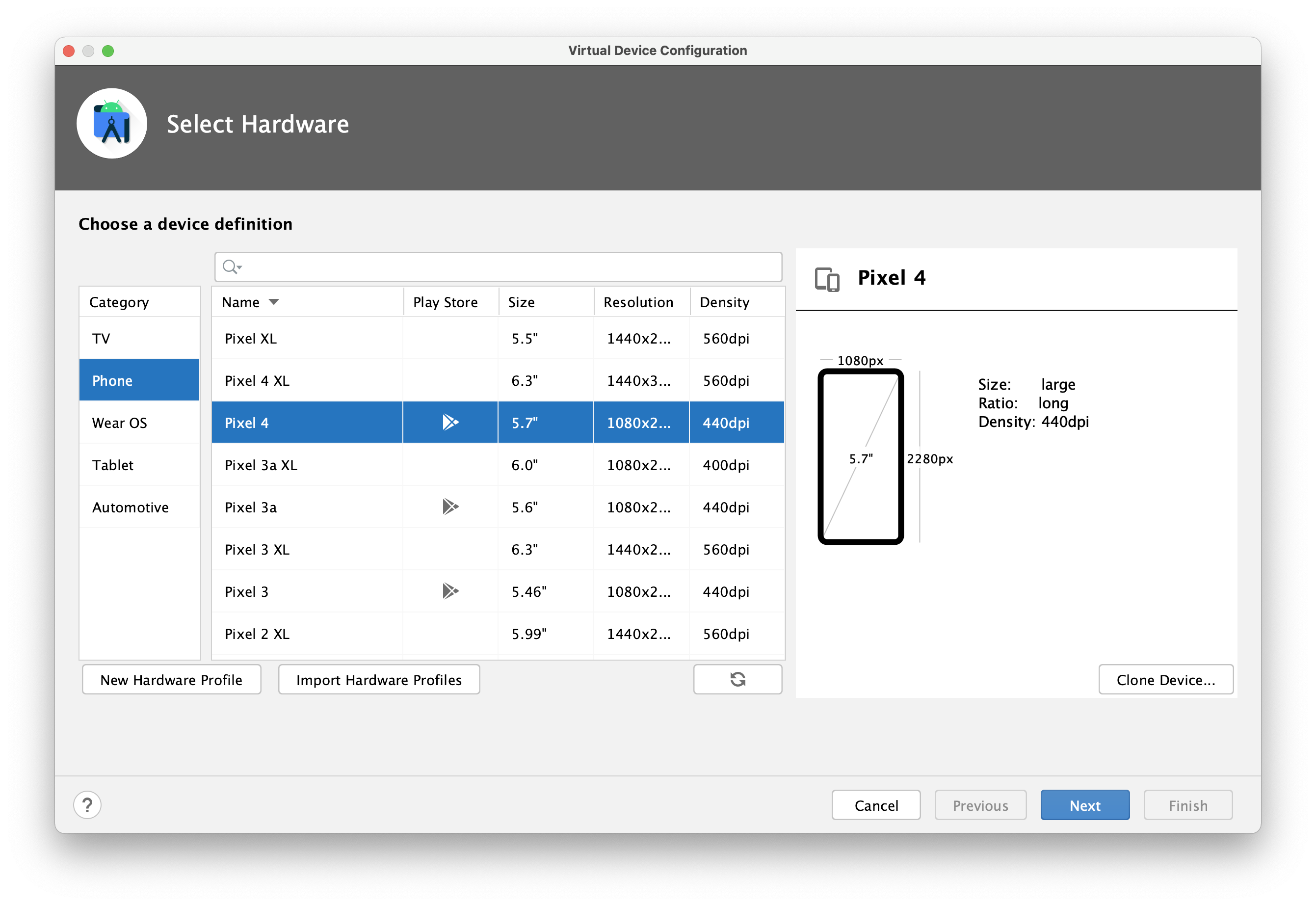

- You must have your application installed on a computer that you bring to the demo (running from an Android Virtual Device (AVD) is acceptable for Android projects).

- Bring up the list of issues completed (

Plan>Issue Boards). - Demonstrate each feature from the list using this machine.

- Each person on the team should demonstrate what they completed.

- We want to see working functionality when possible.

- You are allowed to show GitLab documents, unit tests results, or other work that demonstrates what you accomplished (since these are things that cannot easily be demonstrated in your application).

Your demo will be graded based on the features that are working during your demo. We strongly advise that you have everything working ahead of time.

Coding in the hall before the demo is a really, really bad idea.

How to submit

You do not need to submit anything. Your grade is based on your in-class demo, and your TAs assessment of your progress.

You should expect your TA to git clone your project and run your code following the demo to review what you showed them.

Final submission

This section describes a project milestone. See the schedule for due dates.

At the end of the course, we will review your entire project. Make sure that you have completed everything listed here.

Description

At the end of the term, your GitLab project, documentation and source code should be complete and consistent with one another. This means someone reading your proposal should see the features that you describe, unit tests should run properly, code should compile and run correctly and so on.

Additionally, your project artifacts need to be updated and reflect the end of your project.

- Issues should be assigned to the appropriate sprint milestones.

- Issue status should be correct i.e. completed and merged features should be marked as closed in GitLab.

- It’s acceptable to have open issues at the end of the project, but they should not be assigned to a milestone (and incomplete code should be on a feature branch, not merged back into main).

- All code should be committed and merged back to

main. We should be able to build frommainwithout errors.

What to include

1/ Project documentation

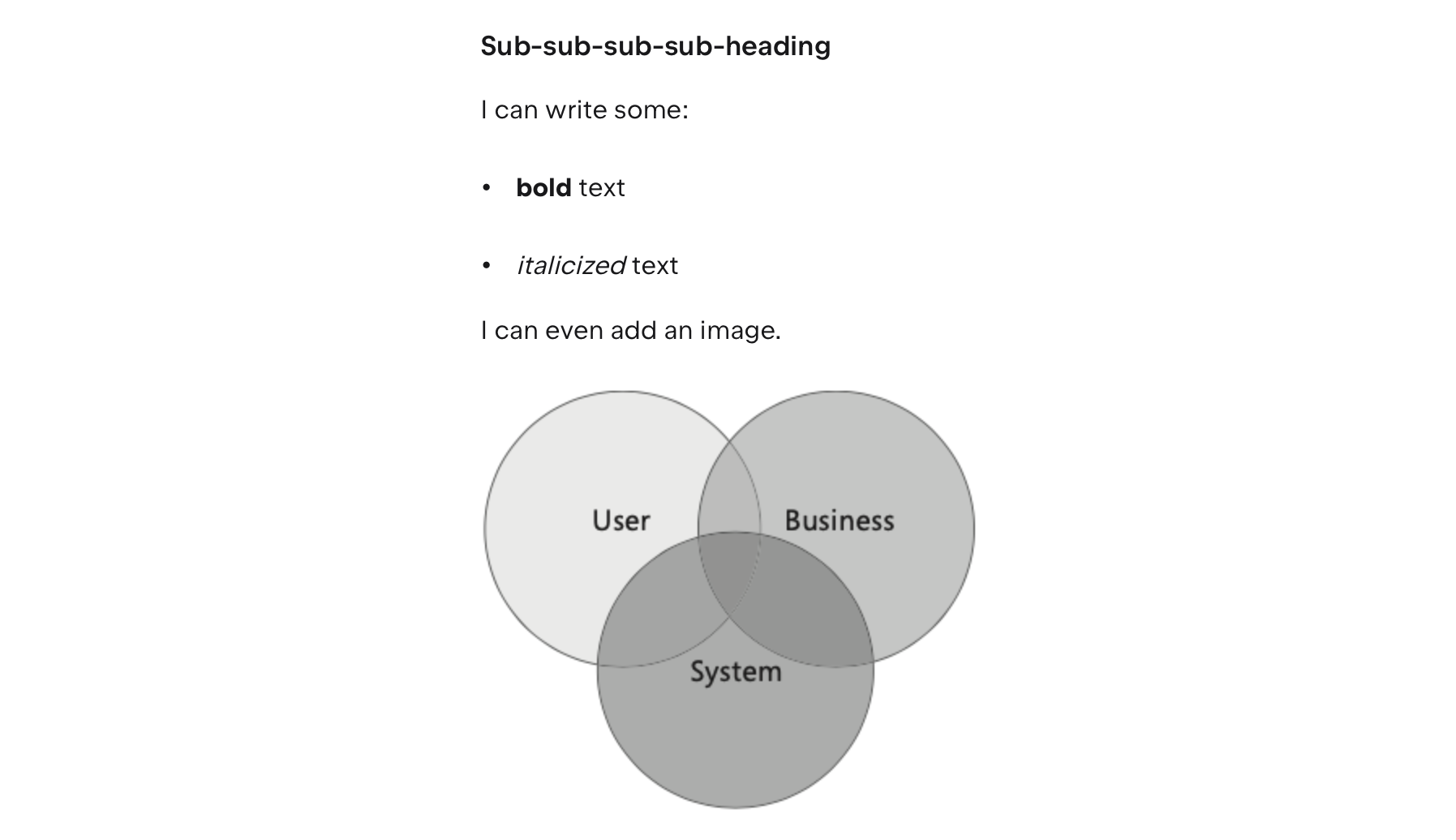

The following project documentation should exist and be stored in your GitLab project. Everything should be written in Markdown format, using Mermaid for injecting diagrams.

You should have a top-level README.md file, and a series of Wiki pages listed here.

README (README.md file)

Your top-level document should serve as the landing page for your project, and a table-of-contents for the rest of your content.

It should include the following sections:

# SUPER-COOL-PROJECT-NAME

## Title

A description of your project e.g., "an Uber-eats app for parakeets!".

## Project Description

A short description of your project, identifying your intended users,

and the problem that you are solving. (ED: think of this as your 15

second "elevator-pitch" of what your project is about).

## Video/Screenshots

Embed a video or series of screenshots that demonstrates using your

application (maximum 1 minute in length).

## Getting Started

Instructions on how to install and launch your project. If you have any

additional instructions that the TA needs, please put them here

(e.g., how to launch from a Docker container; how to install keys so

that they can connect to your database and so on).

## Team Details

Basic team information including:

* Team number

* Team members. Include full names and email addresses.

This should be followed by a Table of Contents section, with links to your Wiki pages.

## Documentation

* Project proposal (unchanged from initial release)

* Design proposal (unchanged from initial release)

* User documentation (new, **see below**)

* Design diagrams (new, **see below**)

## Project Information

* Team contract

* Meeting minutes

## Releases

* Bullet list of software release notes

Project Proposal (Wiki page)

This should be your original project proposal. Add a section at the end titled “Revisions” and describe any deviations you made from your original proposal, to what you delivered. If there are no deviations at all, then indicate that in this section.

Design Proposal (Wiki page)

This should be your original design proposal. Add a section at the end titled “Revisions” and describe any deviations you made from your original proposal, to what you delivered. If there are no deviations at all, then indicate that in this section.

User Guide (Wiki page)

This is a new requirement. You are required to write documentation explaining to your users how to use your product. You should include:

- Installation instructions, only if more detailed instructions are required (beyond what you provided in the README file).

- Installation requirements. List the operating systems and versions (if applicable) that you support. This is a good place to indicate what you used for testing.

- Features. Describe your main features and how they work. Screenshots are very helpful for doing this.

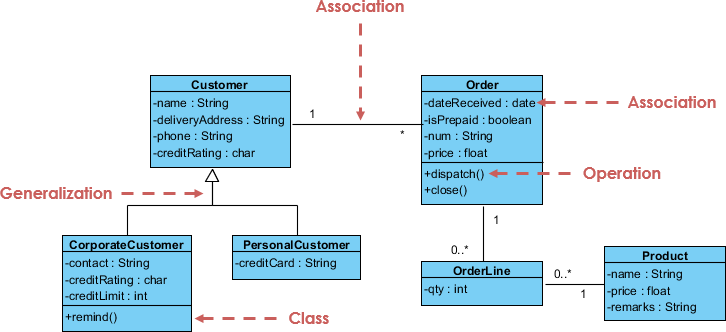

Design Diagrams (Wiki page)

This is a new requirement. Create the following diagrams using Mermaid.

- A high-level component diagram showing your application structure e.g, layered architecture, with external systems for auth and database.

- Create class diagrams for your main classes in your application.

- Focus on the critical internal classes:

views,view models,entities,modelsandserviceclasses. You do not need to create class diagrams for external systems like your database or the user interface compose functions (which are presumably behind an API).

- Focus on the critical internal classes:

- Make sure that you illustrate where the classes “fit” in a Layered Architecture e.g., show them grouped into layers.

Team Contract (Wiki page)