M17: Generative Recursion

Hint: If possible, make your browser wide enough to place the slides and commentary side-by-side.

The slides on this page are not accessible (for screen readers, etc). The 1up and 3up slides are better.

Module Topics

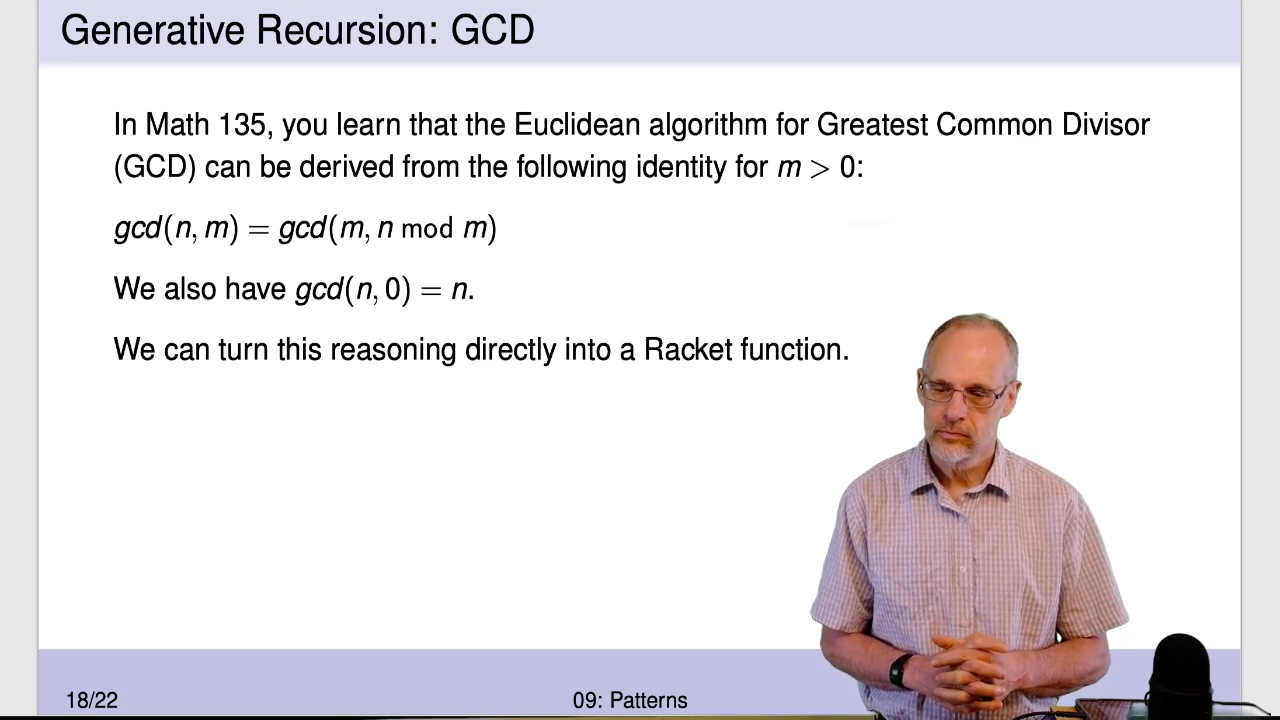

Module 8 introduced the different kinds of recursion we identify in CS135: simple recursion, accumulative recursion, mutual recursion, and generative recursion. We discussed GCD as an example of generative recursion and what makes it so hard.

Take 2:13 minutes to watch the rant again.

Video: Download m09.70_gcd

Briefly, someone (Euclid) had to have an “Aha!” moment and realize that throwing away the first parameter, replacing it with the second, and then doing an apparently random calculation to replace the second would get you closer to the solution. That is a much, much different process than following a data definition and deriving a template!

Correctness is important because we want the right answer.

Termination is important because we want the answer well before the universe ends.

In a non-recursive function none of the code repeats1. So the time taken to execute the function is the sum of the time for each of the functions it calls (plus a little overhead). If each of those called functions terminates (i.e. finishes in a finite time), the sum is also finite and thus the function terminates.

But in a recursive function the same code can be executed repeatedly. Each repetition takes some amount of time to execute. Unless we can guarantee that the number of repetitions is finite, the sum of those execution times will be infinite and the function won’t terminate.

We also discussed termination of simple recursion back in M05 but using different words.

Consider (gcd 100 85):

(gcd 85 100)

⇒ (gcd 100 85)

⇒ (gcd 85 15)

⇒ (gcd 15 10)

⇒ (gcd 10 5)

⇒ (gcd 5 0)The second argument gets smaller and smaller, but can’t get smaller than 0. So the function terminates.

The depth of recursion in this example is 5.

Each of the functions we’ve considered so far have something that gets smaller

and smaller until it stops. That might be the size of the list, the

2nd parameter to GCD, the parameter in count-down or the difference

between the parameter and the stopping point in count-up-to.

But here’s an example where the parameter does not keep getting smaller. Other than the base case, if the parameter is even the next application is smaller. But if it’s odd, the next application is a lot larger. Instead of a decreasing sequence, the size of the parameter oscillates.

As of 2020, the Collatz Conjecture has been checked for all values of n up to 268. All of those values eventually terminated.

But the question remains, does one or more of the infinite number of untested values enter a cycle that does not terminate? We don’t know.

Pop the following code into DrRacket and try a few values: (collatz-list 5),

(collatz-list 23), (collatz-list 27), and (collatz-list 8400511).

(define (collatz-list n)

(cons n (cond

[(= n 1) empty]

[(even? n) (collatz-list (/ n 2))]

[ else (collatz-list (+ 1 (* 3 n)))])))Video: Download m17.20_quicksort

Suppose we made the mistake noted in the slide and wrote

(filter (lambda (x) (>= x pivot)) lon)

instead of

(filter (lambda (x) (>= x pivot)) (rest lon))

Then (my-quicksort (list 1 2 4 3)) would result in the subproblem

(my-quicksort (list 1 2 4 3)) instead of (my-quicksort (list 2 4 3)).

Because the recursive application is exactly the same as the original application, no progress is made and the function runs “forever”.

What’s not said in the slide is that on most lists, quicksort really is

quick. It’s much faster than insertion sort.

We talked about measuring efficiency in module 09. Insertion sort’s running time is always O(n2). Quicksort runs that slowly on some lists, but most of the time its running time is in the much more efficient family of O(n lg n).

-

If you’ve studied other programming languages that involve loops, you’ll need similar arguments for them. But we don’t have loops, so we can ignore them. ↩︎